- From: Bjoern Hoehrmann <derhoermi@gmx.net>

- Date: Mon, 16 Apr 2012 04:16:30 +0200

- To: www-archive@w3.org

- Message-ID: <rjvmo7pscf5jj9f2qrhngpdrnhgm5b7hg4@hive.bjoern.hoehrmann.de>

Moin.

I found some time to whip up a script that compares Internet Archive

public domain books with the corresponding Google plain text. The basic

script is at <https://gist.github.com/2395307>, rather quick and dirty,

but it does the job. It generates a JSON file for each page, like

{

"pars" : [

{

"lines" : [

{

"words" : [

{

"width" : 147,

"x" : "790",

"height" : 54,

"y" : "593",

"word" : "aller",

"scan" : "data:image/png;base64,iVBORw0KGgoAAAANSUh...",

"google_words" : [

"t'bbo."

],

"chars" : [

{

"width" : 32,

"y" : "608",

"scan" : "data:image/png;base64,iVBORw0KGgoAAAAA...",

"char" : "a",

"x" : "790",

"height" : 38,

"code" : 97

},

...

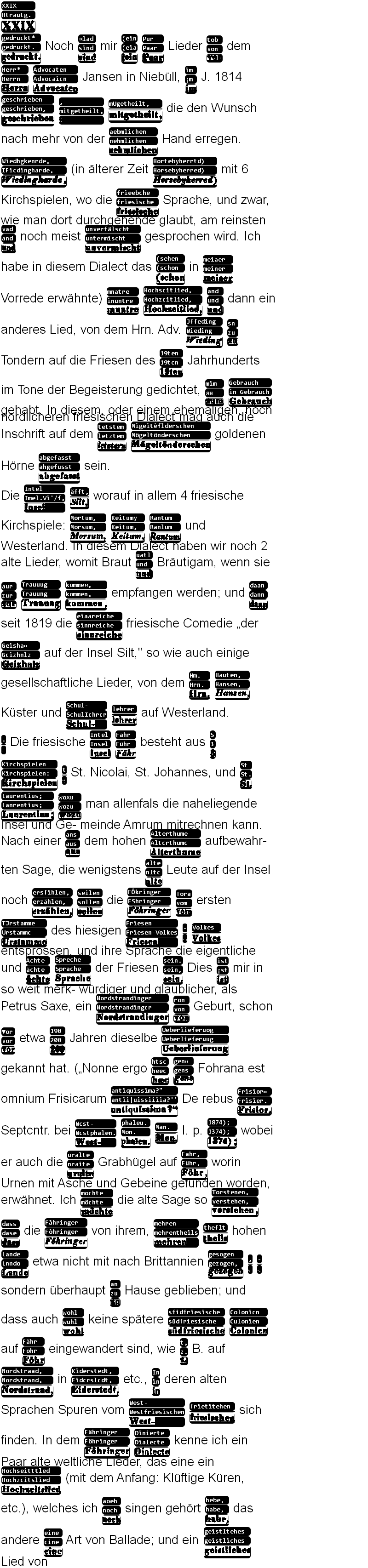

And I adapted my earlier script to produce some a visual diff, now with

the image data as third alternative, to get a better sense of errors in

the text and what might be done to fix them. I've attached the output

for a sample page (I used my http://cutycapt.sf.net utility to make the

shot, but didn't care much to make the style sheet compatible with the

Webkit version I've compiled it with, so it looks a bit messy).

I put in some extra code to deal with hyphenation, the Internet Archive

version keeps the hyphens but Google seems to remove them, which con-

fuses the diff algorithm I made and used. Punctuation is still a bit of

a similar issue, I am not sure how to address that yet, some words are

misrecognized as ending in punctuation even though they don't, so it'd

not be quite as simple as always splitting, say, non-letters from the

letters.

Some error patterns are quite obvious, names for instance are rendered

cursive in the book and very typically misinterpreted, commas and full

stops are often confused, 'c' and 'e', 'a' and 'n', 's' and 'z' and 't'

are, 'z' and 'x' are aswell, the Internet Archive tool likes to take an

's' for a guillemet when at the end of a word. With the confusion rules

if you will, you can for instance easily see that when Google sees a

word like "nltc", n<>a and c<>e gives "alte" as only interpretation if

you expect a proper german word, "Urstammc" is "Urstamme", and so on.

Algorithm::Diff's 'sdiff' would produce for the last example a series

of instructions, "u U U", "u r r", ... "c c e" meaning unchanged, "U"

in both at this position, change "c" into "e". If we run that over all

the words in the reference book these come out at the top:

1364 c e c

1288 c n u

1188 c a u

1028 c t l

873 c a n

853 c t s

784 c e s

671 c , .

608 c a s

577 c e o

537 c i ä

536 c m n

533 c k h

508 c t i

405 c o a

401 c 8 3

Google is on the right, so looking back at "nltc", in this short list

that would go as [nma][lt][t][ce], giving these options:

nltc nlte nttc ntte mltc mlte

mttc mtte altc alte attc atte

of these, only "alte" is a proper german word, and that's what the In-

ternet Archive saw, so there is a good chance, as the Internet Archive

guesses tend to be quite good, that "alte" is indeed correct. This can

also be used to spot possible cases where the two agree on the wrong

interpretation, an example I spotted was "Tondcrn". The c<>e confusion

is the most common, so that might be "Tondern", which is a town in Den-

mark, which is indeed what the text references. The 4-gram "dcrn" isn't

likely to appear in german text, that would be another clue.

Grepping Google's n-gram data, "dcrn" does occur as part of some words,

"Aendcrnng" (probably Aenderung), "Besondcrn" (Besondern), "Hindcrniß"

(Hinderniß), "Ländcrn" (Ländern), "Postmodcrne" (Postmoderne), in all

the cases, unless you hit the occasional code word or perhaps something

like this mail discussing OCR errors, it's basically wrong.

The bigram "dc" alone is already rather unusual, in the book, as far as

the Google plaintext goes, it appears only 101 times, properly only in

the form of "dch" like in Mädchen. In contrast, "de" occurs 9923 times.

More interesting cases, going by the attached image, are words like the

"unverfälscht", "untermischt", "unvermischt" (IA, Google, actual). All

three are proper words (literally, unfalsified, undermixed, undiluted),

and "unverfälscht" even makes sense in context, if you ignore that it's

a book from the 19th century, one could even argue "unverfälscht" is

more likely to be correct (and semantically they are quite similar in

this context). This would require a bit of a visual, geometrical solu-

tion; it's pretty clear that the "v" looks nothing like the "t" at the

end of the word, the "t" is higher, thicker at the bottom, and the "m"

is too small, too thick, and too dense to be an "f", even if you think

the "m" is "fä", it's too short, the "f" in the preceding "friesische"

clearly extends far beyond the top of an "m".

The JSON output generated by my script makes it easy to access the in-

dividual graphemes the OCR software recognized, thankfully both at the

word and at the grapheme level, the Internet Archive data has both but

in separate files with no direct link between them. I decided to ignore

the word-shape data and just took the bounding box of the glyphs on the

same line. I conducted a few experiments exploiting that (well, really,

I wrote a script that does just the extraction of individual glyphs, I

later combined several ad-hoc scripts into the result above, which is

why it's a bit of a mess). An interesting data point for instance is

that around 90% of the monochrome grapheme bitmaps are unique. I wonder

how more modern prints compare.

I particularily had a brief look at clustering, like taking all "k"s,

computing the average "k", and then compute the euclidean distance of

all "k"s to the average "k", and order them according to distance. That

produced fairly good results, putting the regular "k"s at the top, and

putting cursive "k"s a bit after the middle, and things clearly not "k"

at the end, with the occasional stray "h" and "b" inbetween. An option

there would be to split the "k" cluster at some point and then compute

new averages, re-assign to the new clusters; like I noted earlier, it'd

be interesting to separate regular and cursive for identification pur-

poses, and then treating both as "k" (or, more sophisticated, actually

treating the italics as italics).

A simpler thing would be applying this to the "unverfälscht", "unter-

mischt", "unvermischt" problem above, take the average of all "t"s in

the book and the average of all "v"s in the book, and then check which

is closer, which should resolve that particular issue. It might be good

enough to simply take the average of the letters on the same page to do

this, though for some letters that's not enough data, there is only one

"q" on this page, only one "y" (two, but one is misidentified).

Well, I'm bored now, but as a conclusion, let's say this OCR business

does not seem to be administered by your proverbial rocket scientists.

--

Björn Höhrmann · mailto:bjoern@hoehrmann.de · http://bjoern.hoehrmann.de

Am Badedeich 7 · Telefon: +49(0)160/4415681 · http://www.bjoernsworld.de

25899 Dagebüll · PGP Pub. KeyID: 0xA4357E78 · http://www.websitedev.de/

Attachments

- image/png attachment: sample.png

Received on Monday, 16 April 2012 02:16:53 UTC