- From: Bjoern Hoehrmann <derhoermi@gmx.net>

- Date: Tue, 10 Apr 2012 01:37:39 +0200

- To: www-archive@w3.org

- Message-ID: <hrp6o7djjfsbhm0o74lkkpvgof4f6cikta@hive.bjoern.hoehrmann.de>

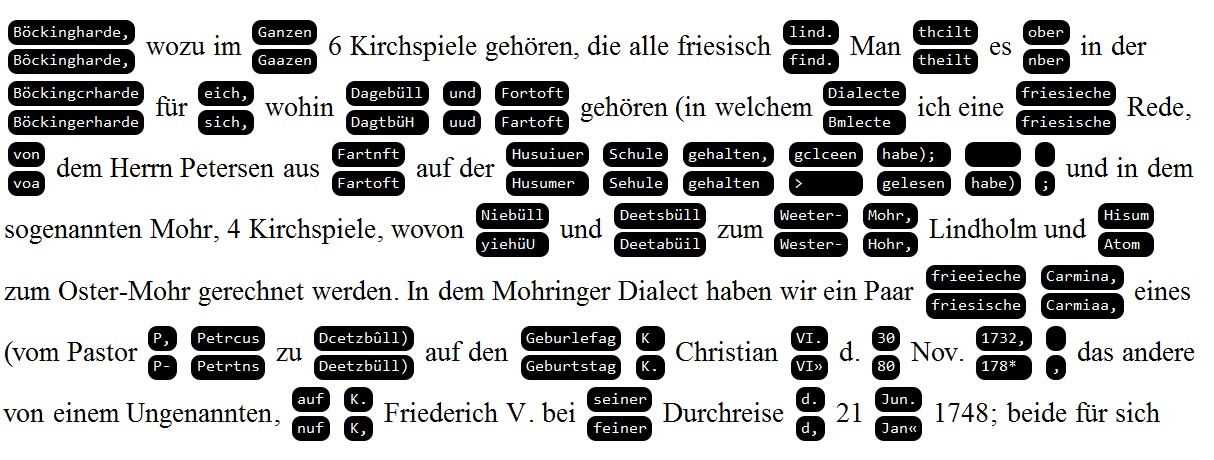

So, The Internet Archive hosts various public domain books that have been digitalized by Google. That's not terribly useful really, usually they tend to be difficult to read, and the OCR employed does not give good results at least for the books that interest me. But it turns out they use different OCR engines and they perform poorly in different ways! So I wondered how they compared and what could be done to recover from all the errors, other than using yet more existing OCR software, which isn't likely to produce better results. As a first approximation directly comparing some examples seemed a good idea, so I took some interesting excerpt and ran it through diff tools. Diff tools of course tend to be as advanced as you can witness, say, on Wikipedia, where you get the old text on one side and the new text on the other side, with "differences" highlighted in some form, and then it is up to you actually compare what's being highlighted, which I've found to be rather involved. For me http://lists.w3.org/Archives/Public/www-archive/2012Mar/0033.html it would be better if I actually had the differences directly adjacent while the unchanged text runs as usual, but the Ruby approach that works well for transliterating russian text, here we really have forks that represent essentially alternatives, so it needs a bit of a different vi- sual style. So I wrote a script using Perl's Algorithm::Diff 'sdiff' sub routine that generates inline tables for the alternatives. I've attached a sample rendering. (Quick hint as an aside, if you want to make sure you actually attach attachments, write you have attached them, then actually attach them, and then write an additional comment on what it shows or something along that line.) Most of these errors could be corrected simply by looking which words occur more frequently in texts of this language from the period (and I expect the same would hold for n-grams that make up the words, you'll find "ö" much more frequently than "ô" for instance, and "usuiuer" is not something that you would expect in a german word of the era either). There are other problems that should be easy to detect, like the many "friesisch" variant misrecognitions, having "Fortoft" and "Fartnft" so close next to each other should also be enough of a hint that you ought to spend more time analysing, not to mention that morphological analysis should easily fix the "lind", "find" issue in the first sentence (which is really a "sind", at the end of a sentence, neither of the results are likely, and certainly not grammatically correct, in german text. The Internet Archive data includes reports from the OCR software where it recognized each character along with the image data and the DJVU data has the same for words. There are some patterns to the errors, like the frequent misinterpretation of cursive (italicized) "ll" in names, which occurs a number of times in the relevant book (as part of "büll", a to- ponymical suffix indicating a settlements, dwellings). I would like to check whether you can exploit the size of the corpus (a whole book) to avoid this kind of error through clustering graphemes, the idea being something along the lines of, if you have a lot of cursive "l"s and many non-cursive "l"s, and one cluster is collectively quite different from the other, you might want to treat either both clusters as "l" or one of the clusters as never being "l"s, avoiding issues like "büil" if the data allows for that. (Here you would check again that the attachment is indeed present.) regards, -- Björn Höhrmann · mailto:bjoern@hoehrmann.de · http://bjoern.hoehrmann.de Am Badedeich 7 · Telefon: +49(0)160/4415681 · http://www.bjoernsworld.de 25899 Dagebüll · PGP Pub. KeyID: 0xA4357E78 · http://www.websitedev.de/

Attachments

- image/png attachment: diff.png

Received on Monday, 9 April 2012 23:38:07 UTC