- From: Chris Rogers <crogers@google.com>

- Date: Mon, 21 Jun 2010 12:27:00 -0700

- To: Jer Noble <jer.noble@apple.com>

- Cc: public-xg-audio@w3.org

- Message-ID: <AANLkTimrWD31WH7QeksfhRlT5GgSJ3dEYNtcXivqSmDr@mail.gmail.com>

Jer Noble and I had a great discussion on Friday about his ideas.

1. Ownership

Jer is quite right that the concept of ownership is not necessary to expose

in the javascript API. We looked carefully at ways that an implementation

could just "do the right thing" and I'm going to try to implement that.

This is a great simplification so I'll change it in the spec.
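As a rough illustration of what "do the right thing" might mean, here is a toy JavaScript model. It is not the actual implementation or the spec API. It assumes the context itself keeps a reference to any source that is playing and drops that reference when the sound finishes. SketchContext, SketchSource, playing, and finish() are hypothetical names made up for this sketch.

```javascript
// Toy model only: no audio is rendered. All names here are hypothetical.
class SketchContext {
  constructor() {
    // Sources that are playing (or scheduled) are kept alive here,
    // so the caller does not need to hold a reference or name an owner.
    this.playing = new Set();
  }
  createBufferSource() {
    return new SketchSource(this);
  }
}

class SketchSource {
  constructor(context) {
    this.context = context;
  }
  noteOn(when) {
    // Timing is ignored in this sketch; we only model the lifetime.
    this.context.playing.add(this);
  }
  finish() {
    // Stand-in for the moment the sound has finished playing.
    this.context.playing.delete(this);
  }
}

function playSound(context) {
  const source = context.createBufferSource();
  source.noteOn(0);
  // The local variable goes away here, but the context still holds the source.
}

const sketchContext = new SketchContext();
playSound(sketchContext);
const sizeWhilePlaying = sketchContext.playing.size;

// Simulate every playing sound reaching its end.
for (const source of [...sketchContext.playing]) source.finish();
const sizeAfterFinish = sketchContext.playing.size;
```

In this model the script never mentions ownership: the source stays reachable only while it is in the playing set, and ordinary garbage collection can reclaim it afterwards.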

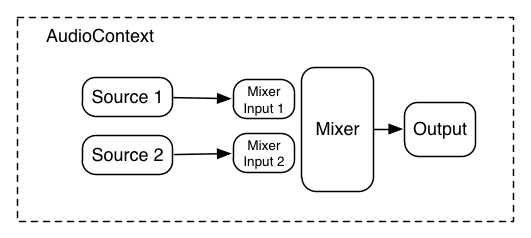

2. AudioMixerNode and AudioMixerInputNode

Another idea Jer brought up, which he explains in his email below, is to get

rid of the AudioMixerNode and AudioMixerInputNode and replace it with an

AudioGainNode. This along with the idea of being able to connect multiple

outputs to a single input makes things a lot cleaner. I love this idea and

will change the spec. I've created diagrams for the old way and the new

way:

Old Way:

[image: mixer-architecture-old.png]

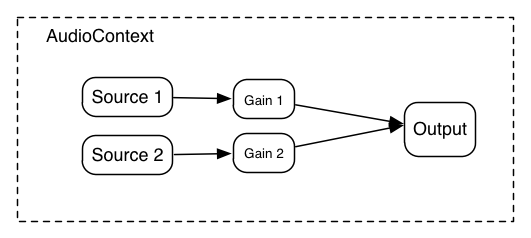

New Way:

Here it's possible to connect multiple outputs to a single input which

automatically acts as a unity gain summing junction.

[image: mixer-architecture-new.png]
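To make the summing behavior concrete, here is a small toy model in JavaScript. It is only a sketch under my own assumptions, not the proposed API: ToyNode, toySource, toyGain, and render() are hypothetical names, and each node produces a single number instead of an audio stream.

```javascript
// Toy model only: each node yields one number per render() call.
class ToyNode {
  constructor(process) {
    this.inputs = [];       // every upstream node connected to this node's input
    this.process = process; // maps the summed input to this node's output
  }
  connect(destination) {
    destination.inputs.push(this);
  }
  render() {
    // Unity-gain summing junction: connected outputs are added unscaled.
    let sum = 0;
    for (const upstream of this.inputs) sum += upstream.render();
    return this.process(sum);
  }
}

const toySource = (value) => new ToyNode(() => value);
const toyGain = (gain) => new ToyNode((sum) => sum * gain);

const sourceA = toySource(0.25);
const sourceB = toySource(0.5);
const mainGain = toyGain(0.5);
const sendGain = toyGain(1.0);

sourceA.connect(mainGain); // two outputs into one input: summed
sourceB.connect(mainGain);
sourceA.connect(sendGain); // fanout: the same output feeds a second input

const mainOut = mainGain.render(); // (0.25 + 0.5) * 0.5
const sendOut = sendGain.render(); // 0.25 * 1.0
```

The point of the sketch is that no separate mixer or mixer-input object is needed: summing happens at the input, and a gain node scales the result.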

3. We discussed the idea of constructors which automatically connect and

think it can work but may need to work on the API a little bit more.
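One possible shape for this, sketched in JavaScript under my own assumptions (nothing here is settled API): the constructor takes an optional destination, or an array of destinations, and connect() remains available for nodes whose destination is only known later. SketchNode and its outputs field are hypothetical names.

```javascript
// Hypothetical sketch: a node whose constructor can connect automatically.
class SketchNode {
  constructor(name, destination) {
    this.name = name;
    this.outputs = [];
    // Accept nothing, a single destination, or an array of destinations.
    const targets = destination === undefined ? [] : [].concat(destination);
    for (const target of targets) this.connect(target);
  }
  connect(destination) {
    this.outputs.push(destination);
    return this; // returning the node also allows chained connect() calls
  }
}

const output = new SketchNode("output");
const reverb = new SketchNode("reverb", output);
const chorus = new SketchNode("chorus", output);
const source = new SketchNode("source", [reverb, chorus]);

// A node created now and connected later, once its destination is known.
const deferred = new SketchNode("deferred");
deferred.connect(output);
```

Keeping the destination optional leaves the plain connect() path intact for the cases where connecting at construction time is not desirable.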

We talked about a few other ideas, but these were the main ones. Jer, did I

forget anything important?

Chris

On Wed, Jun 16, 2010 at 12:21 PM, Chris Rogers <crogers@google.com> wrote:

> Hi Jer, it might be easiest to discuss these ideas offline. It'd be great

> to look at it together at a whiteboard, then we can get back to the group

> with our results.

>

>

> On Tue, Jun 15, 2010 at 5:20 PM, Jer Noble <jer.noble@apple.com> wrote:

>

>>

>> On Jun 15, 2010, at 3:51 PM, Chris Rogers wrote:

>>

>> Hi Jer, thanks for your comments. I'll try to address the points you

>> bring up:

>>

>>

>>

>>> Hi Chris,

>>>

>>> I'm in the midst of reviewing your spec, and I have a few comments and

>>> suggestions:

>>>

>>>

>>> - *Ownership*

>>>

>>>

>>> Building the concept of lifetime-management into the API for AudioNodes

>>> seems unnecessary. Each language has its own lifetime-management concepts,

>>> and in at least one case (JavaScript) the language lifetime-management will

>>> directly contradict the "ownership" model defined here. For example, in

>>> JavaScript the direction of the "owner" reference implies that the AudioNode

>>> owns its "owner", not vice versa.

>>>

>>>

>> I think the idea of ownership is important and I'll try to explain why.

>> There's a difference between the javascript object (the AudioNode) and its

>> underlying C++ object which implements its behavior. The ownership for the

>> javascript object itself behaves exactly the same as other javascript

>> objects with reference counting and garbage collection. However, the

>> underlying/backing C++ object may (in some cases) persist after the

>> javascript object no longer exists. For example, consider the simple case

>> of triggering a sound to play with the following javascript:

>>

>>

>>

>> function playSound() {

>> var source = context.createBufferSource();

>> source.buffer = dogBarkingBuffer;

>> source.connect(context.output);

>> source.noteOn(0);

>> }

>>

>> The javascript object *source* may be garbage collected immediately after

>> playSound() is called, but the underlying C++ object representing it may

>> very well still be connected to the rendering graph generating the sound of

>> the barking dog. At some later time when the sound has finished playing, it

>> will automatically be removed from the rendering graph in the realtime

>> thread (which is running asynchronously from the javascript thread). So,

>> strictly speaking the idea of *ownership* comes into play more at the

>> level of the underlying C++ objects and not the javascript objects

>> themselves. If you keep these ideas in mind while looking at my dynamic

>> lifetime example in the specification, maybe things will make a bit more

>> sense.

>>

>>

>> Even in that case, the "ownership" seems like an underlying implementation

>> detail. If the C++ object can live on after the JavaScript GC has "deleted"

>> the JS object, then is the lifetime management concept of the "owner" (as

>> exposed in JavaScript) really necessary?

>>

>> Because it seems like in JavaScript, you already have the ability to

>> create one-shot, self-destructing AudioNodes, and the concept of "ownership"

>> is as easy to implement as adding a global "var" pointing to an AudioNode.

>> In fact, without the "owner" concept, your dynamic lifetime example would

>> work exactly the same:

>>

>> function playSound() {

>> var oneShotSound = context.createBufferSource();

>> oneShotSound.buffer = dogBarkingBuffer;

>>

>> // Pass the oneShotSound as the owner so the filter, panner,

>> // and mixer input will go away when the sound is done.

>> var lowpass = context.createLowPass2Filter(); // no owner

>> var panner = context.createPanner(); // no owner

>> var mixerInput2 = mixer.createInput(); // no owner

>>

>> // Make connections

>> oneShotSound.connect(lowpass);

>> lowpass.connect(panner);

>> panner.connect(mixerInput2); // this used to read: panner.connect(mixer)

>>

>> panner.listener = listener;

>>

>> oneShotSound.noteOn(0.75);

>> }

>>

>>

>> I've modified the example to remove the "owner" params to the constructor

>> functions. At the point where "oneShotSound.noteOn(0.75)" is called, there

>> is a local reference to *oneShotSound*, *lowpass*, *panner*, *mixer*, and

>> *mixerInput2*. Once playSound() returns, those references disappear.

>> *oneShotSound* could be immediately GC'd, but it seems to make more sense

>> that *oneShotSound* holds a reference to itself as long as it's playing

>> (or is scheduled to play).

>>

>> At some time in the future, the scheduled noteOn() finishes. It releases

>> the reference to itself, and thus no one has a reference to

>> *oneShotSound* any longer, so it is GC'd. *oneShotSound* was the only holder of a

>> reference to *lowpass*, so *lowpass* is GC'd. *lowpass* was the only

>> holder of a reference to *panner*, so *panner* is GC'd. And so on.

>>

>> The end result is that all the filters and sources created inside

>> playSound() are removed from the graph as soon as *oneShotSound* finishes

>> playing. Which is exactly the same behavior as when the filters have an

>> explicit owner. So, I don't see that exposing an "owner" property adds any

>> functionality.

>>

>>

>>> Additionally, it seems that it's currently impossible to change the

>>> "owner" of an AudioNode after that node has been created. Was the "owner"

>>> attribute left out of the AudioNode API purposefully?

>>>

>>>

>> *owner* could be added in as a read-only attribute, but I think it is not

>> the kind of thing which should change after the fact of creating the object.

>>

>>

>>> - *Multiple Outputs*

>>>

>>>

>>> While there is an explicit AudioMixerNode, there's no equivalent

>>> AudioSplitterNode, and thus no explicit way to mux the output of one node to

>>> multiple inputs.

>>>

>>>

>> It isn't necessary to have an AudioSplitterNode because it's possible to

>> connect an output to multiple inputs directly (this is called *fanout*).

>> You may be thinking in terms of AudioUnits, which require an explicit

>> splitter. I remember when we made that design decision with AudioUnits, but

>> it is not a problem here.

>>

>> So *fanout* from an output to multiple inputs is supported without fuss

>> or muss.

>>

>>

>> That seems reasonable. The spec should be updated to specifically call

>> that out, since it confused the heck out of me. However, see the next

>> comment:

>>

>>> In the sample code attached to Section 17, a single source (e.g. source1)

>>> is connected to multiple inputs, merely by calling "connect()" multiple

>>> times with different input nodes. This doesn't match the AudioNode API,

>>> where the "connect()" function takes three parameters, the input node, the

>>> output index, and the input index. However, I find the sample code to be a

>>> much more natural and easier to use API. Perhaps you may want to consider

>>> adopting this model for multiple inputs and outputs everywhere.

>>>

>>>

>> Maybe I should change the API description to be more explicit here, but

>> the sample code *does* match the API because the *output* and *input*

>> parameters are optional and default to 0.

>>

>>

>> Okay then, but if every output is capable of connecting to multiple

>> inputs, why would you need multiple outputs? Will any AudioNode ever have a

>> "numberOfOutputs" > 1, and if so, what functionality does that provide above

>> and beyond a single, fanout output?

>>

>>

>>

>>>

>>> - *Multiple Inputs*

>>>

>>>

>>> This same design could be applied to multiple inputs, as in the case with

>>> the mixers. Instead of manually creating inputs, they could also be created

>>> dynamically, per-connection.

>>>

>>> There is an explicit class, AudioMixerNode, which creates

>>> AudioMixerInputNodes, demuxes their outputs together, and adjusts the final

>>> output gain. It's somewhat strange that the AudioMixerNode can create

>>> AudioMixerInputNodes; that seems to be the responsibility of the

>>> AudioContext. And it seems that this section could be greatly simplified by

>>> dynamically creating inputs.

>>>

>>> Let me throw out another idea. AudioMixerNode and AudioMixerInputNode

>>> would be replaced by an AudioGainNode. Every AudioNode would be capable of

>>> becoming an audio mixer by virtue of dynamically-created demuxing inputs.

>>> The API would build upon the revised AudioNode above:

>>>

>>>

>>> interface AudioGainNode : AudioNode

>>>

>>> {

>>>

>>> AudioGain gain;

>>>

>>> void addGainContribution(in AudioGain);

>>>

>>> }

>>>

>>>

>>> The sample code in Section 17 would then go from:

>>>

>>>

>>> mainMixer = context.createMixer();

>>> send1Mixer = context.createMixer();

>>> send2Mixer = context.createMixer();

>>>

>>> g1_1 = mainMixer.createInput(source1);

>>> g2_1 = send1Mixer.createInput(source1);

>>> g3_1 = send2Mixer.createInput(source1);

>>> source1.connect(g1_1);

>>> source1.connect(g2_1);

>>> source1.connect(g3_1);

>>>

>>>

>>> to:

>>>

>>>

>>> mainMixer = context.createGain();

>>> send1Mixer = context.createGain();

>>> send2Mixer = context.createGain();

>>>

>>> source1.connect(mainMixer);

>>> source1.connect(send1Mixer);

>>> source1.connect(send2Mixer);

>>>

>>> Per-input gain could be achieved by adding an inline AudioGainNode

>>> between a source output and its demuxing input node:

>>>

>>>

>>> var g1_1 = context.createGain();

>>>

>>> source1.connect(g1_1);

>>>

>>> g1_1.connect(mainMixer);

>>>

>>> g1_1.gain.value = 0.5;

>>>

>>>

>>> If the default constructor for AudioNodes is changed from "in AudioNode

>>> owner" to "in AudioNode input", then a lot of these examples can be cleaned

>>> up and shortened. That's just syntactic sugar, however. :)

>>>

>>>

>> It doesn't look like it actually shortens the code to me. And I'm not

>> sure we can get rid of the idea of *owner* due to the dynamic lifetime

>> issues I tried to describe above. But maybe you can explain some more.

>>

>>

>> Sure thing.

>>

>> The code above isn't much shorter, granted. However, in your code

>> example, *send1Mixer* and *send2Mixer* could then be removed, and each of

>> the AudioGainNodes could be connected directly to *reverb* and *chorus*,

>> eliminating the need for those mixer nodes. Additionally, if any one of the

>> *g#_#* filters is extraneous (in that they will never have a gain !=

>> 1.0), they can be left out. This has the potential to make the audio graph

>> much, much simpler.

>>

>> Also, in your "playNote()" example above, you have to create an

>> AudioMixerNode and AudioMixerInputNode, just to add a gain effect to a

>> simple one-shot note. With the above change in API, those two nodes would

>> be replaced by a single AudioGainNode.

>>

>> Also, eliminating the AudioMixerNode interface removes one class from the

>> IDL, and eliminates the single piece of API where an AudioNode is created by

>> something other than the AudioContext, all without removing any

>> functionality.

>>

>> Let me give some sample code which demonstrates how much shorter the

>> client's code could be. From:

>>

>> function playSound() {

>> var source = context.createBufferSource();

>> source.buffer = dogBarkingBuffer;

>> var reverb = context.createReverb();

>> source.connect(reverb);

>> var chorus = context.createChorus();

>> source.connect(chorus);

>> var mainMixer = context.createMixer();

>> var gain1 = mainMixer.createInput();

>> reverb.connect(gain1);

>> var gain2 = mainMixer.createInput();

>> chorus.connect(gain2);

>> mainMixer.connect(context.output);

>> source.noteOn(0);

>> }

>>

>>

>> to:

>>

>> function playSound() {

>> var source = context.createBufferSource();

>> source.buffer = dogBarkingBuffer;

>> var reverb = context.createReverb();

>> source.connect(reverb);

>> var chorus = context.createChorus();

>> source.connect(chorus);

>> reverb.connect(context.output);

>> chorus.connect(context.output);

>> source.noteOn(0);

>> }

>>

>>

>> Or the same code with the Constructors addition below:

>>

>> function playSound() {

>> var reverb = context.createReverb(context.output);

>> var chorus = context.createChorus(context.output);

>> var source = context.createBufferSource([reverb, chorus]);

>> source.buffer = dogBarkingBuffer;

>>

>> source.noteOn(0);

>> }

>>

>>

>>

>> Okay, so I kind of cheated and passed in an Array to the

>> "createBufferSource()" constructor. But that seems like a simple addition

>> which could come in very handy, especially given the "fanout" nature of

>> inputs. Taken together, this brings a 13-line function down to 5 lines.

>>

>> Of course, not all permutations will be as amenable to simplification as

>> the function above. But I believe that even the worst case scenario is

>> still an improvement.

>>

>>

>>> - *Constructors*

>>>

>>>

>>> Except for the AudioContext.output node, every other created AudioNode

>>> needs to be connected to a downstream AudioNode input. For this reason, it

>>> seems that the constructor functions should be changed to take an "in

>>> AudioNode destination = 0" parameter (instead of an "owner" parameter).

>>> This would significantly reduce the amount of code needed to write an

>>> audio graph. In addition, anonymous AudioNodes could be created and

>>> connected without having to specify local variables:

>>>

>>> compressor = context.createCompressor(context.output);

>>>

>>> mainMixer = context.createGain(compressor);

>>>

>>>

>>> or:

>>>

>>> mainMixer = context.createGain(

>>> context.createCompressor(context.output));

>>>

>>>

>> I like the idea, but it may not always be desirable to connect the

>> AudioNode immediately upon construction. For example, there may be cases

>> where an AudioNode is created, then later passed to some other function

>> where it is finally known where it needs to be connected. I'm sure we can

>> come up with variants on the constructors to handle the various cases.

>>

>>

>> Oh, I'm not suggesting that constructors replace the connect() function.

>> That's why I called this change "syntactic sugar". The same effect could

>> be had by returning "this" from the connect() function, allowing such

>> constructions as:

>>

>> source2.connect(g1_2).connect(g2_2).connect(g3_2);

>>

>> and:

>>

>> mainMixer =

>> context.createGain().connect(context.createCompressor().connect(context.output));

>>

>>

>> But I think the connect-in-the-constructor alternative is less confusing.

>>

>> Thanks again!

>>

>> -Jer

>>

>

>

Attachments

- image/png attachment: mixer-architecture-old.png

- image/png attachment: mixer-architecture-new.png

Received on Monday, 21 June 2010 19:27:36 UTC