- From: John Foliot <john.foliot@deque.com>

- Date: Mon, 24 Jun 2019 10:22:21 -0500

- To: "Abma, J.D. (Jake)" <Jake.Abma@ing.com>

- Cc: "Hall, Charles (DET-MRM)" <Charles.Hall@mrm-mccann.com>, Alastair Campbell <acampbell@nomensa.com>, Silver Task Force <public-silver@w3.org>, Andrew Kirkpatrick <akirkpat@adobe.com>

- Message-ID: <CAKdCpxy159a0dftUK3-P5_FfBwQG=5kF0SQ1nfrr_DcvxAAZuw@mail.gmail.com>

Hi Jake,

I agree that feasibility will need to be discussed; however, I think we can

set it up in such a way that the "hard testing", which I agree takes more

time and money, will be valuable enough "score-wise" that it will be worth

the effort.

I also note that your scoring suggestions have always been expressed as

XX/100 - i.e. a percentage score.

I tend to agree that no matter the "points score", it can be expressed as

a percentage. However, I've also envisioned that as we get more granular

in our requirements, individual requirements will have

greater and lesser value when totaled up. I do not at this time rule out a

model that says "minimum compliance = 739 'points', and if your site

accrues 637 points you are at (637/739 = 0.8619756427604871) - or 86%

conformant."
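
As a rough illustration of the arithmetic above, here is a minimal Python sketch. The function name percent_conformant is hypothetical; only the figures 739 and 637 come from this message, and nothing here is a decided Silver scoring rule.

```python
def percent_conformant(points_earned: float, points_required: float) -> float:
    """Return earned points as a percentage of the required total."""
    if points_required <= 0:
        raise ValueError("points_required must be positive")
    return 100.0 * points_earned / points_required


# Figures from the example above: 637 points accrued against a 739-point minimum.
score = percent_conformant(637, 739)
print(f"{score:.0f}% conformant")  # prints: 86% conformant
```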

However, our TF has yet to determine whether to simply go with a

hard-number score ("2158 = the total number of points you can ever get to

be *perfect*"), or whether we ultimately express that as a percentage with

regard to reporting and conformance.

One of the many unanswered questions we have before us. (And if we *do*

decide to go with percentages, how many decimal places do we go to? x.xx?

x.xxx? none? All TBD)
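
To make the decimal-places question concrete, the sketch below formats the 637/739 example at three candidate precisions. The helper name format_score is hypothetical, and the choice of 0, 2 and 3 places simply mirrors the options listed above; which one to use remains TBD.

```python
def format_score(percent: float, places: int) -> str:
    """Format a percentage with a fixed number of decimal places."""
    return f"{percent:.{places}f}%"


score = 100 * 637 / 739  # the 637/739 example, expressed as a percentage

for places in (0, 2, 3):
    print(places, "->", format_score(score, places))
# prints:
# 0 -> 86%
# 2 -> 86.20%
# 3 -> 86.198%
```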

JF

On Sat, Jun 22, 2019 at 9:32 AM Abma, J.D. (Jake) <Jake.Abma@ing.com> wrote:

> Just some thoughts:

>

> I do like all of the ideas from all of you but are they really feasible?

>

> By feasible I mean in terms of time to test, money spent, the difficulty

> of compiling a score and the expertise to judge all of this?

>

> I would love to see a simple framework with clear categories for valuing

> content, like:

>

> - Original WCAG score => pass/fail = 67/100

> - How often do pass/fails occur => not often / often / very often =

> 90/100

> - What is the severity of the fails => not that bad / bad / blocking =

> 70/100

> - How easy it is to finish a task => easy / average / hard

> = 65/100

> - What is the quality of the translations / alternative text, etc.

> = 72/100

> - How understandable is the content => easy / average / hard = 55/100

>

> Total = 69/100
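
One way to read the quoted total is as an unweighted mean of the six category scores, taking every figure as a score out of 100. That reading is an assumption, as are the dictionary keys below; the quoted message does not say how the total was compiled.

```python
# Category scores from the quoted list, each taken as a score out of 100.
category_scores = {
    "original WCAG pass/fail": 67,
    "how often pass/fails occur": 90,
    "severity of the fails": 70,  # assumed to be out of 100
    "ease of finishing a task": 65,
    "quality of translations / alternative text": 72,
    "understandability of content": 55,
}

mean_score = sum(category_scores.values()) / len(category_scores)
print(f"unweighted mean: {mean_score:.2f}/100")  # prints: unweighted mean: 69.83/100
```

Truncating 69.83 gives the quoted 69/100; rounding would give 70/100.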

>

> And then also thinking about feasibility of this kind of measuring.

> Questions like: will it take 6 times as long to test as an audit now?

> Will only a few people in the world be able to judge all categories

> sufficiently?

>

> Cheers,

> Jake

>

> ------------------------------

> *From:* John Foliot <john.foliot@deque.com>

> *Sent:* Saturday, June 22, 2019 12:36 AM

> *To:* Hall, Charles (DET-MRM)

> *Cc:* Alastair Campbell; Silver Task Force; Andrew Kirkpatrick

> *Subject:* Re: Conformance and method 'levels'

>

> Hi Charles,

>

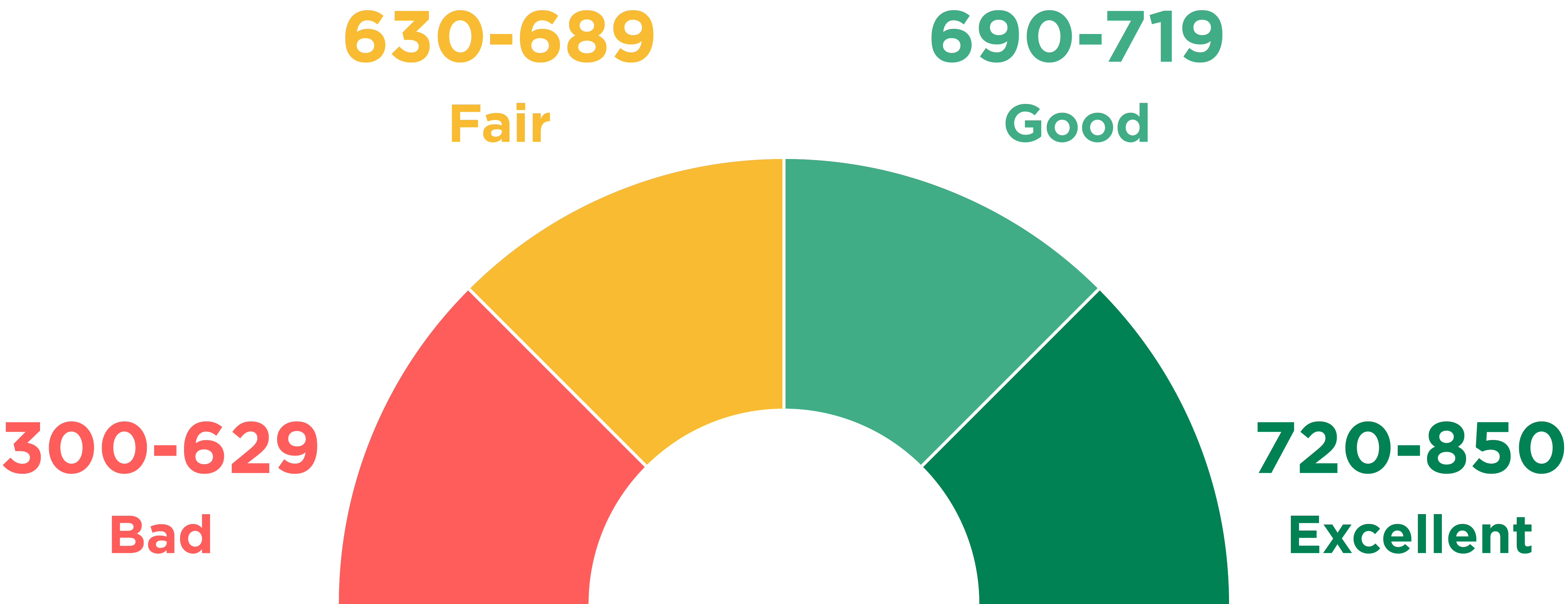

> I for one am under the same understanding, and I see it as far more

> granular than just Bronze, Silver or Gold plateaus, but that rather,

> through the accumulation of points (by doing good things) you can advance

> from Bronze, to Silver to Gold - not for individual pages, but rather **for

> your site**. (I've come to conceptualize it as similar to your FICO

> score, which numerically improves or degrades over time, yet your score is

> still always inside of a "range" from Bad to Excellent: increasing your

> score from 638 to 687 is commendable and a good stretch, yet you are still

> only - and remain - in the "Fair" range, so stretch harder still).

>

> [image: image.png]

> [alt: a semi-circle graph showing the 4 levels of FICO scoring: Bad, Fair,

> Good, and Excellent, along with the range of score values associated to

> each section. Bad is a range of 300 points to 629 points, Fair ranges from

> 630 to 689 points, Good ranges from 690 to 719 points, and excellent ranges

> from 720 to 850 points.]
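
The band idea can be sketched as a simple range lookup. The ranges below are the ones given in the alt text above; the names FICO_BANDS and band_for are hypothetical, and this only illustrates the analogy, not any agreed Bronze/Silver/Gold thresholds.

```python
# (low, high, label) ranges as described in the alt text above.
FICO_BANDS = [
    (300, 629, "Bad"),
    (630, 689, "Fair"),
    (690, 719, "Good"),
    (720, 850, "Excellent"),
]


def band_for(score: int) -> str:
    """Return the label of the band that contains the score."""
    for low, high, label in FICO_BANDS:
        if low <= score <= high:
            return label
    raise ValueError(f"score {score} is outside the 300-850 range")


# Moving from 638 to 687 improves the number but not the band.
print(band_for(638), band_for(687))  # prints: Fair Fair
```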

>

> I've also arrived at the notion that your score is never going to be a

> "one-and-done" numeric value, but that your score will change based on the

> most current data available* (in part because we all know that web sites

> [sic] are living breathing organic things, with content changes being

> pushed on a regular - in some cases daily or hourly - basis.)

>

> This then also leads me to conclude that your "Accessibility Score" will

> be a floating total of points, with those points being impacted not only by

> specific "techniques", but equally (if not more importantly) by functional

> outcomes. And so the model of:

>

>

> - *Bronze: EITHER provide AD or transcript*

> - *Silver: provide AD and transcript*

> - *Gold: Provide live transcript or live AD.*

>

>

> ...feels rather simplistic to me. Much of our documentation *speaks of

> scores* (which I perceive to be numeric in nature), while what Alastair

> is proposing is simply Good, Better, Best - with no actual "score" involved.

>

> Additionally, nowhere in Alastair's metric is there a measurement for

> "quality" of the caption, transcript or audio description (should there be?

> I believe yes), nor for that matter (in this particular instance) a

> recognition of the two very varied approaches to providing 'support assets'

> to the video: in-band or out-of-band (where in-band = the assets are

> bundled inside of the MP4 wrapper, versus out-of-band, where captions and

> Audio Descriptions are declared via the <track> element.) From a

> "functional" perspective, providing the assets in-band, while slightly

> harder to do production-wise, is a more robust technique (for lots of

> reasons), so... do we reward authors with a "better" score if they use the

> in-band method? And if yes, how many more "points" do they get (and why

> that number?) If no, why not? For transcripts, does providing the

> transcript as structured HTML earn you more points over providing the

> transcript as a .txt file? A PDF? (WCAG 2.x doesn't seem to care about

> that) Should it?

>

> (* This is already a very long email, so I will just state that I have

> some additional ideas about stale-dating data as well, as I suspect a

> cognitive walk-through result from 4 years ago likely has little-to-no

> value today...)

>

> ******************

> In fact, if we're handing out points, how many points **do** you get for

> minimal functional requirement for "Accessible Media" (aka "Bronze"), and

> what do I need to do to increase my score to Silver (not on a single asset,

> but across the "range" of content - a.k.a. pages - scoped by your

> conformance claim) versus Gold?

>

> Do you get the same number of points for ensuring that the language of the

> page has been declared (which to my mind is the easiest SC to meet) - does

> providing the language of the document have the same impact on users as

> ensuring that Audio Descriptions are present and accurate? If (like me) you

> believe one to be far more important than the other, how many points does

> either requirement start with (as a representation of "perfect" for that

> requirement)? For that matter, do we count up or down in our scoring

> (counting up = minimal score that improves, counting down = maximum score

> that degrades)?

>

> (ProTip: I'd also revisit the MAUR

> <https://www.w3.org/TR/media-accessibility-reqs/> for ideas on how to

> improve your score for Accessible Media, which is more than just captions

> and audio description).

>

> Then, of course, is the conundrum of "page scoring" versus "site scoring",

> where a video asset is (likely) displayed on a "page", and perhaps there

> are multiple videos on multiple pages, with accessibility support ranging

> from "Pretty good" on one example, to "OMG that is horrible" on another

> example... how do we score that on a site-level basis? If I have 5 videos

> on my site, and one has no captions, transcripts or Audio Descriptions

> (AD), two have captions and no AD or transcripts, one has captions and a

> transcript but no AD, and one has all the required bits (caption, AD,

> transcript)... what's my score? Am I Gold, Bronze, or Silver? Why?
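
The five-video scenario can at least be written down as data and tallied. The sketch below does not answer the scoring question; it only counts coverage per asset type, and the variable names are hypothetical.

```python
REQUIRED = ("captions", "transcript", "audio description")

# The five videos described above, each as the set of assets it provides.
videos = [
    set(),                                            # nothing at all
    {"captions"},                                     # captions only
    {"captions"},                                     # captions only
    {"captions", "transcript"},                       # no audio description
    {"captions", "transcript", "audio description"},  # all required bits
]

# How many videos provide each asset, and how many provide all three.
coverage = {asset: sum(asset in v for v in videos) for asset in REQUIRED}
complete = sum(all(asset in v for asset in REQUIRED) for v in videos)

print(coverage)  # prints: {'captions': 4, 'transcript': 2, 'audio description': 1}
print(f"{complete} of {len(videos)} videos have every asset")  # prints: 1 of 5 videos have every asset
```

How such a tally would turn into points, or into Bronze, Silver or Gold, is exactly the open question.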

>

> And if I clean up 3 of those five videos above, but leave the other two

> as-is, do I see an increase in my score? If yes, by how much? Why? Do I get

> more points for cleaning up the video that lacks AD *and* transcript

> versus not as many points for cleaning up the video that just needs

> audio descriptions? Does adding audio descriptions accrue more points than

> just adding a transcript? Can points, as numeric values, also include

> decimal points? (i.e. 16.25 'points' out of a maximum number available of

> 25)? Is this the path we are on?

>

> *Scoring is *everything** if we are moving to a Good, Better, Best model

> for all of our web accessibility conformance reporting. Saying you are at

> "Silver", without knowing explicitly how you got there will be a major

> hurdle that we'll need to be able to explain.

>

> It is for these reasons that I have volunteered to help work on the

> conformance model, as I am of the opinion that all the other migration work

> will eventually run into this scoring issue as a major blocker: no matter

> which existing SC I consider, I soon arrive at variants of the questions

> above (and more), all related to scalability, techniques, impact on

> different user-groups, and our move from page conformance reporting to site

> conformance reporting, and a sliding scale of "points" that we've yet to

> tackle - points that will come to represent Bronze, Silver and Gold.

>

> JF

>

>

> On Fri, Jun 21, 2019 at 12:53 PM Hall, Charles (DET-MRM) <

> Charles.Hall@mrm-mccann.com> wrote:

>

>> I understand the logical parallel.

>>

>>

>>

>> However, my understanding (perhaps influenced by my own intent) of the

>> point system is that it is not directly proportional to the number of features

>> (supported by methods) added or by the difficulty associated with adding

>> them, but instead based on meeting functional needs. In this example,

>> transcription, captioning and audio description (recorded) may all be

>> implemented but still only have sufficient points to earn silver. While

>> addressing the content itself to be more understandable by people with

>> cognitive issues or intersectional needs would be required for sufficient

>> points to earn gold. The difference being people and not methods.

>>

>>

>>

>> Am I alone in this view?

>>

>>

>>

>>

>>

>> *Charles Hall* // Senior UX Architect

>>

>>

>>

>> charles.hall@mrm-mccann.com

>>

>> w 248.203.8723

>>

>> m 248.225.8179

>>

>> 360 W Maple Ave, Birmingham MI 48009

>>

>> mrm-mccann.com <https://www.mrm-mccann.com/>

>>

>>

>> *From: *Alastair Campbell <acampbell@nomensa.com>

>> *Date: *Friday, June 21, 2019 at 12:01 PM

>> *To: *Silver Task Force <public-silver@w3.org>

>> *Subject: *[EXTERNAL] Conformance and method 'levels'

>> *Resent-From: *Silver Task Force <public-silver@w3.org>

>> *Resent-Date: *Friday, June 21, 2019 at 12:01 PM

>>

>>

>>

>> Hi everyone,

>>

>>

>>

>> I think this is a useful thread to be aware of when thinking about

>> conformance and how different methods might be set at different levels:

>>

>> https://github.com/w3c/wcag/issues/782

>>

>>

>>

>> It is about multimedia access, so the 1.2.x section in WCAG 2.x. You

>> might think that it is fairly straightforward as the solutions are fairly

>> cut & dried (captions, transcripts, AD etc.)

>>

>>

>>

>> However, the tricky bit is at what level you require different solutions.

>>

>>

>>

>> If you had a guideline such as “A user does not need to see in order to

>> understand visual multimedia content”, then Patrick’s levelling in one

>> of the comments

>> <https://github.com/w3c/wcag/issues/782#issuecomment-504038948>

>> makes sense:

>>

>> - Bronze: EITHER provide AD or transcript

>> - Silver: provide AD and transcript

>> - Gold: Provide live transcript or live AD.

>>

>>

>>

>> I raise this as if you read the thread, you’ll see how the levels

>> impacted the drafting of the guidelines, and I think we’ll have a similar

>> (or more complex?) dynamic for the scoring in Silver, and how methods are

>> drafted.

>>

>>

>>

>> Kind regards,

>>

>>

>>

>> -Alastair

>>

>>

>>

>> --

>>

>>

>>

>> www.nomensa.com

>> <http://www.nomensa.com/>

>> / @alastc

>>

>>

>

>

> --

> *John Foliot* | Principal Accessibility Strategist | W3C AC Representative

> Deque Systems - Accessibility for Good

> deque.com

>

>

>

--

*John Foliot* | Principal Accessibility Strategist | W3C AC Representative

Deque Systems - Accessibility for Good

deque.com

Attachments

- image/jpeg attachment: image001.jpg

- image/png attachment: image.png

Received on Monday, 24 June 2019 15:23:29 UTC