- From: Simon Thompson - NM <Simon.Thompson2@bbc.co.uk>

- Date: Thu, 21 Sep 2023 10:14:39 +0000

- To: Pekka Paalanen <pekka.paalanen@collabora.com>

- CC: Sebastian Wick <sebastian.wick@redhat.com>, "public-colorweb@w3.org" <public-colorweb@w3.org>

- Message-ID: <AS8PR01MB8258D60ABC25196D8A365EA2FDF8A@AS8PR01MB8258.eurprd01.prod.exchangelabs>

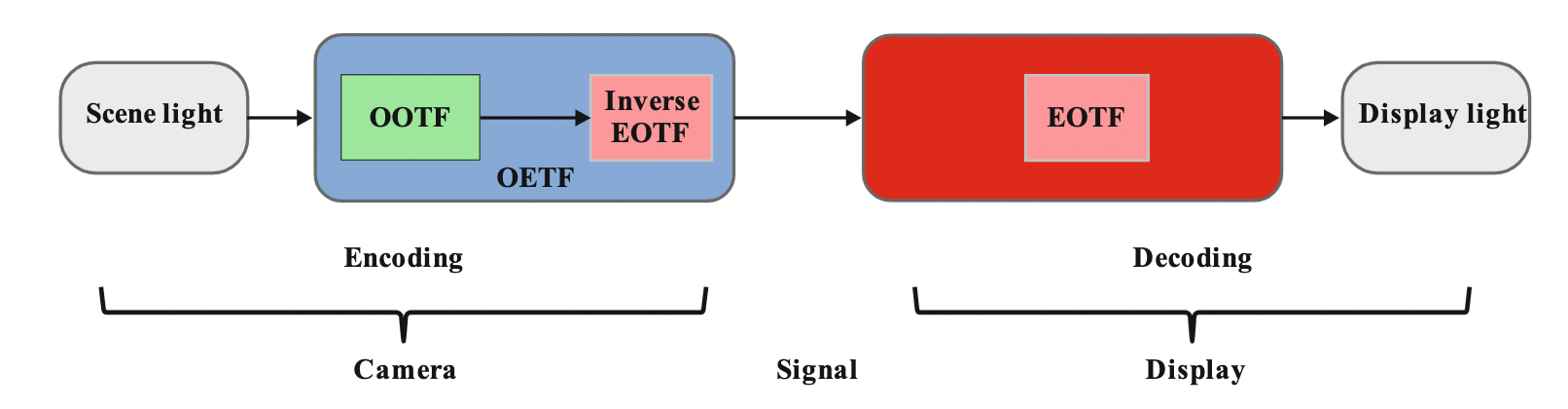

Apologies for the delay, I've been at the International Broadcasting Convention and SMPTE meetings. I'll try and deal with a number of emails in one.

To understand the design of the two HDR systems, it’s worth looking at the block diagrams from ITU-R BT.2100.

The PQ system includes the OOTF within the encoding module. The OOTF maps the scene light to a display light signal that represents the pixel RGB luminances on the display used (the EOTF and inverse EOTF are there to prevent visual artefacts such as banding in a quantised signal). This means that the target display OOTF is burnt into the signal, and metadata (e.g. HDR10, HDR10+, SL-HDR1, DV) is used to help adjust the signal to match the target display and ambient lighting conditions.

[Image: A diagram of a signal]

In the HLG system, this OOTF is present in the decoding module, which is usually present in the display. The OOTF is a function of peak screen brightness and ambient illumination (both of which are either known by the display or can be set by the end user). The signal represents the light falling on the sensor, not the light from a display. The adaptation for the screen all happens within the decoding block, with no reference to another display.

[Image: A diagram of a signal]

This is similar to ITU-R BT.709 (which only defines the OETF for an SDR scene-referred signal). We do not limit (clip) the signal; leaving it unclipped avoids filter ringing and encoding problems. Please see: https://tech.ebu.ch/docs/r/r103.pdf

When converting from HLG to BT.709 to create a second stream/channel, most conversions on the market will leave alone the majority of colours (up to ~95% luminance) that are within the target colourspace, and then scale the highlights and out-of-gamut colours in a hue-linear colourspace so they are within the target video range (quite often EBU R103 -5% to 105%).
The mappings available are all designed to convert a compliant HLG signal to a compliant BT.709 signal (i.e. they are designed to deal with any input). For more detail, see: https://www.color.org/hdr/03-Simon_Thompson.pdf

For reference monitors, the preferred mode is to display the entire luminance range of the signal. Chrominance is shown only up to the device gamut, and signals that are outside are hard clipped to the display gamut. However, the signal is not limited to this gamut in any way and overshoots are expected, especially with uncontrolled lighting. See: https://tech.ebu.ch/docs/tech/tech3320.pdf

For televisions, whilst the luminance performance is defined, the mapping from BT.2100 primaries to display gamut is unspecified – television manufacturers usually want to differentiate on “look” between brands and price points, so implement their own processing, have their own colour modes etc.

This is the workflow that has been used in many large productions.

> For HLG, because a gamma-adaption is applied to the entire signal range, shadows and midtones are shifted (dependent on the display brightness which alters variable gamma – see BT.2100), a fixed look is applied with the gamma adaption which we think alters the appearance and affects the way shaders act on the content.

Rather than just shifted, they are adjusted non-linearly to maintain a similar perceptual experience for different brightness displays and ambient environments. This is all described in ITU-R BT.2408, including the experiments undertaken to calculate the functions used. The pros and cons of camera shading methods are probably not within the purview of the W3C and are probably confusing for non-broadcast users.
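As a rough illustration of the display-dependent adjustment described above: BT.2100 specifies an HLG system gamma of 1.2 at a nominal peak luminance of 1000 cd/m², with an extension γ = 1.2 + 0.42·log10(L_W/1000) for other peak luminances (roughly 400 to 2000 cd/m²). The sketch below is a minimal Python version under simplifying assumptions: the function names are made up for illustration, display black level and the ambient-illumination adjustment are ignored, and the input is assumed to be normalised scene-linear BT.2020 RGB.

```python
import math

def hlg_system_gamma(peak_nits: float) -> float:
    """System gamma from the BT.2100 extension formula (1.2 at 1000 cd/m2).

    Assumption: peak_nits is within the roughly 400-2000 cd/m2 range for
    which this formula is intended.
    """
    return 1.2 + 0.42 * math.log10(peak_nits / 1000.0)

def hlg_ootf(rgb_scene, peak_nits: float):
    """Map normalised scene-linear RGB (0..1) to display light in cd/m2.

    Simplification: black level is taken as zero, so the scale factor is
    just the peak luminance.
    """
    r, g, b = rgb_scene
    # Scene luminance from BT.2020 luminance coefficients.
    y_s = 0.2627 * r + 0.6780 * g + 0.0593 * b
    if y_s <= 0.0:
        return (0.0, 0.0, 0.0)
    gamma = hlg_system_gamma(peak_nits)
    # The same luminance-derived factor is applied to all three channels.
    scale = peak_nits * y_s ** (gamma - 1.0)
    return (scale * r, scale * g, scale * b)

if __name__ == "__main__":
    grey = (0.18, 0.18, 0.18)
    for peak in (500.0, 1000.0, 2000.0):
        print(peak, hlg_system_gamma(peak), hlg_ootf(grey, peak))
```

Because the gamma varies with peak luminance, the same scene-referred value lands at a different fraction of peak on each display, which is the non-linear adjustment (rather than a simple shift) referred to above.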
As discussed in last week's breakout meeting, there may be a need to provide highlight roll-off to match operating system requirements, where the required dynamic range changes because the device operating system adjusts the screen brightness dynamically (to reduce battery usage, react to ambient lighting, etc.).

--
Simon Thompson MEng CEng MIET
Senior R&D Engineer
BBC Research and Development

On 15/09/2023, 16:48, "Pekka Paalanen" <pekka.paalanen@collabora.com> wrote:

On Wed, 13 Sep 2023 10:36:53 +0000
Simon Thompson - NM <Simon.Thompson2@bbc.co.uk> wrote:

> Hi Sebastien
>
> > The Static HDR Metadata's mastering display primaries are used to improve the quality of the correction in the panels by limiting the distance of the mappings they have to perform. I don't see why this would not equally benefit HLG.
> >
> > Regarding composition: the metadata has to be either recomputed or discarded. Depending on the target format, the metadata on the elements can be useful to limit the distance of the mappings required to get the element to the target format.
>
> I’m not sure that the minimum distance mapping is necessarily the best, it would depend on the colour space in which you’re performing the process. In dark colours, the minimum distance may lift the noise floor too. Chris Lilley has an interesting page looking at the effect in a few different colour spaces, I’ll see if I can find it. As I said in the previous email, a significant proportion of HDR is currently being created whilst looking at the SDR downmapping on an SDR monitor – the HDR monitor may be used for checking, but not for mastering the image. When an HDR monitor is used, there are still a number of issues:
>
> * The HLG signal is scene-referred and I would expect that there will be colours outside the primaries of this display as it’s not usual to clip signals in broadcast workflows. (Clipping leads to ringing in scaling filters and issues with compression – this is why the signal has headroom and footroom for overshoots). The signal does not describe the colours of pixels on a screen.
> * In a large, live production, the signal may traverse a number of production centres and encode/decode hops before distribution. Each of these will need to have a method for conveying this metadata for it to arrive at the distribution point. As the transform from Scene-referred HLG signal to a display is standardised, I don’t think additional metadata is needed. If included, the software rendering to the screen must not expect it to be present.

Hi Simon, everyone,

I feel I should clarify that my original question was about everything orthogonal to dynamic range or luminance: the color gamut, or the chromaticity space. I understand that the size of chromaticity plane in a color volume depends on the point along the luminance axis, but let's try to forget the luminance axis for a moment.

Let's pick a single luminance point, perhaps somewhere in the nice mid-tones. We could imagine comparing e.g. BT.709 SDR and BT.2020 SDR. If your input signal is encoded as BT.2020 SDR, and the display conforms to BT.709 SDR, how do you do the gamut mapping in the display to retain the intended appearance of the image?

Let's say the input imagery contains pixels that fill the P3 D65 color gamut. If you assume that the full BT.2020 gamut is used and statically scale it all down to BT.709, the result looks de-saturated, right? Or if you clip chromaticity, color gradients become flat at their saturated ends, losing detail. But if you have no metadata about the input signal, how could you assume anything else?
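The clipping case in the question above can be sketched numerically. The example below is only an illustration under stated assumptions: it works on linear-light values, uses the BT.2020 to BT.709 matrix as published in ITU-R BT.2087, and the helper names `to_709` and `clip` are hypothetical. It shows two different saturated BT.2020 greens collapsing to the same BT.709 value after a hard clip, while an in-gamut grey passes through unchanged.

```python
# Linear-light BT.2020 -> BT.709 conversion matrix (values from ITU-R BT.2087).
M_2020_TO_709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)

def to_709(rgb2020):
    """Convert linear BT.2020 RGB to linear BT.709 RGB (may leave 0..1)."""
    return tuple(sum(m * c for m, c in zip(row, rgb2020)) for row in M_2020_TO_709)

def clip(rgb):
    """Hard clip each component to the nominal 0..1 range."""
    return tuple(min(1.0, max(0.0, c)) for c in rgb)

if __name__ == "__main__":
    for green in ((0.0, 0.9, 0.0), (0.0, 1.0, 0.0)):
        converted = to_709(green)
        # Negative or >1 components mean the colour is outside BT.709.
        print(green, "->", converted, "-> clipped:", clip(converted))
    grey = (0.5, 0.5, 0.5)
    print(grey, "->", to_709(grey))
```

A statically scaled mapping would avoid the collapse but would also move colours such as the grey-adjacent, already in-gamut ones, which is the de-saturation trade-off described in the question.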
If there was metadata to tell us what portion of the BT.2020 color gamut is actually useful, we could gamut map only that portion, and end up making use of the full display capabilities without reserving space for colors that will not appear in the input, while mapping instead of clipping all colors that do appear. The image would not be de-saturated any more than necessary, and details would remain.

If we move this to the actual context of my question, HLG signal encodes BT.2020 chromaticity, and a HLG display emits something smaller than full BT.2020 color gamut. Would it not be useful to know which part of the input signal color gamut is useful to map to the display? The mastering display color gamut would at least be the outer bounds, because the author cannot have seen colors outside of it.

Is color so unimportant that no-one bothers to adjust it during mastering? Or is chromaticity clipping the processing that producers expect, so that logos etc. are always the correct color (intentionally inside, say, BT.709?), meaning you prefer absolute colorimetric (in ICC terms) rendering for chromaticity, and perceptual rendering only for luminance? If so, why does ST 2086 bother carrying primaries and white point?

Thanks,
pq

Attachments

- image/png attachment: image001.png

- image/png attachment: image002.png

Received on Thursday, 21 September 2023 10:31:03 UTC