- From: Peter Thatcher <pthatcher@google.com>

- Date: Tue, 22 May 2018 14:21:25 -0700

- To: Harald Alvestrand <harald@alvestrand.no>

- Cc: public-webrtc@w3.org

- Message-ID: <CAJrXDUEH7ZuV_p+7k6zSfUGTPNxQ82CVjG2g4BYGR-gG7xAoLQ@mail.gmail.com>

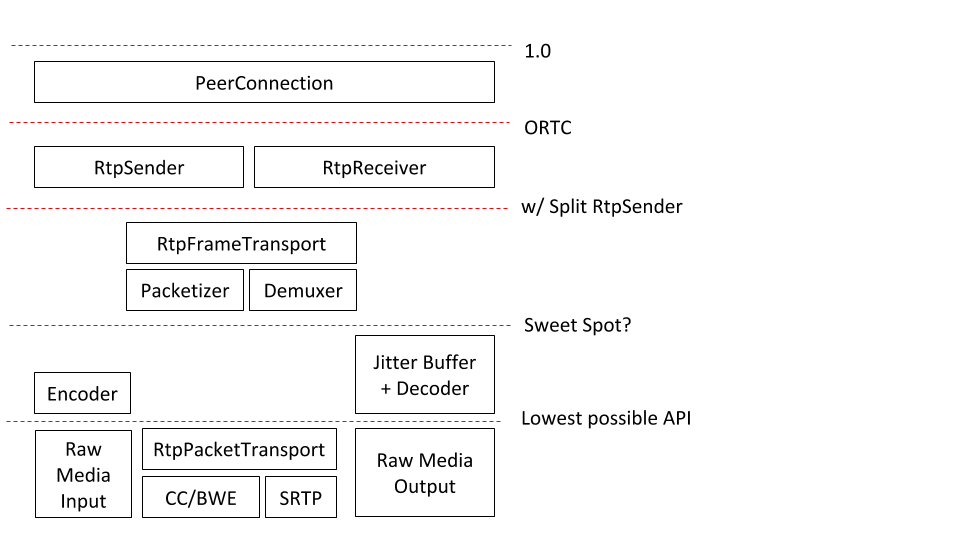

I made a diagram of my thinking about the different possible API layers. Here it is: [image: RTP object stack.png]

This highlights the question: what happens to RtpParameters? Some are per-transport, some are per-stream, some are per-encoder (which is different from per-stream), and some don't make sense any more.

Per-transport:
- Payload type <-> codec mapping
- RTCP reduced size
- Header extension ID <-> URI mapping

Per-stream:
- PTs used for PT-based demux
- priority
- mid
- cname
- ssrcs

Per-encoder:
- dtx
- ptime
- codec parameters
- bitrate
- maxFramerate
- scaleResolutionDownBy

Don't make sense:
- active (just stop the encoder)
- degradation preference (just change the encoder directly)

Providing the per-transport and per-encoder ones is straightforward. But what about the per-stream parameters? Where do those go? Perhaps we'll need some kind of RTP object that works with encoded media instead of with unencoded/decoded tracks. Perhaps an EncodedRtpSender and EncodedRtpReceiver? And if we have those, do we even need a separate RtpFrameTransport, or could we just stick an EncodedRtpSender and EncodedRtpReceiver directly on top of the RtpPacketTransport?

On Tue, May 22, 2018 at 1:00 AM Harald Alvestrand <harald@alvestrand.no> wrote:

> On 05/21/2018 11:14 PM, Peter Thatcher wrote:
>
> On Sun, May 20, 2018 at 9:14 PM Harald Alvestrand <harald@alvestrand.no> wrote:
>
>> On 05/19/2018 12:11 AM, Sergio Garcia Murillo wrote:
>>
>> We should separate transports from encoders (split the RtpSender in half) to give more flexibility to apps.
>>
>> I agree with that, but given RTP packetization is codec specific, we can't ignore that.
>>
>> I think we can translate this into requirements language:
>>
>> IF we specify the transport separately from the encoder
>>
>> THEN we need a number of pieces of API, either as JS API or as specifications of "what happens when you couple a transport with an encoder":
>>
>> - If the transport is like RTP, and isn't codec agnostic, it needs information from the encoder about what the codec is, and may need to inform the encoder about some transport characteristics (like max MTU size for H.264 packetization mode 0).
>>
>> - If the transport is congestion controlled (and they all are!), then there needs to be coupling of the transport's congestion control with the encoder's target bitrate.
>>
>> - If the transport isn't reliable, and may toss frames, it needs to be coupled back to the encoder to tell it to do things like stop referring to a lost frame, or to go back and emit a new I-frame.
>>
>> Handling the data is just one part of handling the interaction between transport and encoder.
>
> You are correct that a transport needs to have certain signals like a BWE. It's also useful if it can give info about when a message/frame has been either acked or thrown away.
>
> You are also correct that a high-level RTP transport would need to be told how to packetize. But a low-level RTP transport could leave that up to the app.
>
> I think we're closing in on saying that the conceptual model of an RTP transport is layered: there's a layer that sends frames (which knows about codec-specific packetization and would tell the encoder that a frame has been lost), and there's a layer that sends packets (and doesn't need to know how to break frames into packets).
>
> The RTPFrameTransport would offer an interface similar to what QUICTransport is proposed to do, while RTPPacketTransport would offer an interface that lets the client set fields like PT. I think SRTP encryption is agnostic to packet content, so it could be part of the RTPPacketTransport.
>
> Merging in another thread on the same subject: unlike our current model (and, I think, ORTC), I would think of an RTPTransport as an object that handles multiple media streams. An RTPSender would be the object that handles one media stream, since that's what we're using the name for today. RTPSender still seems to have a mission.
>
>> --
>> Surveillance is pervasive. Go Dark.

Attachments

- image/png attachment: RTP_object_stack.png

Received on Tuesday, 22 May 2018 21:22:05 UTC