- From: Iñaki Baz Castillo <ibc@aliax.net>

- Date: Wed, 4 Jul 2018 01:41:43 +0200

- To: Bernard Aboba <Bernard.Aboba@microsoft.com>

- Cc: WebRTC WG <public-webrtc@w3.org>, Robin Raymond <robin@opticaltone.com>

- Message-ID: <CALiegfkoQCC32H6EQPGMWu_T4U5vqac6Y_3pZxtWt+Yuoeyxsg@mail.gmail.com>

Hi Bernard,

This approach is much better than the previous one (since it decouples both

rid and encodingId). I'm just not sure whether it's too error prone (since

each SVC layer is indicated as a new entry within

RTCRtpParameters.encodings).

Can you please check my last response in the previous thread?

On Wed, 4 Jul 2018 at 01:35, Bernard Aboba <Bernard.Aboba@microsoft.com>

wrote:

> Here is a revised proposal for SVC support in WebRTC 1.0 (with some

> implied changes to ORTC):

>

> *Assumptions*

>

>

>

> 1. No need for negotiation. If an RTCRtpReceiver can always decode any

> legal SVC bitstream sent to it without pre-configuration, then there is no

> need for negotiation to enable sending and receiving of an SVC bitstream.

> This assumption, if valid, enables addition of SVC support by addition of

> attributes to encoding parameters.

> 2. Codec support. Today several browsers have implemented VP8

> (temporal) and VP9 (temporal, with spatial as experimental) codecs with SVC

> support. It is also expected that support for AV1 (temporal and spatial)

> will be added by multiple browsers in future. AFAIK, each of these codecs

> fits within the no-negotiation model (e.g. in AV1, support for SVC tools

> is required at all profile levels). As a side note, I believe

> that H.264/SVC could also be made to fit within the no-negotiation model if

> some additional restrictions are imposed upon implementations (such as

> requiring support for UC mode 1, described here:

> http://www.mef.net/resources/technical-specifications/download?id=106&fileid=file1 ).

> So the no-negotiation model seems like it would allow support for current

> and potential future SVC-capable codecs.

> 3. Single SSRC/rid for all SVC layers. VP8, VP9, AV1 and most

> implementations of H.264/SVC send all SVC layers with the same SSRC. If we

> can assume that all SVC layers of a simulcast stream will utilize the same

> SSRC and rid, this provides some simplification because it means that the

> rid attribute is used *only* to differentiate simulcast streams. See below

> for the effect of this within examples.

>

>

> *General approach*

>

>

> Today, the encoding and decoding parameters appear as follows:

>

>

>

> dictionary *RTCRtpCodingParameters* <https://w3c.github.io/webrtc-pc/#dom-rtcrtpcodingparameters> {

> DOMString <https://heycam.github.io/webidl/#idl-DOMString> rid <https://w3c.github.io/webrtc-pc/#dom-rtcrtpcodingparameters-rid>;

> };

>

>

>

>

> dictionary *RTCRtpDecodingParameters* <https://w3c.github.io/webrtc-pc/#dom-rtcrtpdecodingparameters> : *RTCRtpCodingParameters* <https://w3c.github.io/webrtc-pc/#dom-rtcrtpcodingparameters> {

> };

>

>

>

>

> dictionary *RTCRtpEncodingParameters* <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters> : *RTCRtpCodingParameters* <https://w3c.github.io/webrtc-pc/#dom-rtcrtpcodingparameters> {

> octet <https://heycam.github.io/webidl/#idl-octet> codecPayloadType <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-codecpayloadtype>;

> RTCDtxStatus <https://w3c.github.io/webrtc-pc/#dom-rtcdtxstatus> dtx <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-dtx>;

> boolean <https://heycam.github.io/webidl/#idl-boolean> active <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-active> = true;

> RTCPriorityType <https://w3c.github.io/webrtc-pc/#dom-rtcprioritytype> priority <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-priority> = "low";

> unsigned long <https://heycam.github.io/webidl/#idl-unsigned-long> ptime <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-ptime>;

> unsigned long <https://heycam.github.io/webidl/#idl-unsigned-long> maxBitrate <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-maxbitrate>;

> double <https://heycam.github.io/webidl/#idl-double> maxFramerate <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-maxframerate>;

> double <https://heycam.github.io/webidl/#idl-double> scaleResolutionDownBy <https://w3c.github.io/webrtc-pc/#dom-rtcrtpencodingparameters-scaleresolutiondownby>;

> };

>

> To provide SVC support, we propose to add the following additional

> encoding parameters:

>

> partial dictionary RTCRtpEncodingParameters <http://draft.ortc.org/#dom-rtcrtpcodingparameters> {

> double <https://heycam.github.io/webidl/#idl-double> scaleFramerateDownBy;

> DOMString <https://heycam.github.io/webidl/#idl-DOMString> encodingId <http://draft.ortc.org/#dom-rtcrtpcodingparameters-encodingid>;

> sequence <https://heycam.github.io/webidl/#idl-sequence><DOMString <https://heycam.github.io/webidl/#idl-DOMString>> dependencyEncodingIds <http://draft.ortc.org/#dom-rtcrtpcodingparameters-dependencyencodingids>;

> };

>

>

> encodingId of type DOMString

>

> An identifier for the encoding object. This identifier should be unique

> within the scope of the localized sequence of RTCRtpCodingParameters

> <http://draft.ortc.org/#dom-rtcrtpcodingparameters> for any given

> RTCRtpParameters <http://draft.ortc.org/#dom-rtcrtpparameters> object.

> Values *MUST* be composed only of alphanumeric characters (a-z, A-Z, 0-9)

> up to a maximum of 16 characters.

> *scaleFramerateDownBy of type double*

> * Inverse of the input framerate fraction to be encoded. Example: 1.0 =

> full framerate, 2.0 = one half of the full framerate. For scalable video

> coding, scaleFramerateDownBy refers to the inverse of the aggregate

> fraction of input framerate achieved by this layer when combined with all

> dependent layers. *

> dependencyEncodingIds of type sequence<DOMString>

>

> The encodingId

> <http://draft.ortc.org/#dom-rtcrtpcodingparameters-encodingid>s on which

> this layer depends. Within this specification encodingId

> <http://draft.ortc.org/#dom-rtcrtpcodingparameters-encodingid>s are

> permitted only within the same RTCRtpCodingParameters

> <http://draft.ortc.org/#dom-rtcrtpcodingparameters> sequence. In order to

> send scalable video coding (SVC <http://draft.ortc.org/#dfn-svc>), both

> the encodingId and dependencyEncodingIds are required.
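
The constraints described above for encodingId and dependencyEncodingIds can be checked mechanically. A minimal sketch, assuming a hypothetical helper named validateEncodings (it is not part of WebRTC or ORTC) and assuming an encodingId must contain at least one character:

```javascript
// Hypothetical helper, not part of any WebRTC or ORTC API.
// Checks the rules stated in the proposal text:
//  - encodingId is alphanumeric, at most 16 characters (minimum of 1 is assumed)
//  - encodingId is unique within the encodings sequence
//  - every dependencyEncodingIds entry names an encodingId in the same sequence
function validateEncodings(encodings) {
  const problems = [];
  const seen = new Set();
  const idPattern = /^[a-zA-Z0-9]{1,16}$/;

  for (const enc of encodings) {
    if (enc.encodingId === undefined) continue;
    if (!idPattern.test(enc.encodingId)) {
      problems.push(`invalid encodingId: ${enc.encodingId}`);
    }
    if (seen.has(enc.encodingId)) {
      problems.push(`duplicate encodingId: ${enc.encodingId}`);
    }
    seen.add(enc.encodingId);
  }

  for (const enc of encodings) {
    for (const dep of enc.dependencyEncodingIds || []) {
      if (!seen.has(dep)) {
        problems.push(`${enc.encodingId} depends on unknown encodingId: ${dep}`);
      }
    }
  }
  return problems;
}

// A dependency on an id that no entry declares is reported.
const problems = validateEncodings([
  { encodingId: "T0S0" },
  { encodingId: "T1S0", dependencyEncodingIds: ["0"] },
]);
console.log(problems); // one entry: "T1S0 depends on unknown encodingId: 0"
```

A check like this would catch the kind of mistake that is easy to make when every SVC layer is a separate entry in the encodings sequence.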

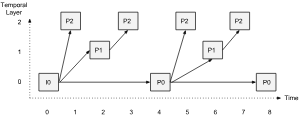

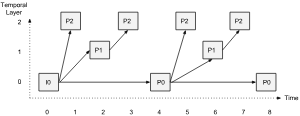

> Here is an example of how this would work for a single temporal

> encoded stream (without simulcast):

>

> *// Example of 3-layer temporal scalability encoding with only a single stream*

> *var* encodings = [{

> *// Base framerate is one quarter of the input framerate*

> encodingId: "layer0",

> scaleFramerateDownBy: 4.0}, {

> *// Temporal enhancement (half the input framerate when combined with the base layer)*

> encodingId: "layer1",

> dependencyEncodingIds: ["layer0"],

> scaleFramerateDownBy: 2.0}, {

> *// Another temporal enhancement layer (full input framerate when all layers combined)*

> encodingId: "layer2",

> dependencyEncodingIds: ["layer0", "layer1"],

> scaleFramerateDownBy: 1.0}];
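
To make the arithmetic behind scaleFramerateDownBy concrete, here is a rough sketch that computes the aggregate framerate of each layer and the framerate that layer adds on top of its dependencies. The helper name describeTemporalLayers and the 30 fps input are assumptions for illustration, as is treating a missing scaleFramerateDownBy as 1.0:

```javascript
const encodings = [
  { encodingId: "layer0", scaleFramerateDownBy: 4.0 },
  { encodingId: "layer1", dependencyEncodingIds: ["layer0"], scaleFramerateDownBy: 2.0 },
  { encodingId: "layer2", dependencyEncodingIds: ["layer0", "layer1"], scaleFramerateDownBy: 1.0 },
];

// Hypothetical helper: scaleFramerateDownBy is the inverse of the aggregate
// fraction of the input framerate, so aggregate = input / scaleFramerateDownBy.
function describeTemporalLayers(encodings, inputFramerate) {
  const aggregate = new Map();
  for (const enc of encodings) {
    // Assumption: an absent scaleFramerateDownBy means full framerate.
    const scale = enc.scaleFramerateDownBy === undefined ? 1.0 : enc.scaleFramerateDownBy;
    aggregate.set(enc.encodingId, inputFramerate / scale);
  }
  return encodings.map((enc) => {
    const deps = enc.dependencyEncodingIds || [];
    // Highest framerate already provided by the layers this one depends on.
    const below = Math.max(0, ...deps.map((id) => aggregate.get(id) || 0));
    const total = aggregate.get(enc.encodingId);
    return { encodingId: enc.encodingId, aggregate: total, added: total - below };
  });
}

const layers = describeTemporalLayers(encodings, 30);
console.log(layers);
// layer0: aggregate 7.5, added 7.5
// layer1: aggregate 15,  added 7.5
// layer2: aggregate 30,  added 15
```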

>

>

> The bitstream dependencies look like this:

>

>

>

>

>

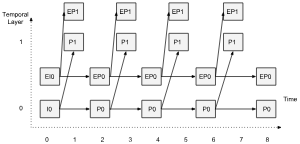

> Combining spatial simulcast and temporal scalability would look like this:

>

> // Example of 2-layer spatial simulcast combined with 2-layer temporal scalability

> var encodings = [{

> // Low resolution base layer (half the input framerate, half the input resolution)

> rid: "lowres",

>

> encodingId: "L0",

> resolutionScale: 2.0,

> framerateScale: 2.0

> }, {

> // High resolution Base layer (half the input framerate, full input resolution)

> rid: "highres",

>

> encodingId: "H0",

> resolutionScale: 1.0,

> framerateScale: 2.0

> }, {

> // Temporal enhancement to the low resolution base layer (full input framerate, half resolution)

> rid: "lowres",

>

> encodingId: "L1",

> dependencyEncodingIds: ["L0"],

> resolutionScale: 2.0,

> framerateScale: 1.0

> }, {

> // Temporal enhancement to the high resolution base layer (full input framerate and resolution)

> rid: "highres",

>

> encodingId: "H1",

> dependencyEncodingIds: ["H0"],

> resolutionScale: 1.0,

> framerateScale: 1.0

> }];
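
Since rid is meant to differentiate only simulcast streams, the entries above can be grouped by rid to recover the streams, and one can check that no layer depends on a layer from another stream. This is a sketch under that reading of assumption 3; groupByRid and crossStreamDependencies are hypothetical names:

```javascript
const encodings = [
  { rid: "lowres", encodingId: "L0", resolutionScale: 2.0, framerateScale: 2.0 },
  { rid: "highres", encodingId: "H0", resolutionScale: 1.0, framerateScale: 2.0 },
  { rid: "lowres", encodingId: "L1", dependencyEncodingIds: ["L0"], resolutionScale: 2.0, framerateScale: 1.0 },
  { rid: "highres", encodingId: "H1", dependencyEncodingIds: ["H0"], resolutionScale: 1.0, framerateScale: 1.0 },
];

// Hypothetical helper: one Map entry per simulcast stream, listing its layers.
function groupByRid(encodings) {
  const streams = new Map();
  for (const enc of encodings) {
    const key = enc.rid === undefined ? "" : enc.rid; // "" when no rid is used
    if (!streams.has(key)) streams.set(key, []);
    streams.get(key).push(enc.encodingId);
  }
  return streams;
}

// Hypothetical helper: lists [layer, dependency] pairs that cross rid boundaries.
function crossStreamDependencies(encodings) {
  const ridOf = new Map(encodings.map((enc) => [enc.encodingId, enc.rid]));
  const violations = [];
  for (const enc of encodings) {
    for (const dep of enc.dependencyEncodingIds || []) {
      if (ridOf.has(dep) && ridOf.get(dep) !== enc.rid) {
        violations.push([enc.encodingId, dep]);
      }
    }
  }
  return violations;
}

const streams = groupByRid(encodings);
console.log(streams.get("lowres"));  // [ 'L0', 'L1' ]
console.log(streams.get("highres")); // [ 'H0', 'H1' ]
console.log(crossStreamDependencies(encodings)); // []
```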

>

>

> The layer diagram corresponding to this example is here:

>

>

>

>

>

> At the F2F, we also talked about allowing developers uninterested in the

> details to easily generate appropriate configurations.

>

> This could be done via helper functions, such as generateEncodings():

>

>

> // function generateEncodings (spatialSimulcastLayers, temporalLayers)

> *var* encodings = generateEncodings(1, 3); // generates the encoding shown in the first example

>

> *var* encodings = generateEncodings(2, 2); // generates the encoding shown in the second example

>

> *var* encodings = generateEncodings(); // generates a default encoding such as 3 spatial simulcast, 3 temporal layers
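
One possible shape for such a helper is sketched below. It is only an illustration: the rid and encodingId naming ("r0", "r0t1"), the factor-of-two scaling between layers, the per-stream ordering of entries, and the use of scaleResolutionDownBy and scaleFramerateDownBy as property names are all assumptions, so the output matches the examples above in structure and scale values but not in identifiers.

```javascript
// Hypothetical sketch of generateEncodings(); not an existing API.
// Defaults of 3 and 3 follow the "3 spatial simulcast, 3 temporal layers" default mentioned above.
function generateEncodings(spatialSimulcastLayers = 3, temporalLayers = 3) {
  const encodings = [];
  for (let s = 0; s < spatialSimulcastLayers; s++) {
    const rid = `r${s}`; // assumed naming; r0 is the lowest resolution
    const scaleResolutionDownBy = 2 ** (spatialSimulcastLayers - 1 - s);
    const lowerIds = []; // temporal layers already emitted for this stream
    for (let t = 0; t < temporalLayers; t++) {
      const encoding = {
        encodingId: `${rid}t${t}`,
        scaleResolutionDownBy,
        scaleFramerateDownBy: 2 ** (temporalLayers - 1 - t),
      };
      // rid is only needed to tell simulcast streams apart.
      if (spatialSimulcastLayers > 1) encoding.rid = rid;
      // Each enhancement layer depends on all lower temporal layers of its stream.
      if (lowerIds.length > 0) encoding.dependencyEncodingIds = [...lowerIds];
      encodings.push(encoding);
      lowerIds.push(encoding.encodingId);
    }
  }
  return encodings;
}

const single = generateEncodings(1, 3);
console.log(single.map((e) => e.scaleFramerateDownBy)); // [ 4, 2, 1 ]
console.log(generateEncodings().length); // 9
```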

>

> *Limitations*

>

>

> What does this proposal *not* enable? RTCRtpFecParameters and

> RTCRtpRtxParameters were removed from WebRTC 1.0 a while ago, so this

> proposal does *not *enable support for differential protection (e.g.

> retransmission or forward error correction only for the base layer).

>

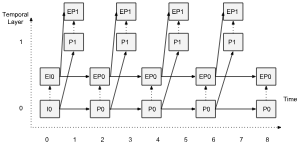

> However, the proposal can support spatial scalability, if there is

> interest in enabling this at some point. How this works is illustrated in

> the following example (which does not utilize rids):

>

> // Example of 2-layer spatial scalability combined with 2-layer temporal scalability

> var encodings = [{

> // Base layer (half input framerate, half resolution)

> encodingId: "T0S0",

> resolutionScale: 2.0,

> framerateScale: 2.0

> }, {

> // Temporal enhancement to the base layer (full input framerate, half resolution)

> encodingId: "T1S0",

> dependencyEncodingIds: ["T0S0"],

> resolutionScale: 2.0,

> framerateScale: 1.0

> }, {

> // Spatial enhancement to the base layer (half input framerate, full resolution)

> encodingId: "T0S1",

> dependencyEncodingIds: ["T0S0"],

> resolutionScale: 1.0,

> framerateScale: 2.0

> }, {

> // Temporal enhancement to the spatial enhancement layer (full input framerate, full resolution)

> encodingId: "T1S1",

> dependencyEncodingIds: ["T0S1"],

> resolutionScale: 1.0,

> framerateScale: 1.0

> }];
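
With spatial and temporal layers mixed, a receiver or forwarding unit needs the full chain of layers behind a target layer, not just its direct dependencies. A minimal sketch of that lookup, assuming a hypothetical helper layersNeededFor and assuming the T1S0 layer is meant to depend on T0S0:

```javascript
const encodings = [
  { encodingId: "T0S0", resolutionScale: 2.0, framerateScale: 2.0 },
  { encodingId: "T1S0", dependencyEncodingIds: ["T0S0"], resolutionScale: 2.0, framerateScale: 1.0 },
  { encodingId: "T0S1", dependencyEncodingIds: ["T0S0"], resolutionScale: 1.0, framerateScale: 2.0 },
  { encodingId: "T1S1", dependencyEncodingIds: ["T0S1"], resolutionScale: 1.0, framerateScale: 1.0 },
];

// Hypothetical helper: follows dependencyEncodingIds transitively and returns
// the target layer plus every layer it directly or indirectly depends on.
function layersNeededFor(encodings, targetId) {
  const byId = new Map(encodings.map((enc) => [enc.encodingId, enc]));
  const needed = new Set();
  const pending = [targetId];
  while (pending.length > 0) {
    const id = pending.pop();
    if (needed.has(id) || !byId.has(id)) continue; // skip repeats and unknown ids
    needed.add(id);
    pending.push(...(byId.get(id).dependencyEncodingIds || []));
  }
  return [...needed];
}

const chain = layersNeededFor(encodings, "T1S1");
console.log(chain); // [ 'T1S1', 'T0S1', 'T0S0' ]
```

As listed in the example, T1S1 names only T0S1 as a dependency, so T1S0 does not appear in the result.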

>

>

> The diagram for this example looks like this:

>

>

>

--

Iñaki Baz Castillo

<ibc@aliax.net>

Attachments

- image/png attachment: pastedImage.png

- image/png attachment: 02-pastedImage.png

- image/png attachment: 03-pastedImage.png

- image/png attachment: 04-pastedImage.png

Received on Tuesday, 3 July 2018 23:42:21 UTC