- From: Maciej Stachowiak <mjs@apple.com>

- Date: Thu, 13 Feb 2020 18:33:23 -0800

- To: public-privacy@w3.org

- Message-id: <CB6A76B4-41AA-4DAA-B8F8-6F77CBE36755@apple.com>

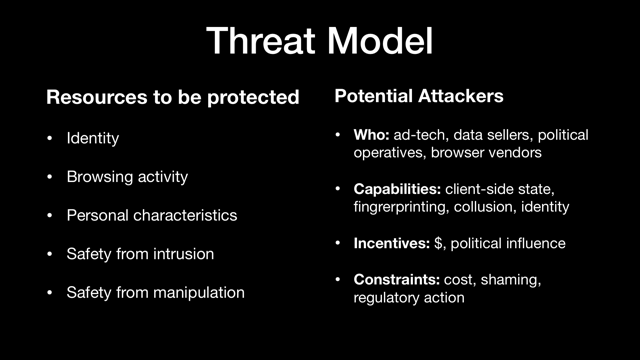

Hello all,

A while back, at a summit on browser privacy, I presented slides that, among other things, explained how the WebKit and Safari teams at Apple think about tracking threats on the web. In many ways, this is the threat model implicit in WebKit’s Tracking Prevention Policy <https://webkit.org/tracking-prevention-policy/>. It is very brief, because it was converted from a slide in a presentation and I have not had much time to expand it. I’d like it to be considered as possible input for the Privacy Threat Model that PING is working on <https://w3cping.github.io/privacy-threat-model/>.

Though these notes are very brief, they point to a more expansive way of thinking about tracking threats. The current Privacy Threat Model draft seems focused primarily on linking user IDs across different websites. That is also the viewpoint expressed in Chrome’s Privacy Sandbox effort, which likewise focuses primarily on linking identity.

Users may consider certain information to be private even if it does not constitute full linkage of identity. For example, if a site can learn about personal characteristics, such as ethnicity, sexual orientation, or political views, and the user did not choose to give that information to that website, then that is a privacy violation even if no linkage of identity between two websites occurs.

I’d be happy to discuss this more in whatever venue is congenial. For now I just wanted to send this out, since I was asked to do so quite some time ago. Below is the text of the slide (and its speaker notes), followed by an image of the slide itself.

------------

== Threat Model ==

= Resources to be protected =

* Identity
* Browsing activity
* Personal characteristics
* Safety from intrusion
* Safety from manipulation

= Potential Attackers =

* Who: ad-tech, data sellers, political operatives, browser vendors
* Capabilities: client-side state, fingerprinting, collusion, identity
* Incentives: $, political influence
* Constraints: cost, shaming, regulatory action

Speaker Notes

* Intrusion: highly targeted ad based on personal characteristics, recently viewed product, even if no real tracking
* Manipulation: Cambridge Analytica
* Who: we include ourselves; browsers shouldn’t track their users either

Attachments

- image/png attachment: PastedGraphic-1.png

Received on Friday, 14 February 2020 02:33:43 UTC