- From: Christina Noel <CNoel@musicnotes.com>

- Date: Fri, 22 Apr 2016 14:35:44 +0000

- To: "public-music-notation-contrib@w3.org" <public-music-notation-contrib@w3.org>

- CC: Joe Berkovitz <joe@noteflight.com>, James Ingram <j.ingram@netcologne.de>

- Message-ID: <6e56265ba84d491dbcb1c002f9439c75@MNMail01.musicnotes.com>

James,

Ooh... You’re right. I misunderstood. I was thinking a MIDI file, not MIDI tone and velocity information embedded into the hierarchy (whether it’s XML or SVG or something else is irrelevant for that!)

Duration units are up for grabs as far as I’m concerned. It’s important that the INSTANCE be able to keep an even tempo and beat for CWMN pieces, but I can see the advantage and flexibility of encoding durations in ms instead, and letting the tempo/beat visual markers be score:objects that are unimportant to those who only care about audio playback. It then becomes the responsibility of the AUTHORING_APP either to make the durations match for matching symbols (all quarter notes at 1000ms = 60 bpm) or not to do so, as your app does.

The only reason to make the tempo an explicit temporal marker anywhere would be so that the PARSING_APP can implement a metronome at the right tempo…
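The arithmetic behind "all quarter notes at 1000ms = 60 bpm" is 60,000 ms per minute divided by beats per minute. A minimal sketch of how an AUTHORING_APP might derive millisecond durations from a tempo, and how a PARSING_APP might schedule metronome clicks, assuming (hypothetically) that the beat is a quarter note and that note values are given as fractions of a whole note. The function names are illustrative only.

```python
from fractions import Fraction

MS_PER_MINUTE = 60_000

def ms_per_beat(bpm: float) -> float:
    """Length of one beat in milliseconds at the given tempo."""
    return MS_PER_MINUTE / bpm

def note_ms(note_value: Fraction, bpm: float, beat_value: Fraction = Fraction(1, 4)) -> float:
    """Millisecond duration of a note value (a fraction of a whole note),
    assuming the tempo counts beats of `beat_value` (default: quarter note)."""
    return float(note_value / beat_value) * ms_per_beat(bpm)

def metronome_clicks(bpm: float, n: int) -> list[float]:
    """Onset times (ms) of the first n metronome clicks, starting at 0."""
    return [i * ms_per_beat(bpm) for i in range(n)]

print(ms_per_beat(60))                # 1000.0
print(note_ms(Fraction(1, 8), 60))    # 500.0
print(metronome_clicks(60, 4))        # [0.0, 1000.0, 2000.0, 3000.0]
```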

To be clear, are we talking about switching to SVG as the next radical rev of MusicXML (which wouldn’t be XML anymore), or just talking about organizational methods that can be applied to XML as well as SVG?

--Christina

---

Christina Noel

Lead Engineer of Music Technology, Musicnotes

Fax: 608.662.1688 | CNoel@musicnotes.com


www.musicnotes.com | Download Sheet Music

Musicnotes, Inc | 901 Deming Way, Ste 100 | Madison, WI 53717

Facebook: http://www.facebook.com/musicnotesdotcom | Twitter: http://twitter.com/musicnotes

From: James Ingram <j.ingram@netcologne.de>

Sent: Friday, April 22, 2016 7:10 AM

To: public-music-notation-contrib@w3.org

Cc: Christina Noel <CNoel@musicnotes.com>; Joe Berkovitz <joe@noteflight.com>

Subject: Re: Notations in Scope

Hi Christina, Joe, all,

Thanks, Christina, for loving the concept and provoking this reaction! :-))

You are nearly there, but have misunderstood a couple of things. You said:

> In the first place, whatever encoding we produce must be able to contain both the graphical and temporal data in one document. I’ve seen several parts of this discussion talk about using SVG+MIDI, which is fine for the output of a PARSING_APP or the input of the AUTHORING_APP, but should not be used for the INSTANCE. Whatever is used for INSTANCE, it needs to be self-sufficient in a single file because otherwise you end up with read/write problems: What if you lose one of the files, or are only given one? What if you have both files, but one is from a previous version of the music?

Well, the INSTANCE actually is a single file. :-)

The INSTANCEs produced by my Moritz (C# desktop) app and read by my Assistant Performer (Javascript web app) contain both SVG and MIDI information. This format is SVG augmented by an extra namespace for the MIDI info. As I said before, the CONTRACT I'm using is defined in

http://www.james-ingram-act-two.de/open-source/svgScoreExtensions.html

Here's a skeleton example of an INSTANCE that uses the xmlns:score namespace, showing an "outputChord" that contains both a score:midiChord and graphics.

```xml
...
<svg ... xmlns:score="http://www.james-ingram-act-two.de/open-source/svgScoreExtensions.html" ...>
  ...
  <g class="system">
    ...
    <g class="outputStaff" ...>
      ...
      <g class="outputVoice" ...>
        ...
        <g class="outputChord" ...>
          <score:midiChord>
            <basicChords>
              <basicChord
                msDuration="777"
                patch="0"
                pitches="32 64"
                velocities="127 127"
              />
              ...
            </basicChords>
            <sliders
              pitchWheel="0 100 0"
              expressionSlider="38 127 10 38"
              ...
            />
          </score:midiChord>
          <g class="graphics">
            ...
          </g>
        </g>
        ...
      </g>
      ...
    </g>
    ...
  </g>
  ...
</svg>
```

Notes:

1. Don't be confused by the term "outputChord". My CONTRACT also contains "inputChord", but that's not relevant here.

2. There is a list of basicChords in the score:midiChord because the outputChord (symbol) can be decorated with an ornament sign, and so can contain a sequence of basicChords.

3. This is an experimental prototype, and the MIDI encodings defined in my CONTRACT are currently limited to a useful subset of the MIDI standard. In principle, the CONTRACT could be extended to include other MIDI messages.

4. My current CONTRACT only expects one score:midiChord to be included in an outputChord. This could easily be changed so that more than one "interpretation" of the graphics could be stored in the same file.

5. I think it should be possible to create a standard CONTRACT for these things. Best would be if that were done co-operatively, drawing on the experience of everyone in the industry. There must be people out there who know better how to express MIDI in XML than I do. It would also be good if the CONTRACT could be put into machine-readable form. I don't (yet) know how to define a schema for an extension to SVG, but am pretty sure that it is possible.
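To make the single-file point concrete, here is a minimal sketch of how a PARSING_APP might pull the MIDI information out of such an INSTANCE using Python's standard `xml.etree.ElementTree`. The document string is a hypothetical, filled-in version of the skeleton (the real CONTRACT defines more than is shown), and `read_basic_chords` is an illustrative helper, not part of the CONTRACT. The skeleton does not say which namespace the unprefixed `basicChords`/`basicChord` children belong to, so the sketch matches them by local name inside each `score:midiChord`.

```python
import xml.etree.ElementTree as ET

SCORE_NS = "http://www.james-ingram-act-two.de/open-source/svgScoreExtensions.html"

# Hypothetical minimal INSTANCE modelled on the skeleton above.
INSTANCE = f"""<svg xmlns="http://www.w3.org/2000/svg" xmlns:score="{SCORE_NS}">
  <g class="system">
    <g class="outputStaff">
      <g class="outputVoice">
        <g class="outputChord">
          <score:midiChord>
            <basicChords>
              <basicChord msDuration="777" patch="0" pitches="32 64" velocities="127 127"/>
            </basicChords>
            <sliders pitchWheel="0 100 0" expressionSlider="38 127 10 38"/>
          </score:midiChord>
          <g class="graphics"/>
        </g>
      </g>
    </g>
  </g>
</svg>"""

def local(tag):
    """Strip the '{namespace}' prefix that ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]

def read_basic_chords(svg_text):
    """Collect the basicChord attributes from every score:midiChord."""
    root = ET.fromstring(svg_text)
    chords = []
    for midi_chord in root.iter(f"{{{SCORE_NS}}}midiChord"):
        for el in midi_chord.iter():
            if local(el.tag) == "basicChord":
                chords.append({
                    "msDuration": int(el.get("msDuration")),
                    "patch": int(el.get("patch")),
                    "pitches": [int(p) for p in el.get("pitches").split()],
                    "velocities": [int(v) for v in el.get("velocities").split()],
                })
    return chords

print(read_basic_chords(INSTANCE))
```

Because graphics and MIDI data live in the same file, the parser never has to match one file against another.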

And then there's this:

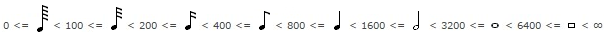

But I don't use tuplets. Duration classes are defined as bandwidths, for example like this (durations in milliseconds):

[Image: duration class key, giving the bandwidth of each duration class in milliseconds (attachment image001.png)]

Christina said:

> I’m not sure what that means. Is that just a convenient wrapper for beats to note notation, or does it really mean milliseconds, and ignores the possible tempo specification? What happens when you need triplets, which don’t fit those definitions? (For triplets to represent properly, you need your whole note count to be a multiple of 3).
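Christina's point about triplets follows from tick-based encodings such as MusicXML, where durations are whole numbers of `<divisions>` units. A minimal sketch, assuming note values are written as fractions of a whole note: the smallest tick count per whole note that represents every value exactly is the least common multiple of their denominators, so any triplet value forces a multiple of 3. `ticks_per_whole` is an illustrative name only.

```python
from fractions import Fraction
from math import lcm

def ticks_per_whole(values):
    """Smallest integer number of ticks per whole note that represents every
    value (a fraction of a whole note) as a whole number of ticks."""
    return lcm(*(Fraction(v).denominator for v in values))

plain = [Fraction(1, 4), Fraction(1, 8)]            # crotchet, quaver
triplet_quaver = Fraction(1, 8) * Fraction(2, 3)    # three in the time of two: 1/12
print(ticks_per_whole(plain))                       # 8
print(ticks_per_whole(plain + [triplet_quaver]))    # 24
```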

I compose with millisecond durations and let the software select the appropriate duration class symbol.

Humanly perceptible tempo and tuplets are redundant concepts here. There is no humanly perceptible tempo unless I deliberately choose the appropriate millisecond durations. And without a tempo, there can be no tuplets (time is indivisible; tuplets are tempo relations; a triplet means "go three times as fast").

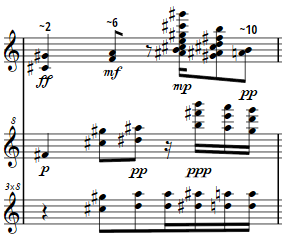

Take, for example, bar 8 of my Study 2:

[Image: bar 8 of Study 2 (attachment image002.png)]

The durations of the duration symbols in the top staff are composed as:

1000, 545, 500, 375, 429, 400 (milliseconds)

So their duration classes are (using the key above)

crotchet, quaver, quaver, semi-quaver, quaver, quaver

and the total duration of the bar is 3249ms
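The step where the software selects a duration class can be sketched as a lookup in a table of bandwidths. The real key is in the attached image and is not reproduced here, so the lower bounds below are hypothetical, chosen only so that the bar 8 durations fall into the classes listed above. The sketch also confirms the 3249ms bar total.

```python
from bisect import bisect_right

# Hypothetical bandwidths: (lower bound in ms, class name), ascending.
# A duration belongs to the last class whose lower bound it reaches.
BANDS = [
    (0, "demisemiquaver"),
    (200, "semiquaver"),
    (400, "quaver"),
    (800, "crotchet"),
    (1600, "minim"),
    (3200, "semibreve"),
]
_LOWER = [lower for lower, _ in BANDS]

def duration_class(ms):
    """Return the duration class whose bandwidth contains `ms`."""
    if ms <= 0:
        raise ValueError("duration must be positive")
    return BANDS[bisect_right(_LOWER, ms) - 1][1]

top_staff = [1000, 545, 500, 375, 429, 400]
print([duration_class(d) for d in top_staff])
# ['crotchet', 'quaver', 'quaver', 'semiquaver', 'quaver', 'quaver']
print(sum(top_staff))  # 3249
```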

Bars have to add up at the millisecond level (otherwise the following bar won't begin synchronously), so the durations of the duration symbols in the lower two staves were composed to add up to 3249ms.

In this case, both the lower staves have the same durations:

857, 532, 442, 375, 337, 364, 342 (milliseconds)

This is a composed accelerando.
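The rule that bars must add up at the millisecond level is easy to check mechanically. A minimal sketch using the bar 8 durations quoted above; `check_bar` and the staff labels are hypothetical names for illustration.

```python
def check_bar(staves):
    """Return the common bar duration (ms) if every staff's durations sum to
    the same total; raise ValueError otherwise."""
    totals = {name: sum(durations) for name, durations in staves.items()}
    if len(set(totals.values())) != 1:
        raise ValueError(f"bar does not add up: {totals}")
    return next(iter(totals.values()))

bar8 = {
    "top": [1000, 545, 500, 375, 429, 400],
    "middle": [857, 532, 442, 375, 337, 364, 342],
    "bottom": [857, 532, 442, 375, 337, 364, 342],
}
print(check_bar(bar8))  # 3249
```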

I could have made the 7 durations as equal as possible:

464, 464, 464, 464, 464, 464, 465
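Making the 7 durations "as equal as possible" amounts to integer division with the remainder spread over some of the notes. A minimal sketch; giving the leftover milliseconds to the last notes is an assumption that happens to reproduce the list above.

```python
def equal_split(total_ms, n):
    """Split total_ms into n integer durations that differ by at most 1 ms,
    giving the leftover milliseconds to the last notes."""
    base, extra = divmod(total_ms, n)
    return [base] * (n - extra) + [base + 1] * extra

print(equal_split(3249, 7))  # [464, 464, 464, 464, 464, 464, 465]
```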

But even then, it would be meaningless to add a tuplet bracket annotation above the notes because there is no tempo (no repeated time segments of 3249ms). It wouldn't help the PARSING_APP either.

Such music is, of course, not intended to be played by humans. But for music that is intended for human performance, being able to provide arbitrary default temporal renderings at a first rehearsal shortens rehearsal times and can allow traditions of performance practice to develop.

The revival of performance practice traditions for Early Music notations (during the past 40 years or so) would never have happened without the use of recordings. Maybe we can do something similar for New Music.

Hope that helps,

All the best,

James

--

http://james-ingram-act-two.de

https://github.com/notator

Attachments

- image/png attachment: image001.png

- image/png attachment: image002.png

Received on Friday, 22 April 2016 14:36:19 UTC