- From: Mark Hampton <mark.hampton@ieee.org>

- Date: Wed, 25 Sep 2024 12:12:36 +0200

- To: Michael Robbins <michael@learningpathmakers.org>

- Cc: Human-centric AI <public-humancentricai@w3.org>

- Message-ID: <CAMYbm87Vz9uM07qThj5TZrCxRynY2W7Fb2Mg-Y7jQ3MZjy1KNA@mail.gmail.com>

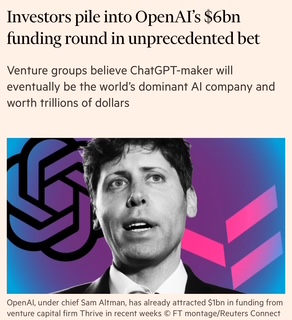

Hi Michael,

I understand that people may not have participated for a variety of reasons, and I believe that encouraging engagement in this community is crucial. However, I feel that statements like “where the large majority of silent group members have abdicated their participation” may unintentionally discourage further involvement. I hope we can leave such framing behind and focus on fostering more inclusive dialogue moving forward.

Regarding “human-centric AI,” I agree there’s likely a broad spectrum of interpretations. For instance, if you were to ask someone like Sam Altman whether OpenAI is building human-centric AI, he’d likely say yes. However, most of us here might disagree with his perspective. No one is explicitly aiming to build “anti-human” AI, but there are indeed varied ideas about what “good AI” looks like. This group, by nature, will attract individuals who generally see technology as a force for good, but it’s important to recognize that different cultural and philosophical foundations will shape our views.

I strongly support the idea of defining what we mean by “human-centric” before delving into the AI part of this challenge. Human-centricity spans many fields (philosophy, social psychology, anthropology), and many of these aren’t typically studied by technologists. There is a risk, albeit not a certainty, that those unfamiliar with these fields may make decisions based on unconscious biases, particularly those rooted in Western individualism and materialism, which are increasingly seen as problematic. For example, hyper-individualism and materialism, as dominant Western values, are contributing to societal and ecological crises. Any AI that perpetuates these frameworks risks being equally harmful. If we base our understanding of “intelligence” on the modern Western tendency toward essentialism, we will follow an incoherent path.

Ultimately, defining “human-centric” AI should be an inclusive process that draws on the wisdom and insights of diverse fields and cultures. I would appreciate seeing input from experts in moral philosophy, social psychology, and other non-technical disciplines, as well as from a diverse group of participants across different regions and traditions. If we can't get those people participating, then, perhaps unfortunately, the task of understanding and representing those perspectives falls on us.

Regards,
Mark

On Wed, Sep 25, 2024 at 12:18 AM Michael Robbins <michael@learningpathmakers.org> wrote:

> We have failed to protect the web. Big AI is eating it and filling it with slop. Now we must take a radically different approach:
>
> "Investors seeking to buy into OpenAI’s latest $6bn-plus funding round are making an unprecedented bet that the ChatGPT-maker will become the world’s dominant artificial intelligence company and be worth trillions of dollars.
>
> The San Francisco-based start-up is finalising a new fundraising valuing the company at $150bn. Thrive Capital, Josh Kushner’s venture capital firm, has already provided at least $1bn to the company in recent weeks, according to people with knowledge of the deal.
>
> OpenAI aims to raise an additional $5bn or more. Apple, Nvidia and Microsoft (the three most valuable technology companies in the world) are in talks to join the funding round. Others seeking to invest are New York-based Tiger Global and United Arab Emirates-backed fund MGX, according to multiple people with knowledge of the discussions. The deal is expected to close imminently.
>
> However, other leading tech investors, including Andreessen Horowitz and Sequoia Capital (Silicon Valley’s top venture capitalists and existing OpenAI backers), are sitting out of the round, according to people with knowledge of the matter.
>
> Investors in the deal said it was highly unusual in its scale and structure.
> Venture investors such as Thrive and Tiger typically write far smaller cheques for less established start-ups, hoping for 10 to 100 times their money back.
>
> To achieve such a return with OpenAI, the company would need to grow in the coming years to become worth at least $1.5tn; larger than Facebook parent Meta and Warren Buffett’s Berkshire Hathaway.
>
> Many are persuaded it will. “We’re talking about the path to building a trillion-dollar company,” said a partner at an investment firm that has backed OpenAI. “I don’t think this is unreasonable.”
>
> (Via the Financial Times)
>
> Make no mistake. This is what human-centric AI is up against. It’s Game of Thrones in the Age of Technofeudalism.
>
> *Quis custodiet ipsos custodes?*
>
> Winter is coming. We can’t combat this with a litany of tech standards and solutions. It must begin with people and their “why” for data dignity: to build AI agents that help them in school, work, and life.
>
> This is the challenge that I’ll be setting out for this community group. *There must be a pathway and purpose for exodus from Technofeudalism and the web as we know it.* I’ll be sharing what I’ve discovered for this with the group, and recruiting others to join us, to take up where the large majority of silent group members have abdicated their participation.
>
> [image: image0.jpeg]
>
> On Sep 24, 2024, at 2:02 PM, Timothy Holborn <timothy.holborn@gmail.com> wrote:
>
> Hi Michael & others on the list...
>
> Whilst I personally despair at the direction wide-scale AI developments have taken, and the various related implications, I think it's important for various reasons to ensure language does not target people[1], but rather problems. Further, if one 'looks under the hood', I suspect the findings would show MS to be more influential[2][3]. This week, there have been some fairly significant UN meetings, based on what now exists.
>
> Not what could have, should have, or might have been made, but was neither available to try, nor were the marketing materials available for decision makers to consider...
>
> Within this complex scope of so-called 'competition', there are also competing applied definitions of the notion of 'human centric', and as a W3C CG is a community space, I sought input to better understand what the nomenclature now meant to people[4], notwithstanding my own strong views[5]...
>
> FWIW: there's a call for submissions for https://www2025.thewebconf.org/ which can be found on that site.
>
> A quick update otherwise, noting it's not specifically 'W3C work'; rather, I'm just letting people know. Perhaps something will fall out of it that ends up needing W3C processes, or would become better if that occurred...
>
> I think my work is fairly slow-going atm... nonetheless, the work on defining different types of 'agents' or artificial minds is a continuing topic. This sheet has some old notes[6], but I haven't really updated it. I'm still working through the process of defining what I call 'webizen', which I don't think should be anthropomorphised... Therein, there are considerations about defining them AS ROBOTS, or FOAF:AGENT:SOFTWARE (not legal person), and thinking about how to create 'cartridges', for lack of a better term for now, where they may be loaded / unloaded, called for specific purposes, and in turn how to define them... Part of these considerations are about the psychological aspects, whilst the other part is about the geek processes.
>
> I've not historically been focused on LLMs, as my view was that the foundations needed to be done first, then linked to LLMs; but, as noted, I've got the hardware to do the RD&D; nonetheless, it's only been a few months...
> I've updated the 'tools' sheet with some LLM-related resources[7], and there are some other notes, particularly about GPU hardware, in this sheets doc[8]; both need updating. I've also been thinking about how to define a schema and, ideally, also enable decentralised discovery / updates...
>
> I've got 'models' running locally, and have then got them working on my other devices over Tailscale[9], which has led to investigating how to replace the 'default assistant app' on my phone, finding https://github.com/AndraxDev/speak-gpt/ (but I haven't got HTTPS working yet). I'm currently investigating LocalAI[10][11], which is written in Go, and I'm hopeful it might make it feasible for me to integrate with the older WebizenAI[12] work that used the old RWW[13] libraries, whilst also exploring spatio-temporal support, as noted earlier[14]. Earlier work considered the use of WebID-TLS / WebID-RSA for devices & things, alongside the need for WebID-OIDC for AUTH, but that context was about my attempts (now considered to have failed) to define a 'knowledge banking' ecosystem(s). If alternatives are running locally, the context changes... So, as I'm working through the issues with enabling TLS, I'm also considerate of the Nextcloud Solid[15] work, and am generally seeking to ensure support for SANs... but it's all very experimental atm...
>
> As for fairly simple tests / 'thought experiments', I've also been toying with the idea of how to define a PRIVATE, permissions-supporting chat app that uses local LLMs and has the purpose of translating the language people communicate in into whatever language is preferred by the recipient.
> Then also, I'm considering what functionality might be added to it to support a profile intended for children (with guardianship support); in part, considering proposed laws that claim to be about protecting children on social media by banning the use of social media by young people. But social media, or the social web, was part of the old definition of 'web 3.0'[16], whereby 'social' refers to socialisation, a foundational and essential element of the natural function of many species of life (flora, fauna & funga), and 'media' is the plural of medium. So the words they use (whilst seeking 'age credentials' / identity wallet integration with social media silos[17], which I envisage will lead to payment requirements being sought to be resolved) have far broader implications. I find the situation broadly depressing, as the 'status quo', due to the priorities illustrated by others generally, means there isn't a good way to illustrate to these decision makers the envisaged alternatives (which they may act to make unlawful to produce) that could otherwise support engagement between one another, rather than dependence upon a global social agora that continues to engender harms upon all people, certainly not equally, but moreover dynamically, per idiocracy-related 'norms'... IMHO...
>
> The embodiment of these sorts of apps is still envisaged to be progressed as a web extension, but there are also still plumbing-related processes being investigated.
>
> *NOTE: In my modelling, I'm assuming users have their own domain (or sub-domain), as was part of the 'ADP' https://datatracker.ietf.org/meeting/119/materials/agenda-119-hotrfc-sessa-04#h.eno136kpyxqs work...
> Therein, for example, users could create an email alias that specifically relates to the relationship (i.e., the other person in their 'address book'), which was hoped to help combat spam, but may also now have other implications given the direction of emergent laws, alongside interfaces for different kinds of AI agents.*
>
> The other issue, which I've done SOME work on, but not enough, is figuring out how best to structure content for ingestion into LLMs. They don't appear to support RDF 'natively', being designed instead with a focus on JSON, which I think has implications due to the lack of namespace support; and I haven't yet figured out the means to better integrate triple/quad stores with vector DB sources. At least, not well. I experimented with uploading my pathology results archive into an LLM, understanding that, as the system is running on my machine, it's private, but got poor outcomes. I also tried processing proposed legislation, but similarly didn't get very good results; indeed, it seems they may be 'preset' to prefer US legal structures, which have a lot of differences from other jurisdictions and related contexts.
>
> But I need to figure out how to process the input docs into whatever the 'best' format is, and am then seeking to progress previous work on 'hyper media containers', which would package the files, provide an index in whatever the best format ends up being defined as, and also incorporate the links to original sources, etc... which may in turn lead to improved solutions that might make software available over IPFS, WebTorrent, or similar...
>
> Part of the original purpose of importance to me was the means to more easily present complex legal issues to authorised parties... part of this process was also about the collection of digital evidence, therefore 'verifiable claims'...
> I think the function of a personally operated 'default assistant app', and related software, might change the way phones work, so that the way web results change, seemingly in relation to private conversations, is more personally controllable, definable, etc.; doesn't need to share 'the data' with foreign companies / jurisdictions; and makes use of the active employment of those sensors for purposes that better support human rights, which have otherwise been set aside... or considered a lesser priority, etc. Yet, as is, I think, consequential to the still-nascent state of development in these areas, there is still a lot of work to do, and I believe (given current resources) it'll take some time to advance into something that can be easily deployed on a 'Mac Studio' or similar...
>
> Another finding, FWIW: there are a lot of these LLM applications, and they usually all want to provide their own 'LLM store'. Given that some of these 'models' or 'AI agents' are tens of GB (the size in GB generally corresponds to how many GB of VRAM are needed to run them), duplicates on a system are undesirable. It would be better if there were a 'common store', and some standard way for apps to try to find it; whilst there's still competition over the host software that is then expected to load it, and having many of these agents is also undesirable...
>
> AND: I was asked about computer vision, CCTV, and video surveillance policy recommendations.
> I made a few suggestions based upon the notions of ensuring people own their own biometric signatures, decentralised discovery, and support for 'commons informatics' in a manner that protects 'freedom of thought', so as to ensure that analysis and/or identification of objects (macroscopic or microscopic) does not end up being 'owned' as a proprietary interest via a particular jurisdiction (perhaps foreign, from the source), and various other related considerations, noting that the Mico-Project[19] and SPARQL-MM[20] (though usefully employed with SPARQL-Fed) presented a potential path some time ago... I don't think SPARQL-MM documentation is on W3C atm? I'm not sure how it could or should be updated either...
>
> But, per the Anti-Pattern[3]-related realities, there is, at least seemingly, a functional process whereby works seeking to define alternatives to 'god AI'[18] projects can be disrupted as a 'threat' by a now very powerful movement, somewhat denoted by the use of JSON specifically, which, re: "The Software shall be used for Good, not Evil."[21], subjectively associates to various complex implications that seem very difficult for many 'geeks' to understand, and thereby reasonably impossible for non-computer-science experts to be expected to understand... OH: if / when the social elements develop, the means for people to form & manage agreements between one another, as distinct from platform-provided 'mandates', also brings about a need to look at how to support rules in association with those agreements...
>
> SO, whilst I tried to get UN parties to help craft the UN instruments to be useful for that purpose, after years of best efforts, I'll likely end up progressing that work myself...
> as much as I had hoped there'd be interest in building it to be supported via https://metadata.un.org/
>
> Certainly, it's difficult to both comply with expectations and simultaneously, whilst controlling utterances, remain hopeful for the future and communicate honourably derivatives of work intended to promote human rights and protect the means for persons to resolve disputes (or consequences of harms) peacefully... but I also think that these issues are indeed common, and that they most disaffect those who truly care about those sorts of values, as distinct from the actions, activities, and derivatives of gamification... Therein, 'reality check tech' would and should end up illustrating facts about how all natural agents are indeed imperfect, whereas alternatives may be delivered using modelling that engenders different types of outcomes. Either way, there are causal effects, but if 'drama protocols' gain support in areas previously associated with fields of STEM, then the dynamics of the environment change, as do the outcomes, IMHO... But the consequence may be that some are defined to be the problem, whilst others are defined as perfect; and whether and/or how this acts systemically may well, by design, become unknowable... I did historically try to define 'Knowledge Age', but it got deleted (https://en.wikipedia.org/w/index.php?title=Draft:Knowledge_age&action=edit&redlink=1). So perhaps an 'authorised thinker' might want to try again, as this 'information age'[22] has many problems... some like it, others not so much... but I'm not sure if a 'knowledge age'[23] is allowed, particularly as historical attempts have resulted in failure. As such, I'm led to remember the days when I started, as a young person in the 80s / early 90s, a kid getting parts from people selling their old gear second-hand, finding software, learning, including as one of the very few geeks in the computer room at school...
> Those old days of BBS sites and dial-up modems had a different sort of ethos about them... I think the cost of building LLM-supporting machines is about the same: computers back then cost about 2k (AUD), so putting something together nowadays (dual Xeon, 256 GB+ RAM, 72 GB VRAM, etc.) using second-hand parts is about the same... not for everyone, but I think it's something that should be allowed...
>
> In the end, I think the outcomes have the capacity to be safer, better, and more useful for people... human centric[5], supporting the means for people to own their own 'thoughtware'... thereby seeking to ensure an alternative to digital slavery, where others own the software that defines your mind... made accessible to you via API as the consumable consumer... said to be benefiting, because it's 'free'... I disagree, but, because I disagree, I'm poor. I'd prefer alternatives to UBI, where people are paid fairly for good & useful work, but that's still something that MAY be made achievable in future... sadly. Perhaps, as some suggest, it'll take decades... which seems to me an awful suggestion... Whilst most define whether a person is 'successful' based upon the characteristics of their wallet, their 'identity wallet', which therefore often makes it dangerous to talk about if poor... the PCI goals defined on October 24-25, 2015, at Stanford University[24] included: "7. Personal information in the digital environment is protected by law and controlled by the individual owner." So, whilst it's not been realised since that time, others, who may not live to see it happen, have considered such ideals important... so I think there's still a lot of merit in seeking to realise the availability of these sorts of options; but overall, I guess, that's where it's / I'm at...
>
> There's certainly a lot that can be pulled apart / deconflated, and sought to be progressed...
> I continue to believe 'humanitarian ICT' is of instrumental importance for our humanity... So, I hope this helps.
>
> 🙏🕊️
>
> Tim.H
>
> *NOTE: This has just been written, with no agents and not a lot of 'careful drafting'; there may be errors, whether due to autocorrect or similar, or just because I made a mistake or oversight, or other unintended error or fault.*
>
> [1] https://www.w3.org/policies/code-of-conduct/
> [2] https://lists.w3.org/Archives/Public/public-payments-wg/2016Mar/0177.html
> [3] https://web.archive.org/web/20180901130552/http://manu.sporny.org/2016/browser-api-incubation-antipattern/
> [4] https://lists.w3.org/Archives/Public/public-humancentricai/2023Apr/0004.html
> [5] https://www.w3.org/Search/Mail/Public/search?keywords=%22Human+Centric%22&indexes=Public&resultsperpage=100&sortby=date-asc
> [6] https://docs.google.com/spreadsheets/d/1rqYC2E2BDIHBADAT7-9CabawkmYBJpBBf1KJO24D7ig/edit?usp=sharing
> [7] https://docs.google.com/spreadsheets/d/19IEgvdvwl_EOGhmIFinVQu4OerRojeje8PaZWGvoO4Q/edit?gid=0#gid=0
> [8] https://docs.google.com/spreadsheets/d/1jDLieMm-KroKY6nKv40amukfFGAGaQU8tFfZBM7iF_U/edit
> [9] https://tailscale.com/
> [10] https://localai.io/
> [11] https://github.com/mudler/LocalAI
> [12] https://github.com/WebizenAI
> [13] https://www.w3.org/community/rww/
> [14] https://lists.w3.org/Archives/Public/public-humancentricai/2023Jun/0005.html
> [15] https://github.com/pdsinterop/solid-nextcloud
> [16] https://web.archive.org/web/20130122051820/http://jeffsayre.com/2010/09/15/web-3-0-smartups-the-social-web-and-the-web-of-data/
> [17] https://www.w3.org/DesignIssues/CloudStorage.html
> [18] https://www.youtube.com/watch?v=ReB5UHfKCG8&t=666s
> [19] https://www.mico-project.eu/
> [20] https://www.mico-project.eu/portfolio/sparql-mm/
> [21] https://www.json.org/license.html
> [22] https://en.wikipedia.org/wiki/Information_Age
> [23] old notes: https://medium.com/webcivics/a-future-knowledge-age-2e3f5095c67
> [24] https://peoplecentered.net/wp-content/uploads/2017/10/Principles-of-the-People-Centered-Internet.pdf
>
> On Wed, 25 Sept 2024 at 01:36, Michael Robbins <michael@learningpathmakers.org> wrote:
>
>> Sam Altman is touting his vision for centralized AI. Our group must be the vanguard for a radically different approach.
>>
>> [image: cover.png]
>>
>> The Intelligence Age <https://ia.samaltman.com/>
>>
>> The human-centric pathway for AI doesn’t use unbelievable amounts of energy and water in the process. But this doesn’t leave control in the hands of businesses and billionaires, so Altman isn’t promoting it. It hinges upon the decentralization of computing to local devices and building a radically different architecture for AI on a foundation of data dignity.
>>
>> Instead of supercomputing and server farms, the sane and sustainable paradigm is building ecosystems of AI agents that represent individuals and organizations. This is also the revolutionary pathway we need to remake learning, digital networks, and democracy.
>>
>> The only way to rein in AI out of control is by building representative governance inside the AI ecosystem. We can’t leave it to businesses, and technology has outstripped government. Our AI-powered assistants, built with data that we privately secure away from mega corporations, will become the ante for citizenship and democracy in the digital realm.
>>
>> Michael

Attachments

- image/jpeg attachment: image0.jpeg

- image/png attachment: cover.png

Received on Wednesday, 25 September 2024 10:12:54 UTC