- From: Tobias Looker <tobias.looker@mattr.global>

- Date: Wed, 23 Nov 2022 08:18:13 +0000

- To: Manu Sporny <msporny@digitalbazaar.com>, W3C Credentials CG <public-credentials@w3.org>

- Message-ID: <SY4P282MB1274B9FBAA61F058E01ED7B29D0C9@SY4P282MB1274.AUSP282.PROD.OUTLOOK.COM>

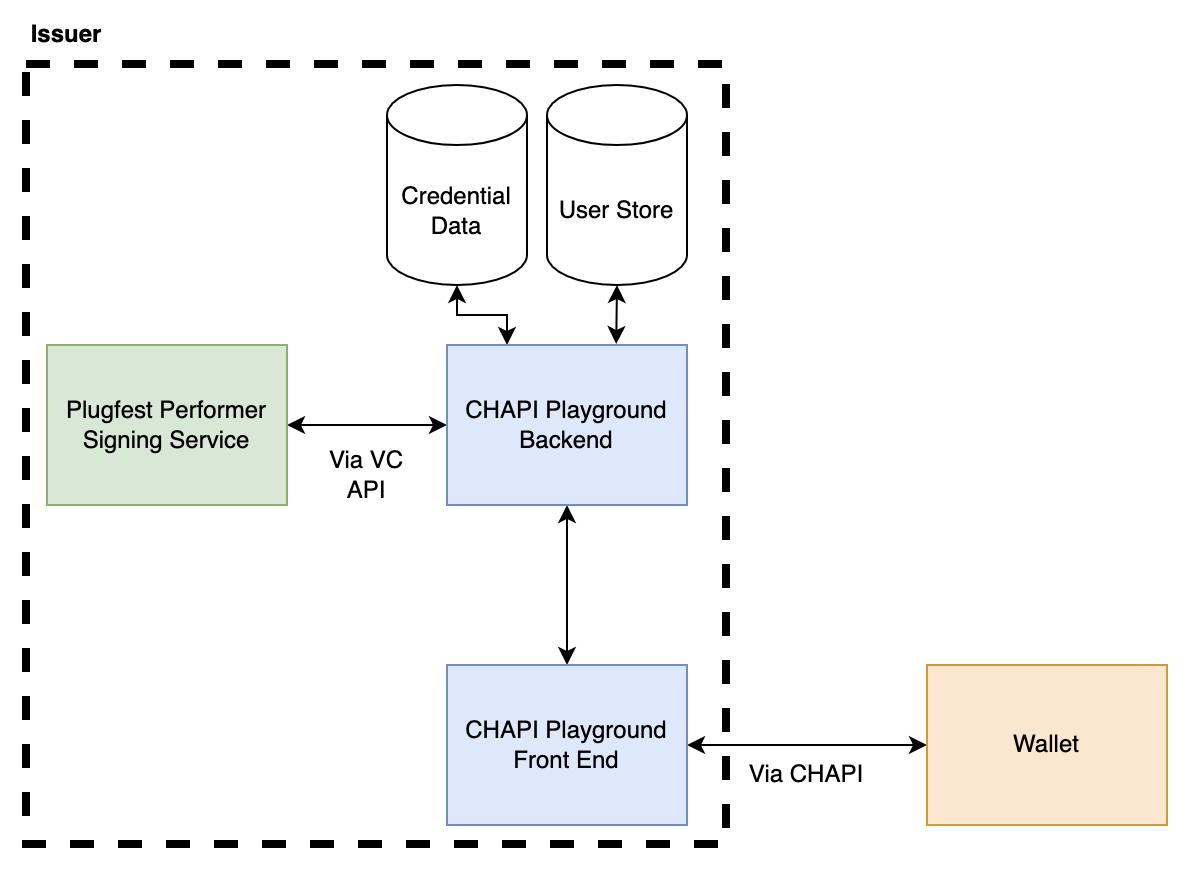

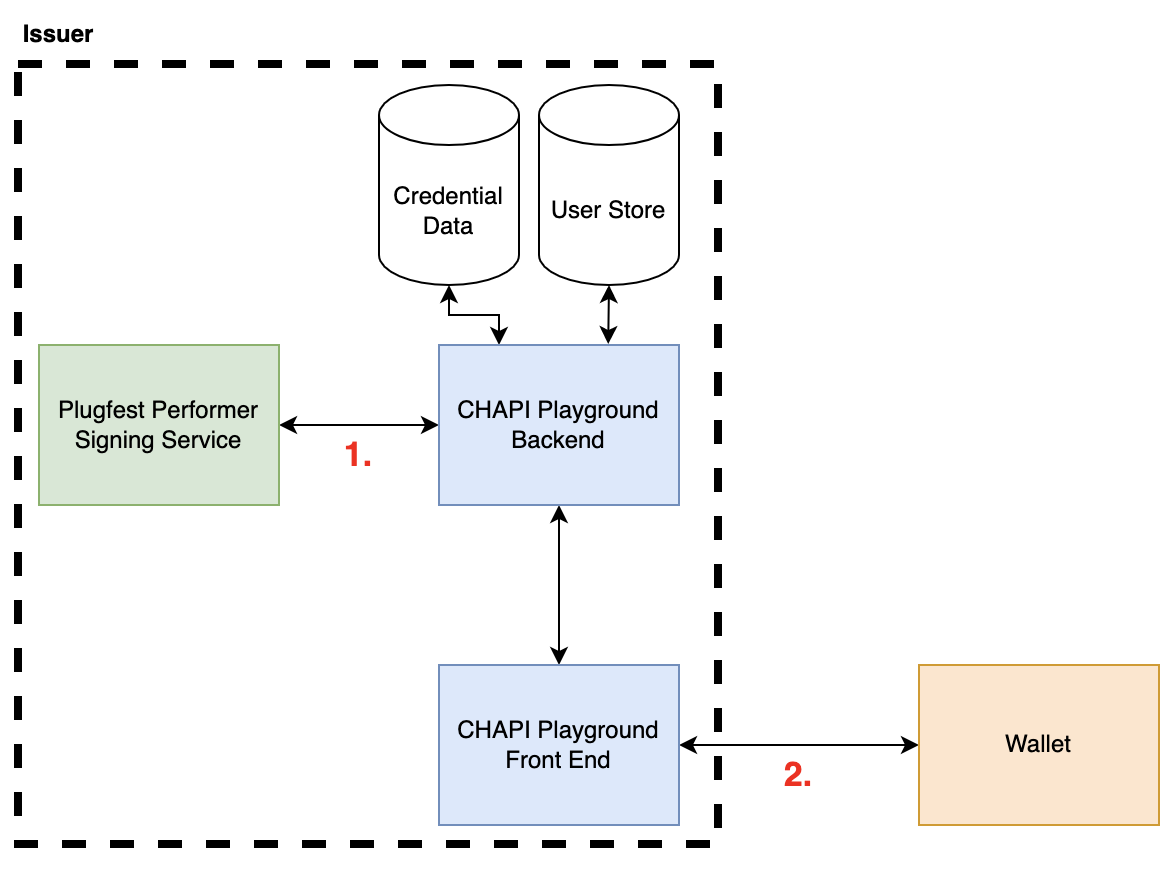

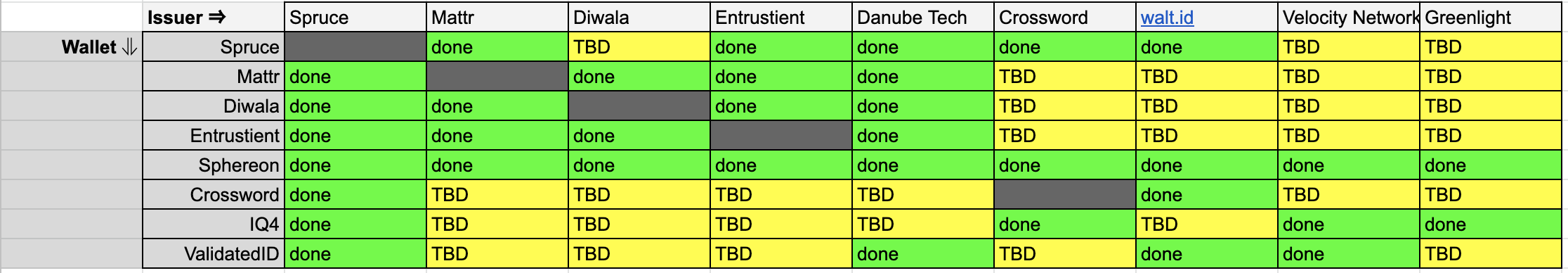

Manu, I appreciate you taking the time to write up your thoughts on this topic, but TL;DR: I really do strongly disagree with most of this characterization, and quite frankly I'm concerned if this is truly the perception of how VC API vs. OpenID 4 VCI works. I hope we can forge a better collective understanding.

Let's break down the "17 implementations" asserted. Based on the interop matrix, 13 of these implemented some combination of options 1-3 (of 6 possible interop options! talk about fragmentation :P), and only 4 (aside from the CHAPI playground) actually implemented an interface that spoke to a wallet. That means 76% (13 of 17) of the reported interop scenarios you cited fell into the following architectural pattern:

[image 1]

You argue that VC API has now introduced a new term that divides the responsibility of the issuer into two parts, but that division isn't agreed upon anywhere outside of the VC API; the VC data model has no notion of an "Issuer co-ordinator". I think what it comes down to is which of these is more important to standardize:

1. An internally focused API that presumes many things about the issuer's infrastructure (e.g. the existence of some "issuer co-ordinator").
2. The interface that allows the issuer to interact with a holder (wallet).

Options are labelled on the following picture for clarity:

[image 2]

OpenID 4 VCI focuses on the latter without any need for an "issuer co-ordinator", because that aligns with the VC data model, and hey, we should probably focus on getting interop between the parties defined in the VC data model before we start inventing more roles, right?

To further reinforce this point, I'll give what I hope is an analogy that explains where I believe the disconnect is, and why a signing API exposed via VC API does not constitute the issuer of the credential it outputs. Let's take a real-world physical credential as an example: a passport.
As a document, there are a tonne of vendors and services involved in delivering a specific country's passport, but only one issuer. For example, the vendor that supplies the hologram or the special ink is not the issuer, just a service provider contracted by the issuer to provide that function.

To make this even more relatable to verifiable credentials, many will know that ePassports actually make use of digital signatures: every passport that rolls off the production line includes an NFC-enabled chip holding a signed eMRTD structure. For many countries, the service that does this signing is not actually the government; it is a PKI service provider. This is conceptually identical to what went on at the JFF plugfest for those that used the CHAPI playground. The issuer, as you describe it, was not the signing service; the signing service was only a small part. Everything else was controlled and performed by the CHAPI playground, including:

- Invoking the wallet
- Identifying the user
- Sourcing the cryptographic proof of possession for credential binding
- Mastering the information that goes in the credential
- Calling the signing service to issue the credential
- Sending the credential to the wallet

How can it possibly be that all of these functions are not those of an issuer? Again, to map this to a real-world use case, there is simply no way the government of a country would say "hey CHAPI playground, I'll expose an API to you and I'll just sign whatever you send me about whoever, with no idea where or to whom it lands". Sounds like a fun mess of identity fraud waiting to happen :p. To be clear, I'm not criticising exposing an API to sign a blob of information; I'm questioning whether it's important for that to be a standard interface, AND I'm saying that the claims about the interop it fosters are dubious.
It doesn't promote interop between the issuer and the holder (wallet), which are the parties in the VC data model; it promotes interop between two new parties that VC API has essentially invented, which together make up the issuer in the VC data model.

> 1) OID4 interop split into two non-interoperable end-to-end camps (but was combined into one camp for reporting purposes, inflating the number of issuers beyond what was actually achieved)

This is just categorically false. We chose to group the issuers into two primary groups, even though some members participated in both, whereas in your interop spreadsheet you chose to put them all in one table. To help clarify this once and for all, here is a combined table that takes the same representation approach you did:

[image 3]

With respect to optionality and fragmentation, can you explain how VC API having 6 groups/classes of interop isn't 3 times worse :P than what you assert about OpenID 4 VCI?

All in all, it's really problematic to assert that "we demonstrated interop in a 3-party model (issuer, verifier, holder) by adding a 4th party called the issuer co-ordinator, who does all of the heavy lifting of the issuer via a common broker we stood up!" and still call that issuer-to-holder interop. OpenID 4 VCI, on the other hand, achieved all of its interop without a centralized common broker.

> * The organizations implementing OID4 had to do this extra work to achieve interop:

Hmmmm, I mean, now this is actually quite funny to respond to :p.
> * Publish which OIDC profile they were using

I mean, it is just bizarre to frame this as problematic. Yes, we had to profile the standard, because it has extensibility points that future-proof the protocol, most of which VC API has yet to even offer a possible solution for, for example:

- Protocol feature negotiation
- Cryptographic suite negotiation
- DID method negotiation
- Proof of possession options (server-generated nonces)
- Party discovery
- Party identification

> * Create a login-based, QRCode-based, and/or deep link initiation page

So it's problematic that all the issuers stood up their own independent sites? Again, that is evidence of the inverse: the implementers were able to implement this themselves, not just delegate to and rely on a common broker (the CHAPI playground) to do all their heavy lifting. Putting that question differently: if VC API is so easy to implement, why did 76% of your cohort have to rely on a central, common broker for much of the critical issuance functionality?

> * Decide if they were going to support VC-JWT or VC-DI; reducing interop partners

But they didn't; quite a few implemented both, see above.

> * Publish a Credential Issuer Metadata Endpoint

Erm, yes, so people know who the issuers are and what credentials they offer. Are you arguing this is hard, or that this feature shouldn't exist? Neither makes sense to me.

> * Create an OAuth token endpoint for pre-authorization code flow

Yes, correct, they had to expose an HTTP endpoint that took a JSON object and gave back a token. What's your point? This has been a standard for ~15 years and it underpins an entire industry (IAM).

> * Publish a Credential Resource Endpoint

Correct, that's where the credential was issued from. What's your point?

> * Publish an OAuth server/Issuer shared JWKS URL

False, not required by the protocol at all; some chose to if they wished.

> * Publish an Issuer JWKs URL

Again, totally false. Please read the specification before you make an assertion like this.
> * Depend on non-publicly available wallet software to test their interoperability status

So you are criticising some vendors for not having their code publicly available? What has that got to do with the protocol involved?

Throughout this thread you appear to be trying to weaponize the word "proprietary" as a way to disparage the OpenID 4 VCI approach, when in reality BOTH approaches allow an implementer to make proprietary decisions in their implementation, which, I might add, is not a bug but a feature! The only difference is what and where. You've drawn a line through a role agreed in the VC data model, the "issuer", splitting it into the "issuer service" and the "issuer co-ordinator", and then asserted "the interface between these two must be standard, otherwise vendor lock-in!" I disagree both with the need to split the function of the issuer the way you have AND that it creates material risk for customers of this capability.

Thanks,

Tobias Looker
MATTR CTO
+64 (0) 27 378 0461
tobias.looker@mattr.global

________________________________
From: Manu Sporny <msporny@digitalbazaar.com>
Sent: 23 November 2022 11:38
To: W3C Credentials CG <public-credentials@w3.org>
Subject: Re: Publication of VC API as VCWG Draft Note

On Mon, Nov 21, 2022 at 1:42 PM David Chadwick wrote:
> this is where I take issue with you (as I said during the plugfest).

Yes, I heard the commentary from the back of the JFF Plugfest room and from across the Atlantic Ocean! :P So let's talk about it, because some of us took issue with the way the OID4 interop work was presented as well. Let's see if we can describe the results from each group in a way we can both sign off on. :)

> You will note that all the OID4VCI implementations had holistic VC Issuers, which is why it was a lot more implementation work than that undertaken by the 17 cryptographic signers.
TL;DR: Your argument suggests that the interoperability achieved via CHAPI and VC API doesn't count, based on your interpretation of what an "Issuer" is and, in my view, an oversimplification of what the VC API issuing API does. This argument has also been leveraged to excuse some of the interop difficulties that were found with OID4. In other words, the argument goes: CHAPI + VC API only appeared to achieve better interop than OID4, but it would have struggled just as much as OID4 had "real interop" been attempted.

The OID4 struggles mentioned were the findings that 1) OID4 interop split into two non-interoperable end-to-end camps (but was combined into one camp for reporting purposes, inflating the number of issuers beyond what was actually achieved), and 2) almost every OID4 issuer was a software vendor, not an institution that issues workforce credentials.

This is a LOOONG post, apologies for the length, but these details are important, so let's get into them. This is going to be fun! :)

> You might have 17 implementations of the signing VC-API, but these are not VC Issuers.

An Issuer is a role. The issuer may execute that role using a variety of system components. The OID4 specification hides, or is agnostic about, all of those system components "behind the API". It doesn't speak about them because "How an Issuer chooses to manage the process of issuance is out of scope; the only thing that matters is how the credential is delivered to the wallet". This puts all of the focus on the simple delivery, or hand-off, of the credential to the wallet, ignoring the rest of the process. That is just one possible design choice; it does not mean other design choices are somehow invalid or unrelated to credential issuance. It also comes with its own trade-offs. For example, putting the process of issuing/verifying/revoking/challenge management out of scope creates a vendor lock-in concern.
An alternative approach specifies individual components, allows them to be swapped out, and reduces the implementation burden on the frontend delivery services. In other words, the delivery mechanism becomes plug-and-play and extremely simple. This plug-and-play mechanism was demonstrated via the CHAPI playground in the CHAPI + VC API interop work.

Now, the VC API group started out making the same mistake of confusing the role of "Issuer" with a set of one or more software components, but it became obvious (over the course of a year) that doing so was causing the group to miscommunicate in the same way that we are miscommunicating right now. It was better to talk about specific functions, each of which helps the Issuer role accomplish the issuance and delivery of one or more credentials to a wallet. It also became clear that "issuance" and "delivery", as just stated, are different functions: a credential is issued when it is fully assembled and has proofs attached to it, and then it is passed from one holder to another until it reaches the wallet (delivery).

This approach also fits cleanly with the VCDM. There are at least three roles in the VC Data Model: Issuer, Holder, and Verifier. Each of those roles will use system components to realize that role in the ecosystem. Some of the system components that we have identified are: the Issuer Coordinator, the Issuer Service, the Issuer Storage Service, the Issuer Status Service, and the Issuer Admin Service. There will be others, with their own APIs, as the ecosystem matures. In general, there are two classes of system components that an issuer ROLE uses: Issuer Coordinators and Issuer Services.
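To make the split between an Issuer Coordinator and an Issuer Service, and between issuance and delivery, a little more concrete, here is a minimal Python sketch. Every name in it (`IssuerCoordinator`, `IssuerService`, `StubIssuerService`, the `did:example` identifiers) is hypothetical and not taken from the VC API specification, and the credential it builds is deliberately incomplete (no `issuanceDate`, a placeholder proof). It only illustrates the idea that the Issuer Service backend can be swapped without touching eligibility checks or delivery.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Protocol


class IssuerService(Protocol):
    """Hypothetical Issuer Service interface: turns an unsigned credential into a VC."""

    def issue(self, credential: dict) -> dict: ...


@dataclass
class IssuerCoordinator:
    """Hypothetical Issuer Coordinator: business rules, then issuance, then delivery."""

    service: IssuerService                 # swappable Issuer Service backend
    is_eligible: Callable[[str], bool]     # business rule, e.g. the result of a login or DIDAuth
    deliver: Callable[[str, dict], None]   # delivery mechanism, e.g. CHAPI, OID4, plain HTTP

    def handle_request(self, holder_id: str, claims: dict) -> Optional[dict]:
        # The Coordinator decides whether this holder should receive a credential at all.
        if not self.is_eligible(holder_id):
            return None
        # Assemble the (incomplete, illustrative) unsigned credential.
        unsigned = {
            "@context": ["https://www.w3.org/2018/credentials/v1"],
            "type": ["VerifiableCredential"],
            "credentialSubject": {"id": holder_id, **claims},
        }
        vc = self.service.issue(unsigned)  # issuance: delegated to the Issuer Service
        self.deliver(holder_id, vc)        # delivery: a separate step with its own protocol
        return vc


class StubIssuerService:
    """Stand-in Issuer Service; a real one would add issuer details and a Data Integrity proof."""

    def __init__(self, issuer_id: str) -> None:
        self.issuer_id = issuer_id

    def issue(self, credential: dict) -> dict:
        return {**credential, "issuer": self.issuer_id, "proof": {"type": "placeholder"}}


# Usage: one Coordinator, two interchangeable (hypothetical) Issuer Service vendors.
delivered = []
coordinator = IssuerCoordinator(
    service=StubIssuerService("did:example:vendor-a"),
    is_eligible=lambda holder: holder.startswith("did:"),
    deliver=lambda holder, vc: delivered.append((holder, vc)),
)
coordinator.handle_request("did:example:alice", {"name": "Alice"})
coordinator.service = StubIssuerService("did:example:vendor-b")  # swap vendors; nothing else changes
```

In this sketch, swapping `coordinator.service` changes only which backend performs issuance; the eligibility rule and the delivery callback stay exactly as they were.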
You can read more about this in the VC API Architecture Overview (but be careful: the diagram hasn't been updated from "App" -> "Coordinator" yet; it's also a draft work in progress, and there are errors and vagueness):

https://w3c-ccg.github.io/vc-api/#architecture-overview

All that being said, I think we're making a mistake if we think that the name we apply to the interop work performed matters more than what was actually technically accomplished. Did we accomplish plug-and-play or not, and how many different parties participated by providing their plug-and-play components to the process?

> A VC Issuer talks to the wallet/holder (as per the W3C eco system model) and has much more functionality than simply signing a blob of JSON.

What you are referring to in your comment is the concept of the "Issuer Coordinator" in VC API terminology. It is the entity that does all of the business rule processing to determine whether the entity that has contacted it should receive a VC. In CHAPI + VC API, this can be done via a simple username/password website login, multifactor login, federated login, login + DIDAuth, or via the exchange of multiple credentials in a multi-step process. The Issuer Coordinator is capable of delegating these steps to multiple service backends. OID4 does not define an API around those steps of delegation (vendor lock-in risk); VC API claims that they really do matter and defines them (choice in vendors).

Also, the Holder role referenced above refers to any party that currently holds the credential. The same party that plays the Issuer role always also plays the Holder role until delivery to a wallet. In the VC API, the VC is issued via the issuing API and then delivered through some delivery protocol. Delivery can be done with a simple HTTP endpoint or using other protocols such as OID4. My understanding is that there is a similar concept contemplated in OID4 (called "Batched issuance" or something?
I couldn't find a reference) where the VC will be held (by a Holder, of course) until the wallet arrives to receive it. Just because OID4 hides issuance behind a delivery protocol (intentionally being agnostic about how it happens) does not mean that every protocol must work this way.

Now, for JFF Plugfest #2, the CHAPI Playground was ONE of the Issuer Coordinators, but others were demonstrated via Accredita, Fuix Labs, Participate, RANDA, and Trusted Learner Network. So, even when we use your definition of "issuance", which I expect would struggle to achieve consensus, there were multiple parties doing "issuance". Not only did the VC API cohort demonstrate that you could build standalone Issuer Coordinator sites, we also demonstrated a massive Issuer Coordinator that had 13 Issuer Services integrated into the backend. We also had an additional 4 Issuer roles that used their own Issuer Coordinators to put a VC in a wallet, demonstrating not only choice in protocols (issuance over CHAPI and issuance over VC API), but choice in Issuer Service vendors as well.

> An issuer that simply signs any old JSON blob that is sent to it by the middleman (the CHAPI playground) is not a holistic issuer. It is simply a cryptographic signer.

No, that's not how the VC API works. An Issuer Coordinator and an Issuer Service are within the same trust boundary. If we look only at the CHAPI Playground as an Issuer Coordinator, it had the ability to reach out to those 13 Issuer Services because those services had given it an OAuth2 Client ID and Client Secret to call their Issuer Service APIs. Those Issuer Services (which implement the VC Issuer API), however, are run by completely different organizations such as Arizona State University, Instructure, Learning Economy Foundation, Digital Credentials Consortium, and others.
You are arguing that they are not real Issuers even though:

1) the API they implement is specific to issuing Verifiable Credentials; they do not implement "generic data blob signing",
2) they handle their own key material, and
3) they implement Data Integrity Proofs by adding their issuer information (including their name, imagery, and public keys), using the referenced JSON-LD Contexts, performing RDF Dataset Canonicalization, and using their private key material to digitally sign the Verifiable Credential that is then handed back to the Issuer Coordinator.

Some issuers internally used a separate cryptographic signer API, called WebKMS, to perform the actual cryptographic signing, but *that* API was not highlighted here, and it is the API that more closely approximates signing "any old JSON blob". More must be done in an issuance API implementation than just performing cryptographic operations, so your description of the issuance API is not accurate.

The whole issuance process is proprietary in OID4 today; it's simply not defined, by design. Only the delivery mechanism (the request for a credential of a certain type and its receipt) is defined. As mentioned, the VC API separates issuance and delivery to help prevent vendor lock-in and to enable multiple delivery mechanisms without conflating them with the issuance process.

> The middleman and the signer together constitute a holistic VC Issuer as it is the middleman that talks to the wallet, says which VCs are available to the wallet, authenticates and authorises the user to access the VC(s) and then gets the VC(s) signed by its cryptographic signer.

You're basically describing an Issuer Coordinator in VC API parlance: the entity that executes VC-specific business logic to determine whether a VC should be issued to a particular entity.
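The Coordinator-to-Issuer-Service relationship described here (the Coordinator holding an OAuth2 Client ID and Client Secret issued by each Issuer Service) can be sketched with the Python standard library. This builds, but does not send, an OAuth 2.0 client credentials token request (RFC 6749, section 4.4) followed by a VC API issuing call to `POST /credentials/issue` with a `{"credential": ..., "options": ...}` body. The token endpoint path, the base URLs, the client values, and the use of a Bearer token on the issuing call are assumptions for illustration, not requirements taken from the VC API specification.

```python
import base64
import json
import urllib.parse
import urllib.request


def client_credentials_request(token_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request (RFC 6749, 4.4)."""
    # RFC 6749, 2.3.1: form-encode the id and secret, then use HTTP Basic authentication.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    basic = base64.b64encode(pair.encode()).decode()
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def issue_credential_request(service_base_url: str, access_token: str, credential: dict) -> urllib.request.Request:
    """Build (but do not send) a VC API issuing call: POST /credentials/issue."""
    body = json.dumps({"credential": credential, "options": {}}).encode()
    return urllib.request.Request(
        f"{service_base_url.rstrip('/')}/credentials/issue",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",  # assumed authorization scheme
            "Content-Type": "application/json",
        },
    )


# Hypothetical URLs and client values, for illustration only.
token_req = client_credentials_request("https://issuer.example/oauth/token", "coordinator-1", "s3cret")
issue_req = issue_credential_request(
    "https://issuer.example/", "ACCESS_TOKEN", {"type": ["VerifiableCredential"]}
)
# To send: urllib.request.urlopen(token_req), then read "access_token" from the JSON response.
```

Note that the Issuer Service, not the Coordinator, decides which clients it issues secrets to, which is how the two stay within one trust boundary even when run by different organizations.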
The VC API has a layered architecture such that the entity implementing the Issuer Coordinator, the entity performing DIDAuth, and the entity in control of the private keys and Issuer Service don't have to be the same entity, component, or service in the system. It's also true that you don't have to keep re-implementing the same logic over and over again with CHAPI, VPR, and VC API; instead you can re-use components and put them together in ways that save weeks of developer time (as was demonstrated during the plugfest by the companies that started from scratch). This enabled people to participate by implementing the pieces that they wanted to (as reported in the CHAPI matrix) without having to build everything as a monolithic application. This meant even more interoperability and component re-use.

So it is true that almost everyone had a fairly smooth experience reaching the interoperability bar for VC API, but that was because of the layered, component-based design of CHAPI + VPR + VC API. I'm sure that OID4 implementers did benefit from reusing existing OAuth2 tools, but my understanding is that everything behind the API is a full rewrite for each participant instead of allowing for reuse or interoperability between components. It could also be that the OID4 implementers struggled more because the VC API was easier to implement in a number of other ways.
Just taking a guess at some of the things, from my perspective, that may have slowed down OID4 implementers:

* The organizations implementing OID4 had to do this extra work to achieve interop:
  * Publish which OIDC profile they were using
  * Create a login-based, QRCode-based, and/or deep link initiation page
  * Decide if they were going to support VC-JWT or VC-DI; reducing interop partners
  * Publish a Credential Issuer Metadata Endpoint
  * Create an OAuth token endpoint for pre-authorization code flow
  * Publish a Credential Resource Endpoint
  * Publish an OAuth server/Issuer shared JWKS URL
  * Publish an Issuer JWKs URL
  * Depend on non-publicly available wallet software to test their interoperability status

The folks that used CHAPI + VC API didn't have to do any of that, which made things go faster. Does that mean that different parties did not implement VC delivery? No, see the comments above on that. It just seems it was easier for some VC API implementers to implement delivery. Some of this may be due to the use of CHAPI, which they perhaps found easier to implement than some items in the above list. Future OID4 implementations could also avoid some of the items above by relying on CHAPI instead.

Now, does that mean CHAPI + VPR + VC API doesn't have its challenges? Of course not! At present, the native app CHAPI flow needs usability improvements, and we're going to be working on that in 2023 Q1 by integrating native apps into the CHAPI selector and using App-claimed HTTPS URLs. The VC API and VPR specs need some serious TLC, and the plugfest gave us an idea of where we can put that effort. VPR currently only supports two protocols (browser-based VPR and VC API-based VPR) and will be adding more early next year, since CHAPI is protocol-agnostic and it's clear at this point (at least to me) that we're looking at a multi-protocol future in the VC ecosystem.
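To ground the "Create an OAuth token endpoint for pre-authorization code flow" item in the list above, here is a minimal sketch of the request a wallet sends to such an endpoint, built but not sent with the Python standard library. The grant type and the `pre-authorized_code` and `user_pin` parameter names follow the OID4VCI drafts current at the time of this thread and may differ in later drafts; the endpoint URL and code value are made up for illustration.

```python
import urllib.parse
import urllib.request
from typing import Optional

# Grant type defined by the OID4VCI drafts for the pre-authorized code flow.
PRE_AUTHORIZED_GRANT = "urn:ietf:params:oauth:grant-type:pre-authorized_code"


def pre_authorized_token_request(token_url: str, pre_authorized_code: str,
                                 user_pin: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) the wallet's token request for the pre-authorized code flow."""
    params = {"grant_type": PRE_AUTHORIZED_GRANT, "pre-authorized_code": pre_authorized_code}
    if user_pin is not None:
        # Named "user_pin" in the 2022-era drafts; later drafts replaced it with "tx_code".
        params["user_pin"] = user_pin
    # As with any OAuth 2.0 token request, the body is form-encoded; the response is JSON.
    body = urllib.parse.urlencode(params).encode()
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


# Hypothetical endpoint and code, e.g. taken from a credential offer scanned as a QR code.
req = pre_authorized_token_request("https://issuer.example/token", "abc123-example")
```

The issuer-side counterpart is an ordinary OAuth 2.0 token endpoint that validates the code (and PIN, if one was required) and returns an access token for the credential endpoint.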
I'm sure I'm missing other places where CHAPI + VPR + VC API needs to improve, and I'm sure that people on this mailing list won't be shy in suggesting those limitations and improvement areas if they feel so inclined. :)

Let me stop there and see if any of the above resonates, or if I'm papering over some massive holes in the points being made above. I'm stepping away for US Thanksgiving now, and am thankful to this community (and DavidC) for these sorts of conversations throughout the years. :)

-- manu

--
Manu Sporny - https://www.linkedin.com/in/manusporny/
Founder/CEO - Digital Bazaar, Inc.
News: Digital Bazaar Announces New Case Studies (2021)
https://www.digitalbazaar.com/

Attachments

- image/png attachment: image.png

- image/png attachment: 02-image.png

- image/png attachment: 03-image.png

Received on Wednesday, 23 November 2022 08:18:36 UTC