- From: Christopher Cameron <ccameron@google.com>

- Date: Wed, 31 Mar 2021 01:46:15 -0700

- To: Lars Borg <borg@adobe.com>

- Cc: Simon Thompson-NM <Simon.Thompson2@bbc.co.uk>, "public-colorweb@w3.org" <public-colorweb@w3.org>

- Message-ID: <CAGnfxj9E5Qh6H4DVJtAEuuKEBwO-oe04Y2AYNmfL6_twLDFAJg@mail.gmail.com>

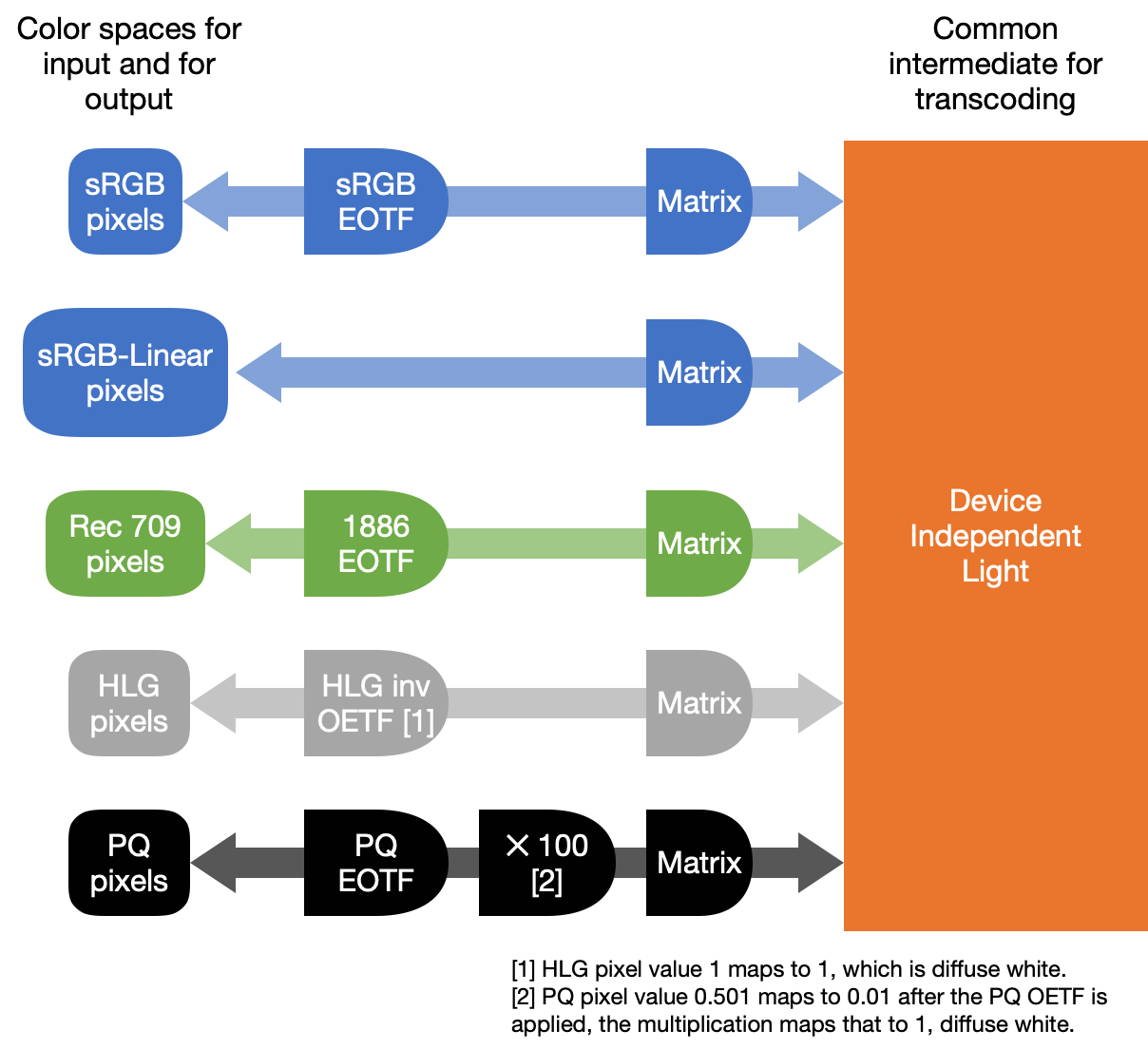

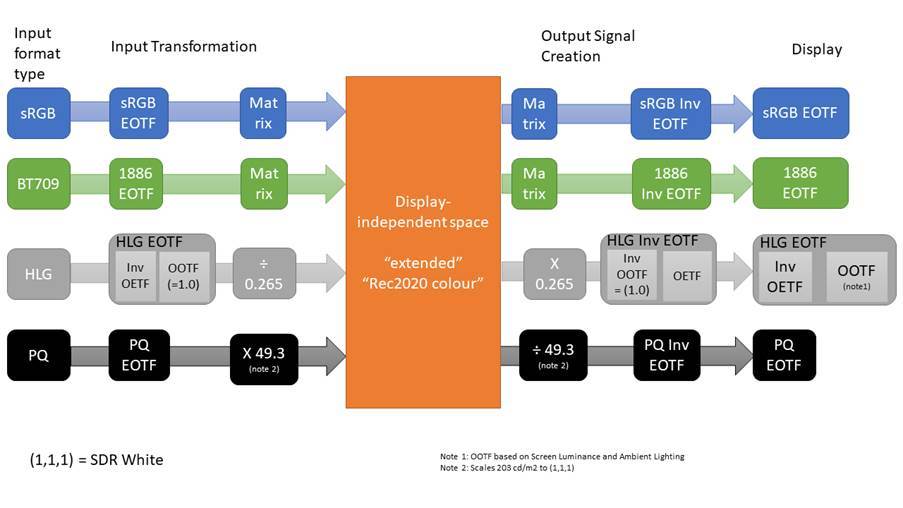

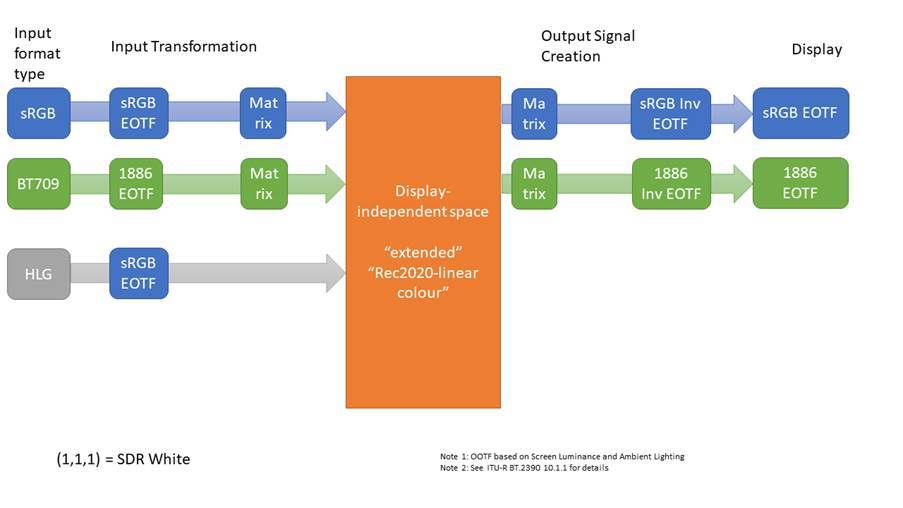

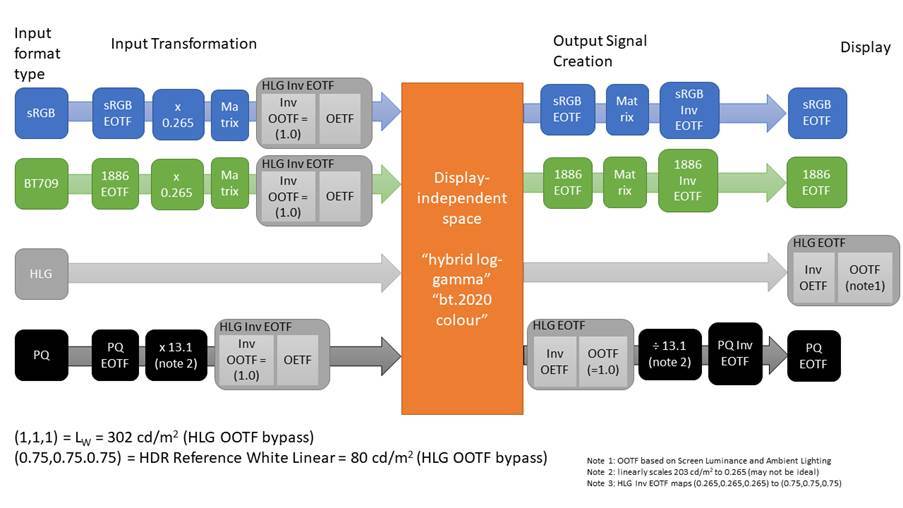

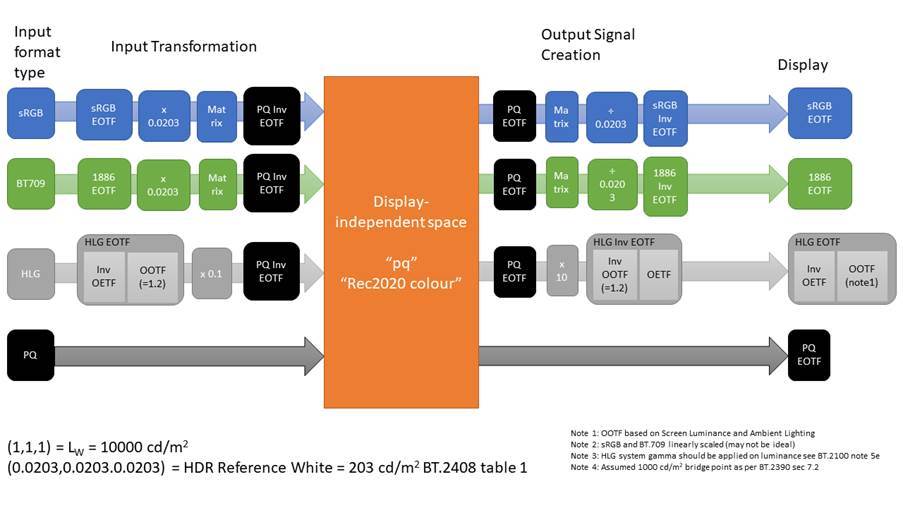

I think that's the core question: should that be a reference display, or is that undesirable? I'm leaning towards it being undesirable. I think we should shovel the complexity of "how should a PQ image appear on an SDR canvas", "how should an SDR image appear in an HLG canvas", and "how should an HLG image appear on a PQ canvas" somewhere else. Arguably, that place would be the ImageBitmap interface, which already has a color space conversion behavior flag, and gets a target color space flag in the P3 proposal.

I wrote some slides for tomorrow's discussion here <https://docs.google.com/presentation/d/1hqd5dBgklFn8eFBsd9_NVmH3bYzjrrtTvbGAPmjx4cI/edit?usp=sharing>. They include a couple of worked examples of how ImageBitmap could work. Having gone through them, my feeling is that all images should have pixel values in [0, 1] (so the "multiply PQ by 100" part of the strawman goes away).

The slides are meandering thoughts, and depending on where the conversation goes, we may only want to look at a couple of them.

On Tue, Mar 30, 2021 at 3:20 PM Lars Borg <borg@adobe.com> wrote:

> Consider changing the orange box to read Reference Display Light. This would be consistent with using sRGB.
>
> What are the details for the orange box?
>
> Rec2020 primaries & white point?
>
> Luminance for signal 1.0? 100 or 203 nits?
>
> Surround? 5 nits? (BT.2035 is old.)
>
> Lars
>
> *From:* Christopher Cameron <ccameron@google.com>
> *Date:* Tuesday, March 30, 2021 at 9:52 AM
> *To:* Simon Thompson <Simon.Thompson2@bbc.co.uk>
> *Cc:* "public-colorweb@w3.org" <public-colorweb@w3.org>
> *Subject:* Re: HTML Canvas - Transforms for HDR and WCG
> *Resent-From:* <public-colorweb@w3.org>
> *Resent-Date:* Tuesday, March 30, 2021 at 9:52 AM
>
> Thank you for writing this up! It definitely shows the transformations being applied much more clearly. I've stolen your diagram scheme.
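Lars's first question (Rec2020 primaries and white point for the orange box) and Simon's later remark that the extended sRGB canvas reaches wider gamuts through negative values both come down, in linear light, to a 3×3 matrix between sets of primaries. A minimal Python sketch, assuming the rounded BT.709-to-BT.2020 coefficients published in ITU-R BT.2087 (both spaces share the D65 white point); `mat_vec` and `invert3` are illustrative helpers, not part of any canvas API:

```python
# Linear BT.709 RGB -> linear BT.2020 RGB, using the rounded coefficients
# published in ITU-R BT.2087. Both spaces use a D65 white point, so each row
# sums to 1 and white stays white.
M_709_TO_2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def mat_vec(m, v):
    """Apply a 3x3 matrix (tuple of rows) to an RGB triple."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def invert3(m):
    """Invert a 3x3 matrix via its adjugate (keeps the sketch dependency-free)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = (
        (e * i - f * h, c * h - b * i, b * f - c * e),
        (f * g - d * i, a * i - c * g, c * d - a * f),
        (d * h - e * g, b * g - a * h, a * e - b * d),
    )
    return tuple(tuple(x / det for x in row) for row in adj)


M_2020_TO_709 = invert3(M_709_TO_2020)

print(mat_vec(M_709_TO_2020, (1.0, 1.0, 1.0)))  # approximately (1.0, 1.0, 1.0)
# A fully saturated BT.2020 green, expressed in linear light with BT.709/sRGB primaries:
print(mat_vec(M_2020_TO_709, (0.0, 1.0, 0.0)))  # roughly (-0.59, 1.13, -0.10)
```

Because the rows sum to 1, white maps to white; the negative red and blue components of BT.2020 green are the out-of-gamut values that a canvas clamped to [0, 1] with sRGB primaries would lose.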
>
> I think the primary question that we're circling around here is how we transform between color spaces (sRGB, PQ, HLG), with the options being:
>
> - A: There exists a single well-defined transformation between content types
>   - Transformations are path-independent
>   - Transformations are all invertible (at least up to clamping issues)
> - B: The transformation between content types depends on the context
>   - It's still well-defined, but depends on the target that will draw the content
>   - It is no longer path-independent, and may not obviously be invertible
>
> (This differs from the proposal in that it respects BT.2408's white = 203 nits = 0.75 HLG, but that's sort of peripheral to the differences here -- please read this ignoring that difference.)
>
> I lean more heavily towards A, for two reasons.
>
> - Reason 1: The main advantage of B is that it does better transcoding between spaces, BUT
>   - It is constrained in such a way that it will never do good enough transcoding to warrant its complexity, in my opinion.
> - Reason 2: It's simple to reason about and is predictable
>   - This helps ensure that browsers will be consistent
>   - This simplicity allows the developer to implement any fancier transcoding they want without fighting the existing transcoding.
>
> Examples of B doing divergent transcoding for HLG are:
>
> - PQ canvas: we apply a gamma=1.2 OOTF.
>   - This bakes in an assumption of 1,000 nit max (or mastering) luminance
> - extended sRGB: we bake in a gamma=1.0 OOTF
>   - This bakes in an assumption of 334 nits
> - SDR: we use the sRGB EOTF
>
> The following diagram shows my proposal for A (in this example I don't use the BT.2408 numbers, but we could do multiplication so that an HLG signal of 0.75 and a PQ signal of 0.581 map to 1, which is diffuse white). The key feature is that the transformations for input and output are always identical.
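The 1,000-nit and 334-nit figures in the examples of B follow from the BT.2100 HLG system-gamma formula, γ = 1.2 + 0.42·log10(L_W/1000), where L_W is the display's nominal peak luminance. A minimal Python sketch, assuming that formula and an OOTF with zero black level (so the black-lift term is dropped); the function names are hypothetical and not part of any canvas or ImageBitmap API:

```python
import math


def hlg_system_gamma(peak_nits: float) -> float:
    """BT.2100 HLG system gamma for nominal display peak luminance L_W (cd/m^2)."""
    return 1.2 + 0.42 * math.log10(peak_nits / 1000.0)


def peak_for_gamma(gamma: float) -> float:
    """Inverse of hlg_system_gamma: the L_W at which the system gamma equals `gamma`."""
    return 1000.0 * 10.0 ** ((gamma - 1.2) / 0.42)


def hlg_ootf(rgb_scene, peak_nits):
    """HLG OOTF with zero black level: normalised scene light -> display light in cd/m^2."""
    r, g, b = rgb_scene
    y_s = 0.2627 * r + 0.6780 * g + 0.0593 * b  # BT.2100 luminance weights
    if y_s <= 0.0:
        return (0.0, 0.0, 0.0)
    scale = peak_nits * y_s ** (hlg_system_gamma(peak_nits) - 1.0)
    return (scale * r, scale * g, scale * b)


print(hlg_system_gamma(1000.0))    # 1.2
print(round(peak_for_gamma(1.0)))  # 334
```

Solving γ = 1.0 gives L_W ≈ 334 cd/m², the point at which the HLG OOTF collapses to a pure scale factor; that is the luminance a gamma=1.0 OOTF implicitly assumes.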
>
> No intelligent transcoding is attempted. That's not because the proposal considers transcoding unimportant; rather, it's a recognition that any such automatic transcoding would not be good enough. With this scheme, it is fairly trivial for an application to write a custom HLG<->PQ transcoding library. Such functionality could be added to ImageBitmap (where the user could say "load this image and perform transcoding X on it, using parameters Y and Z, because I know where it is going to be used").
>
> Because all of the color spaces have well-defined transformations between them, there's no reason to couple the canvas' storage color space to the HDR compositing mode. In fact, coupling them may be non-ideal:
>
> - Application Example: Consider a photo editing application that intends to output HLG images, but wants to perform its manipulations in a linear color space. The application can select a linear color space (for working space) and HLG as the compositing mode.
> - Browser Implementation Example: For the above application, it might be that the OS/Display require that the browser transform to an HLG signal behind the scenes, but at time of writing, no such OS exists (in fact, all OSes require conversion into a linear space for HLG, but that may change).
>
> Let's discuss this more on Wednesday!
>
> On Mon, Mar 29, 2021 at 9:35 AM Simon Thompson-NM <Simon.Thompson2@bbc.co.uk> wrote:
>
> Hi all,
>
> My colleagues and I have spent some time thinking about the HTML canvas proposals and the input and output scaling required for each for HDR and wider colour gamut functionality. I’ve added some plots showing some ideas on how input and output formats could be transformed for the new canvases.
>
> New extended sRGB canvas format
>
> In this canvas (1,1,1) is equal to SDR White and (0,0,0) is equal to Black.
> Values are not, however, clipped outside this range: brighter parts of the image can be created using values over 1, and wider colour gamuts can be created using negative values. For each input signal, we can simply convert to linear rec2020 and then scale such that the SDR reference white signal has the value (1,1,1). Note that linearly scaling PQ display-light signals without an OOTF adjustment does not maintain their subjective appearance.
>
> For SDR outputs this would cause clipping of the HDR signals above (1,1,1). For HLG, should the canvas be able to determine the output format required, we could instead use an sRGB EOTF on the input and use the compatible nature of the HLG signal to create a tone-mapped sRGB image.
>
> HLG Canvas
>
> [image: diagram]
>
> In this canvas (1,1,1) is equal to Peak White and (0,0,0) is equal to Black. The display-independent working space is a BT.2100 HLG signal.
>
> Input signals that are not HLG are converted to normalised linear display light. These are converted to the HLG display-independent space using an HLG inverse EOTF. The EOTF LW parameter is selected such that the HLG OOTF becomes unity (i.e. a pass-through), simplifying the conversion process and avoiding a requirement for a 3D-LUT.
>
> - SDR display-light signals are scaled so that their nominal peak equals HDR Reference White (0.265) and matrixed to the correct colourspace prior to conversion to HLG.
> - PQ signals are scaled so that 203 cd/m² equals HDR Reference White (0.265) prior to conversion to HLG.
>
> The HLG conversion maps HDR reference white in normalised linear display light (0.265,0.265,0.265) to (0.75,0.75,0.75) in the HLG non-linear signal. Formats which are already linear, e.g. “sRGB-linear” and “rec2020-linear”, are treated similarly but do not require an initial EOTF to convert them to linear.
>
> Note, BT.1886 can be used with either BT.709 or BT.2020 signals.
> The correct matrices should be chosen to match the input signal and the output display.
>
> PQ Canvas
>
> [image: diagram]
>
> In this canvas (1,1,1) is equal to 10000 cd/m² and (0,0,0) is equal to 0 cd/m². Input signals that are not PQ are converted to linear and scaled so that HDR reference white is set to 0.0203. They are then matrixed to the correct colourspace and converted to PQ. The conversion maps (0.0203,0.0203,0.0203) to (0.58,0.58,0.58). Formats which are already linear, e.g. “sRGB-linear” and “rec2020-linear”, are treated similarly but do not require an initial EOTF to convert them to linear.
>
> Best Regards
>
> Simon
>
> *Simon Thompson*
> Senior R&D Engineer
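The reference-white numbers in Simon's canvas descriptions (0.265 → 0.75 for the HLG canvas, 0.0203 → 0.58 for the PQ canvas), like the 0.75 HLG / 0.581 PQ diffuse-white values in Chris's proposal A, can be reproduced from the SMPTE ST 2084 PQ curve and the BT.2100 HLG OETF. This Python sketch assumes, as Simon describes, a unity HLG OOTF, so the HLG inverse EOTF reduces to the OETF applied to normalised display light, and takes 203 cd/m² as HDR reference white per BT.2408. It handles single channel values only and does no matrixing or tone mapping; all function names, including `pq_to_hlg_canvas`, are hypothetical.

```python
import math

# SMPTE ST 2084 (PQ) constants.
PQ_M1 = 2610 / 16384
PQ_M2 = 2523 / 4096 * 128
PQ_C1 = 3424 / 4096
PQ_C2 = 2413 / 4096 * 32
PQ_C3 = 2392 / 4096 * 32

# ITU-R BT.2100 HLG OETF constants.
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)

HDR_REF_WHITE_NITS = 203.0    # BT.2408 HDR reference white
HLG_REF_WHITE_LINEAR = 0.265  # normalised display light for reference white (from the mail)


def pq_encode(y: float) -> float:
    """PQ inverse EOTF: linear light (1.0 = 10000 cd/m^2) -> PQ signal."""
    p = max(y, 0.0) ** PQ_M1
    return ((PQ_C1 + PQ_C2 * p) / (1 + PQ_C3 * p)) ** PQ_M2


def pq_decode(e: float) -> float:
    """PQ EOTF: PQ signal -> linear light (1.0 = 10000 cd/m^2)."""
    p = max(e, 0.0) ** (1 / PQ_M2)
    return (max(p - PQ_C1, 0.0) / (PQ_C2 - PQ_C3 * p)) ** (1 / PQ_M1)


def hlg_oetf(e: float) -> float:
    """HLG OETF; with a unity OOTF this is also the inverse EOTF on normalised display light."""
    if e <= 1 / 12:
        return math.sqrt(3 * max(e, 0.0))
    return HLG_A * math.log(12 * e - HLG_B) + HLG_C


def pq_to_hlg_canvas(signal: float) -> float:
    """Hypothetical helper: PQ signal -> HLG canvas value, mapping 203 cd/m^2 to 0.265.

    No tone mapping: anything above ~766 cd/m^2 lands above 1.0 and would need clipping.
    """
    nits = pq_decode(signal) * 10000.0
    return hlg_oetf(nits / HDR_REF_WHITE_NITS * HLG_REF_WHITE_LINEAR)


print(round(pq_encode(HDR_REF_WHITE_NITS / 10000.0), 3))  # 0.581
print(round(hlg_oetf(HLG_REF_WHITE_LINEAR), 3))           # 0.75
print(round(pq_to_hlg_canvas(0.581), 2))                   # 0.75
```

The PQ-to-HLG path also shows the limit of a fixed scale: with 203 cd/m² pinned to 0.265, PQ content brighter than about 766 cd/m² has no room left in the HLG signal range without clipping or tone mapping.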

Attachments

- image/png attachment: image001.png

- image/jpeg attachment: image002.jpg

- image/jpeg attachment: image003.jpg

- image/jpeg attachment: image004.jpg

- image/jpeg attachment: image005.jpg

Received on Wednesday, 31 March 2021 08:46:42 UTC