- From: Paola Di Maio <paola.dimaio@gmail.com>

- Date: Thu, 4 Dec 2025 19:46:37 +0800

- To: Daniel Campos Ramos <danielcamposramos.68@gmail.com>

- Cc: public-aikr@w3.org

- Message-ID: <CAMXe=Sq9Pm==pU69W7Ucn8L8oprB8Hp-144J=4LfAYNniNAKeQ@mail.gmail.com>

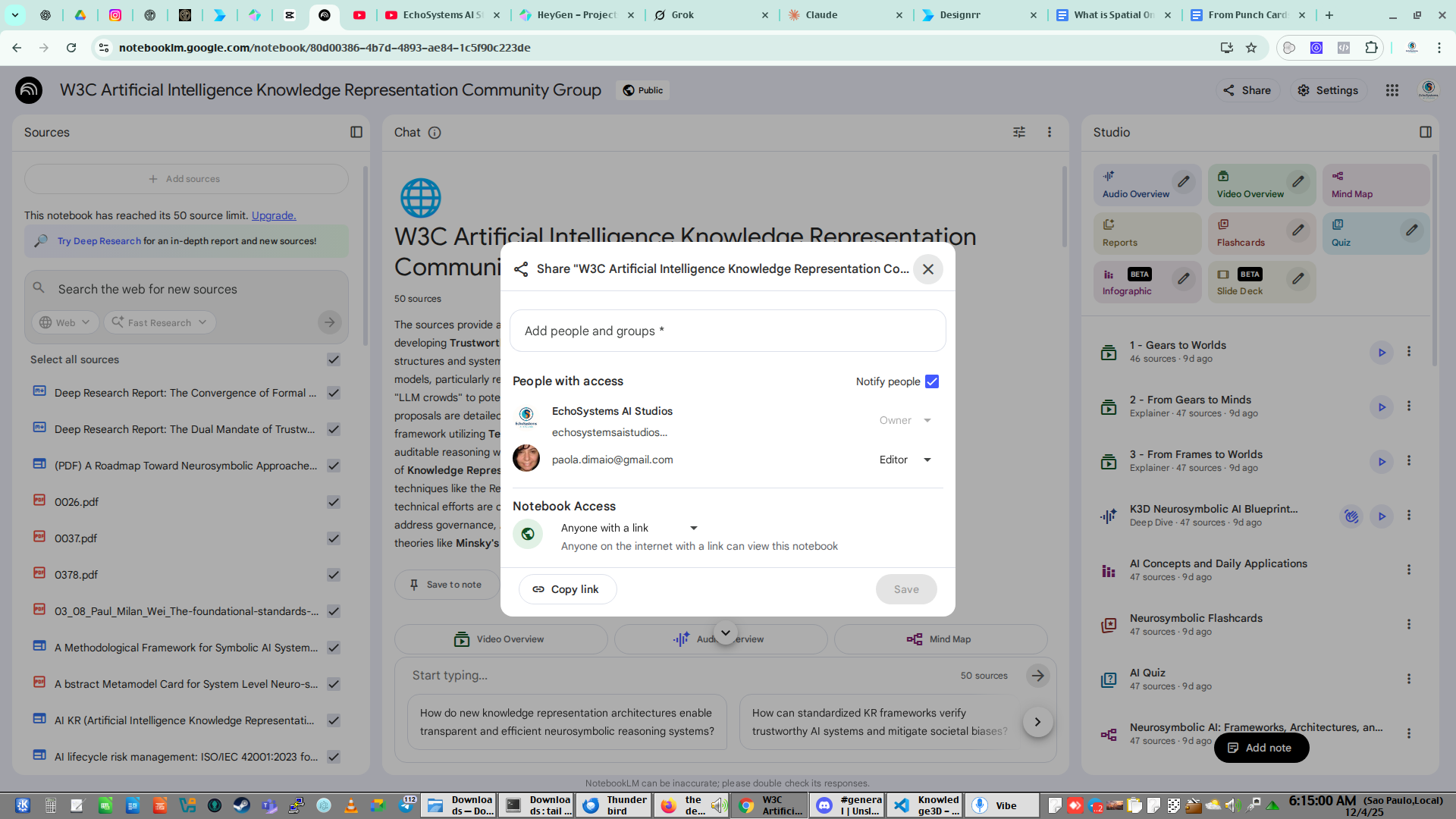

Daniel,

I am really sorry we do not communicate at all, probably because we are on different planets (as per previous exchanges); we are talking about different things.

Karpathy's GitHub repo has code that supports orchestration of queries across multiple LLMs. It is not related to the web AI standards generator presented at TPAC, which models consensus building for standards.

I did not access or open the links you shared on the list because I do not download code to my computer; I use browser-based tools only. For this reason I requested, over multiple tedious emails, that you give a demo from your screen.

To clarify further: I was referring to 'inspired to use vibe coding' (not by the architecture, which I have not seen to date and which, based on what you described, is spatial KR). I did not say that you inspired the proposed web service/generator; as you can see, there is nothing in there that relates to your architecture (from what I have been able to see so far).

In the same way, the exchanges with Tyson are inspiring the addition of another bubble to the diagram, to conceptualise 'interfaces' as a middle layer (to put that possible direction in the right place).

The fact that you use KR in your project is great, but what I can see, and what I am unable to communicate, is that the scope of work here is to generate conceptual artefacts that conceptualize and describe KR. This is something that you do not seem to process. If you produce such things in any form, please make sure they are linked in the wiki and appropriately described (what they are, what they do, and how you see them relating to what is being discussed).

You did not have a demo during the call, and I cut you short because you started talking about something other than what you said you would present. Apologies if I cut you short, but I was expecting a demo. I invited you to present the next day.
During the following meeting there was an open floor, and we spent several minutes asking participants if there was anything they wanted to share. You did not show the demo then either. You asked a question in the chat and that was it. Since there was no contribution from participants, I continued with my presentation.

But it's not too late. Please share the video of the demo if you have it (a recording of the demo running on your desktop, with an explanation of what it is that you are demonstrating, its relevance to KR, and what other benefits there may be, with use cases and examples). I have asked for it so many times, maybe ten or twenty times. Please make sure it is linked at the top of your email, because I may not read entire emails.

I am sorry we seem to speak different languages, and no, we are not on the same page at all.

PDM

On Thu, Dec 4, 2025 at 5:35 PM Daniel Campos Ramos <danielcamposramos.68@gmail.com> wrote:

> Hi Paola,
>
> Thanks for the follow-up. I want to address each point clearly:
>
> 1. MVCIC demo was shared, and you cut it short.
> During the TPAC breakout session (Oct 2025), I began walking through the API-less orchestration demo. The recording shows you stopping it due to the five-minute slot: “OK, about time to stop. We now reached our third five-minute slot for recording.”
>
> That’s why you didn’t see the full run: there wasn’t enough time or interest allocated, and you cut me off as I was starting my explanation (to present nothing).
>
> You even acknowledge it here:
>
> "Inspired by Daniel's fast outputting AI powered machine, although still needing much refinement, jotted down some thoughts towards the possibility of AI powered web standards generator *possibly still science fiction, but since reality is moving so fast."
>
> https://lists.w3.org/Archives/Public/public-aikr/2025Nov/0006.html
>
> But then you never properly attributed my work. Please be honest: do not steal people's work this way, and stop dissembling, please.
>
> 2. The notebook has been open and duly credited since day one.
> As stated earlier, the NotebookLM is just a personal study aid with links to all original docs. You’ve had edit rights since it was created, and every entry cites the original source. I’ve now added a prominent disclaimer reminding readers that official contributions must go through the CG tools.
>
> Here's a screenshot, since you do not have time to click a link and read a phrase someone shares.
>
> 3. Attribution timeline matters.
> MVCIC (docs/multi_vibe_orchestration in the K3D repo) was first shared on Oct 9 (AIKR list) and Oct 30 (internal list).
> Your Human Process of Web Standards Generation doc is stamped Created Nov 10, 2025.
> The new “AI-Driven Web Standards Specification” CG was proposed Nov 17 and launched Nov 28 (see
> https://www.w3.org/community/blog/2025/11/17/proposed-group-ai-driven-web-standards-specification-community-group/
> and https://www.w3.org/community/aiwss/).
> Given that sequence, it’s hard to treat the AI-driven standards initiative as unrelated to work already discussed in AIKR.
>
> 4. MVCIC isn’t “outside scope.”
> MVCIC is documented with explicit KR vocabularies, reproducible workflows, and KR alignment notes already in the AIKR mailing list and wiki. If there are specific labels or formats you’d like, point me to them and I’ll adapt accordingly. The code itself lives in https://github.com/danielcamposramos/Knowledge3D/ under docs/multi_vibe_orchestration. It’s been public since October.
>
> 5. The LLM Council repo is newer.
> Karpathy’s repo (https://github.com/karpathy/llm-council) was published in late November, so it couldn’t have been the basis for your Nov 10 technical note. That further supports the need for proper attribution of earlier work already in this CG.
>
> I hope that clarifies the timeline.
> I’ll be happy to repost the MVCIC demo links (or run them live) if needed; just let me know if this time you’re available.
>
> Best,
> Daniel

Attachments

- image/png attachment: NwcozCTQ2B9kEn0y.png

- image/png attachment: O0tHJnlcGgkMKl0V.png

- image/png attachment: QQpBLXuOsVimhX09.png

Received on Thursday, 4 December 2025 11:47:25 UTC