- From: Paola Di Maio <paoladimaio10@gmail.com>

- Date: Sat, 29 Oct 2022 10:40:05 +0800

- To: Timothy Holborn <timothy.holborn@gmail.com>

- Cc: W3C AIKR CG <public-aikr@w3.org>, public-cogai <public-cogai@w3.org>

- Message-ID: <CAMXe=Sowaq0mKit=4ctY+uT_veYWKikwVNM=4pOgsPo6dK1G4A@mail.gmail.com>

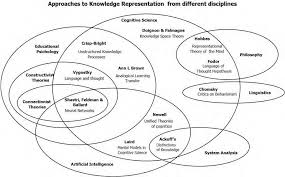

Hay Timothy and all This list is about KR in AI Your question is pertinent, but it has been answered in literature many many years ago [image: image.png] From A General Knowledge Representation Model of Concepts Carlos Ramirez and Benjamin Valdes Tec of Monterrey Campus Queretaro, DASL4LTD Research Group Mexico I personally start every talk and paper on AI KR precisely with this diagram, which serves to provide context (from Ramirez Valdez) file:///C:/Users/paola/Downloads/InTech-A_general_knowledge_representation_model_of_concepts.pdf KR is a big topic and it applies to many disciplines In AI, KR has a specific function /roles (as discussed in many books that it would be advisable to take sight of, since they answer many questions being raised here) KR has limitations, so does ML In my research, I identify novel roles for KR, that is, for example to expose deepfakes, and other things I cannot explain in a post (but that I can try to summarise in a webinar) What may be useful is to provide an reading list for people to familiarise themselves with the notions being discussed and problems being tackled I started one on the AI KR CG home page somewhere, needs updating I do teach a course that I may be able to offer as a MOOC in the future :-) Adeel, YES Brachman and Levesque, but so many others Adeel and Timothy, if you are interested, please contribute to the list of resources already started on the CG pages somewhere, you can also add references and your own annotations On Sat, Oct 29, 2022 at 10:06 AM Timothy Holborn <timothy.holborn@gmail.com> wrote: > Noted. > > https://en.wikipedia.org/wiki/Knowledge_representation_and_reasoning > > In terms of knowledge representation, for humanity, my thoughts have been > that it's about the ability for people to represent the evidence of a > circumstance in a court of law. If solutions fail to support the ability > to be used in these circumstances, to successfully represent knowledge - > which can be relied upon in a court of law; a circumstance that should > never be wanted, but desirable to support peace. > > Then, I guess, I'd be confused about the purposeful definion; or the > useful purpose of any such tools being produced & it's relationship, by > design, to concepts like natural justice. > > https://en.wikipedia.org/wiki/Natural_justice > > Let me know if I am actually "off topic" per the intended design outcomes. > > Regards, > > Timothy Holborn. > > On Sat, 29 Oct 2022, 11:55 am Paola Di Maio, <paoladimaio10@gmail.com> > wrote: > >> >> Just as a reminder, this list is about sharing knowledge, research and >> practice in AI KR, The intersection with KR and CogAI may also be relevant >> here (and of interest to me) >> >> If people want to discuss CogAI not in relation to KR, please use the >> CogAI CG list? >> What I mean is that: if KR is not of interest/relevance to a post, then >> why post here? >> >> What is KR, its relevance and limitations is a vast topic, written about >> in many scholarly books, but also these books are not adequately covering >> the topic, In that sense, the topic of KR itself, without further >> qualification, is too vast to be discussed without narrowing it down to a >> specific problem/question >> KR in relation to CogAI has been the subject of study for many of us for >> many years, and it is difficult to discuss/comprehend/relate to for those >> who do not share the background. I do not think this list can fill the huge >> gap left by academia, however there are great books freely available online >> that give some introduction . >> When it comes to the application of KR to new prototypes, we need to >> understand what these prototypes are doing, why and how. Unfortunately NN >> fall short of general intelligence and intellegibility for humans. >> >> Adeel, thank you for sharing the paper 40 years of Cognitive Architectures >> I am not sure you were on the list back then, but I distributed the >> resource as a working reference for this list and anyone interested in >> February 2021, and have used the resource as the basis for my research on >> the intersection AI KR/CogAI since >> https://lists.w3.org/Archives/Public/public-aikr/2021Feb/0017.html >> >> Dave: the topics KR, AI, CogAI and consciousness, replicability, >> reliability, and all the issues brought up in the many posts in this thread >> and other thread are too vast >> to be discussed meaningfully in a single thread >> >> May I encourage the breaking down of topics/issues making sure the >> perspective and focus of KR (including its limitations) are not lost in >> the long threads >> >> Thank you >> (Chair hat on) >> >> On Fri, Oct 28, 2022 at 6:23 PM Adeel <aahmad1811@gmail.com> wrote: >> >>> Hello, >>> >>> To start with might be useful to explore 'society of mind >>> <http://aurellem.org/society-of-mind/index.html>' and 'soar' as point >>> of extension. >>> >>> 40 years of cognitive architecture >>> <https://link.springer.com/content/pdf/10.1007/s10462-018-9646-y.pdf> >>> >>> Recently, Project Debater >>> <https://research.ibm.com/interactive/project-debater/> also came into >>> the scene. Although, not quite as rigorous in Cog or KR. >>> >>> Thanks, >>> >>> Adeel >>> >>> On Fri, 28 Oct 2022 at 02:05, Paola Di Maio <paoladimaio10@gmail.com> >>> wrote: >>> >>>> Thank you all for contributing to the discussion >>>> >>>> the topic is too vast - Dave I am not worried if we aree or not agree, >>>> the universe is big enough >>>> >>>> To start with I am concerned whether we are talking about the same >>>> thing altogether. The expression human level intelligence is often used to >>>> describe tneural networks, but that is quite ridiculous comparison. If the >>>> neural network is supposed to mimic human level intelligence, then we >>>> should be able to ask; how many fingers do humans have? >>>> But this machine is not designed to answer questions, nor to have this >>>> level of knowledge about the human anatomy. A neural network is not AI in >>>> that sense >>>> it fetches some images and mixes them without any understanding of what >>>> they are >>>> and the process of what images it has used, why and what rationale was >>>> followed for the mixing is not even described, its probabilistic. go figure. >>>> >>>> Hay, I am not trying to diminish the greatness of the creative neural >>>> network, it is great work and it is great fun. But a) it si not an artist. >>>> it does not create something from scratch b) it is not intelligent really, >>>> honestly,. try to have a conversation with a nn >>>> >>>> This is what KR does: it helps us to understand what things are and how >>>> they work >>>> It also helps us to understand if something is passed for what it is >>>> not *(evaluation) >>>> This is is why even neural network require KR, because without it, we >>>> don know what it is supposed >>>> to do, why and how and whether it does what it is supposed to do >>>> >>>> they still have a role to play in some computation >>>> >>>> * DR Knowledge representation in neural networks is not transparent, * >>>>> *PDM I d say that either is lacking or is completely random* >>>>> >>>>> >>>>> DR Neural networks definitely capture knowledge as is evidenced by >>>>> their capabilities, so I would disagree with you there. >>>>> >>>> >>>> PDM capturing knowledge is not knowledge representation, in AI, >>>> capturing knowledge is only one step, the categorization of knowledge >>>> is necessary to the reasoning >>>> >>>> >>>> >>>> >>>> >>>> >>>>> *We are used to assessing human knowledge via examinations, and I >>>>> don’t see why we can’t adapt this to assessing artificial minds * >>>>> because assessments is very expensive, with varying degrees of >>>>> effectiveness, require skills and a process - may not be feasible when AI >>>>> is embedded to test it/evaluate it >>>>> >>>>> >>>>> We will develop the assessment framework as we evolve and depend upon >>>>> AI systems. For instance, we would want to test a vision system to see if >>>>> it can robustly perceive its target environment in a wide variety of >>>>> conditions. We aren’t there yet for the vision systems in self-driving cars! >>>>> >>>>> Where I think we agree is that a level of transparency of reasoning is >>>>> needed for systems that make decisions that we want to rely on. Cognitive >>>>> agents should be able to explain themselves in ways that make sense to >>>>> their users, for instance, a self-driving car braked suddenly when it >>>>> perceived a child to run out from behind a parked car. We are less >>>>> interested in the pixel processing involved, and more interested in whether >>>>> the perception is robust, i.e. the car can reliably distinguish a real >>>>> child from a piece of newspaper blowing across the road where the newspaper >>>>> is showing a picture of a child. >>>>> >>>>> It would be a huge mistake to deploy AI when the assessment framework >>>>> isn’t sufficiently mature. >>>>> >>>>> Best regards, >>>>> >>>>> Dave Raggett <dsr@w3.org> >>>>> >>>>> >>>>> >>>>>

Attachments

- image/png attachment: image.png

Received on Saturday, 29 October 2022 02:42:10 UTC