- From: Adeel <aahmad1811@gmail.com>

- Date: Thu, 27 Oct 2022 12:17:05 +0100

- To: Paola Di Maio <paoladimaio10@gmail.com>

- Cc: Dave Raggett <dsr@w3.org>, W3C AIKR CG <public-aikr@w3.org>

- Message-ID: <CALpEXW3GomgHEgOt3q2C4sSr1yOMQT8mouxmE4LwyXcRjML0jw@mail.gmail.com>

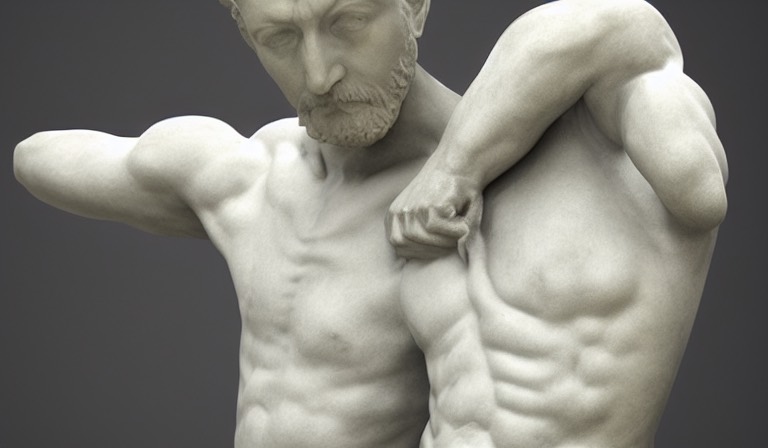

Hello, Do you agree with the concept of a bayesian brain then? I think if you start in the direction of quantum entanglement the conceptualization will need to take into account both space and time. Plausible reasoning on its own would not be sufficient. We also need to distinguish the abstraction between a mind vs brain. Thanks, Adeel On Thu, 27 Oct 2022 at 12:06, Paola Di Maio <paoladimaio10@gmail.com> wrote: > Thank you Dave, I hope we can address these issues during a panel > discussion > There is work to be done > > DR Knowledge representation in neural networks is not transparent, > *PDM I d say that either is lacking or is completely random* > > DR but that is also the case for human brains. > *PDM I d say that it is implici/.tacit* > > We are used to assessing human knowledge via examinations, and I don’t see > why we can’t adapt this to assessing artificial minds > because assessments is very expensive, with varying degrees of > effectiveness, require skills and a process - may not be feasible when AI > is embedded to test it/evaluate it > > This is what I d like to talk about, I may tweak my contribution to the > agenda as I have forgotten to mention some things, will email you separate > PDM > > > On Thu, Oct 27, 2022 at 5:39 PM Dave Raggett <dsr@w3.org> wrote: > >> >> On 27 Oct 2022, at 00:30, Paola Di Maio <paoladimaio10@gmail.com> wrote: >> >> I am suggesting that In order to evaluate and replicate /reproduce the >> (very fun) image outcome of Stable Diffusion he shared >> https://huggingface.co/spaces/stabilityai/stable-diffusion >> we need to know the exact prompt, we also need to repeat the prompt >> several times and find out whether the outcome is reproducible (I have been >> unable to reproduce dave's images- dave, pls send the prompts for us to >> play with) >> ... >> This brings me to the point that KR is absolutely necessary to >> understand/evaluate/reverse engineer/reproduce * that means make >> reliable *any type of reasoning in AI (*cogAI or otherwise) >> Avoiding KR is resulting in scaring absurdity in machine learning, >> (becauses of its power to distort reality, depart from truth). >> >> >> [dropping CogAI to avoid cross posting] >> >> Please note that text to image generators won’t generate the same image >> for a given prompt unless you also use the same seed for the random number >> generator, and furthermore use the exact same implementation and run-time >> options. >> >> The knowledge is embedded in the weights for the neural network models, >> which comes to around 4GB for Stable Diffusion. Based upon the images >> generated, it is clear that these models capture a huge range of visual >> knowledge about the world. Likewise, the mistakes made show the >> limitations of this knowledge, e.g. as in the following image that weirdly >> merges two torsos whilst otherwise doing a good job with details of the >> human form. >> >> >> Other examples include errors with the number of fingers on each hand. >> These errors show that the machine learning algorithm has failed to acquire >> higher level knowledge that would allow the generator to avoid such >> mistakes. How can the machine learning process be improved to acquire >> higher level knowledge? >> >> My hunch is that this is feasible with richer neural network >> architectures plus additional human guidance that encourages the agent to >> generalise across a broader range of images, e.g. to learn about the human >> form and how we have five fingers and five toes. A richer approach would >> allow the agent to understand and describe images at a deeper level. An >> open question is whether this would benefit from explicit taxonomic >> knowledge as a prior, and how that could be provided. I expect all this >> would involve neural network architectures designed for multi-step >> inferences, syntagmatic and paradigmatic learning. >> >> Knowledge representation in neural networks is not transparent, but that >> is also the case for human brains. We are used to assessing human knowledge >> via examinations, and I don’t see why we can’t adapt this to assessing >> artificial minds. That presumes the means to use language to test how well >> agents understand test images, and likewise, to test the images they >> generate to check for the absence of errors. >> >> I don’t think we should limit ourselves to AI based upon manually >> authored explicit symbolic knowledge. However, we can get inspiration for >> improved neural networks from experiments with symbolic approaches. >> >> Best regards, >> >> Dave Raggett <dsr@w3.org> >> >> >> >>

Attachments

- image/jpeg attachment: sculpture.jpg

Received on Thursday, 27 October 2022 11:17:29 UTC