- From: Timothy Holborn <timothy.holborn@gmail.com>

- Date: Wed, 11 May 2022 13:10:48 +1000

- To: Paola Di Maio <paoladimaio10@gmail.com>

- Cc: Adeel <aahmad1811@gmail.com>, Dave Raggett <dsr@w3.org>, ProjectParadigm-ICT-Program <metadataportals@yahoo.com>, public-cogai <public-cogai@w3.org>, W3C AIKR CG <public-aikr@w3.org>

- Message-ID: <CAM1Sok2x-LMpgwotkg60eNdh6T7tDFSPo8bqDcQBW_3uPwCMSg@mail.gmail.com>

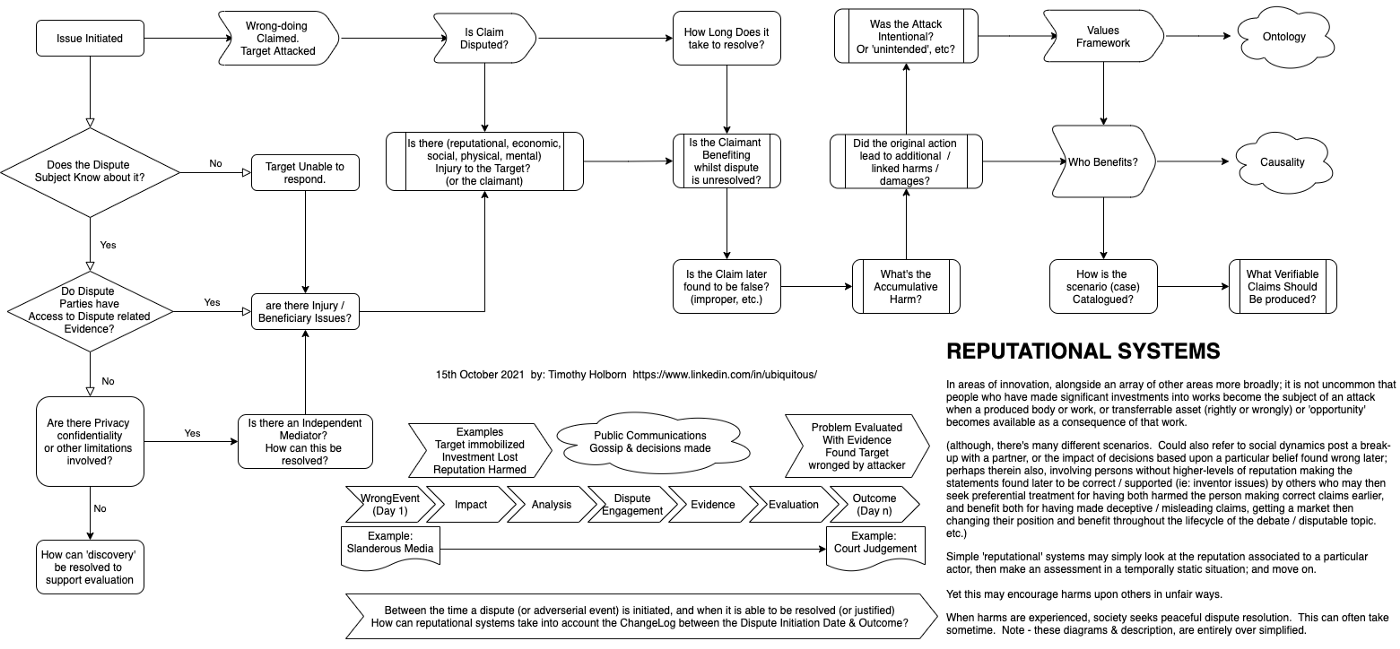

Haven't read the entire thread, but i thought https://en.wikipedia.org/wiki/Defeasible_reasoning might help - therein, an ontological context to support multi-modal objective concepts relating to a desire to create some sort of inspectable modularity re: assumptions, etc.?

I have some documents on Defeasible Logic here: https://drive.google.com/drive/folders/1pl6ESImD3OcFTE1R7wpuiq8xlF5SrtD5

Re: Natural Agents, the discussion with Chomsky: https://www.youtube.com/watch?v=iJ2vr2YnSGA and this one of Henry Stapp on Quantum Mechanics and Human Consciousness: https://youtu.be/ZYPjXz1MVv0 kinda link to concepts communicated by Jason Silva, such as ontological design: https://www.youtube.com/watch?v=aigR2UU4R20 & 'Captains of spaceship earth': https://www.youtube.com/watch?v=lHB_G_zWTbc - techno-social wormholes, or threaded parallel universes of consciousness built upon the temporal comprehensions of observers... not necessarily good; recently there were toilet-paper shortages due to the implications of AI on the 'status of observers'.

If we're going to democratise quantum computing (via linguistics / ontologies, etc.) - like bringing about mobile/desktop computing in an age of mainframes - it'll need to have a series of qualities that supports that type of future, imo. Therein - kinda important for sustaining democracies, rule of law, etc. I found this part of the interview with Kissinger useful: https://twitter.com/DemocracyAus/status/1521721199742832640

One of the inferences i'm evaluating, as i'm doing civics activities relating to our democracy / federal election - noting, we do American politics so poorly, we don't even have a president - yet the format of media is often very similar to solutions for democracies like America where you do have a president... But otherwise - there's been a lot of candidates who've stepped up from all sorts of different fields due to widely held concerns that are often not able to be discussed online.
Some candidates have had their online accounts terminated, and many appear to have graph rules applied to reduce distribution / visibility. This appears to relate to particular 'entity analysis' (topics) characteristics - and i also consider in this process the implication of 'beliefs' held by some and how that may result in various platform-based actions / reputation graph stuff; and what happens if those assumptions or beliefs are later found to be incorrect - that is, that people have been improperly judged by others (and systems) based upon a prior belief, before, say, the outcomes of court cases take effect.

This in turn relates to the graph i drew in the picture attached - but the linked concept i'm flagging is whether and/or how those sorts of software-based judgements are corrected against entities / accounts, if at all? Is the 'sentiment analysis' graph changed upon identification of a 'correction' and that person's 'digital persona character' boosted - to show they were 'fighting for right' if found to be the case later by a court? Or are their accounts permanently diminished / harmed, as a consequence of mistakes made by others (perhaps in foreign regions?), reducing their ability to be heard at elections or later in life regardless of whether or not their alerts were found to be accurate...

[image: 1_OD62QRiDXQd5mBdR_MiFpQ.png]

What are the documentation requirements of platforms to declare how algorithms are made to work? What factors that are like accessibility - but different - could be considered, to ensure 'interference' with the 'status of observers' is defined in a way that supports the core tenets of rule of law, democracy, access to justice - https://www.youtube.com/watch?v=pRGhrYmUjU4 Star Trek reference - 'the right to face one's accuser directly' https://www.youtube.com/watch?v=qs0J2F3ErMc

Causality, temporal considerations & the ability to distinguish between bad / hostile actors (acts of violence, war, etc.) vs. mistakes is fairly important for CyberPeaceFair (NB, whilst 'FAIR' is employed for various reasons, one of them is: https://joinup.ec.europa.eu/collection/oeg-upm/news/fair-ontologies ), particularly for platforms as the business case for class action litigation mounts, as it becomes clearer that options were available but elected not to be applied, deployed or acted upon due to some sort of commercial rationale.

The deliberations made in https://www.theatlantic.com/magazine/archive/1945/07/as-we-may-think/303881/ are still relevant, imo. The productivity yields brought about by a 'knowledge age' https://medium.com/webcivics/a-future-knowledge-age-2e3f5095c67 are going to be enormous, imo. Conversely, those stuck in an 'information age', unable to address 'digital slavery' in its many forms, are not going to be equipped to do even the most basic functions, so well established in the past in environments like our libraries. Without being able to understand what is part of the 'fiction' vs. 'non-fiction' section, the needs of people are made mute.

In the 'human centric' concepts relating to the early days of credentials - the concept was that decisions always come down to human or natural entities. I've seen the 'human centric' concept used in major publications, but it appears to have been redefined or misunderstood. Computers / software don't pay compensation if they've been engaged in wrongs. afaik, corporation comes from Latin? originally meaning 'group of persons' --> persona ficta.

Ethical finance concepts shun usury; and whilst the concept seems to be poorly supported nowadays, and perhaps that doesn't impact some social groups, it is seemingly quite important to others around the world - so, to form appropriate global standards, labelling similar to food (ie: halal, kosher, etc.) is a feasible possibility, particularly as the business systems advance via Web3 (the DLT / DID stuff, as distinct from the old Web 3.0 stuff) influences; whether it be globally or inter-nationally (per Kissinger comments noted above via link).

With other fields of science, people are able to perform the same experiments and do the tests themselves before they're expected to agree. I'm not sure how that type of thing is supported with AI implications, yet.

hope this helps.

timo.

On Wed, 11 May 2022 at 12:10, Paola Di Maio <paoladimaio10@gmail.com> wrote:

> Well, even simply language and communication and the simplest reasoning require logic of course, nobody can dis that.
> But
> a) logic itself depends on other things being true, such as fact checking. There was a great example once on the ontolog list, where first-order logic was being demonstrated (if fred is a bat and bats are birds, then fred is a bird) that turned out a wrong answer because the premise was false (bats are not birds but mammals). It was a good lesson, showing that the logic is right but the fact is wrong.
>
> b) there is probably a lot more than logic to achieve perfection. I posted an example on this list about the dying woman who, as a last wish, asked someone to take her urine to the first person who entered the gate in such and such place, who then saved her life.
> Such a request may not sound logical at first, but probably her intuition was greater than anybody's logic could have been, which became apparent at the end (the first person who crossed the gate was a doctor who figured out the poison).
> Here is the post: https://lists.w3.org/Archives/Public/public-aikr/2021Feb/0012.html
>
> I don't know if that is what DR intended in his reply, but I would agree that even logic rests on correct and relevant facts, and possibly other things.
>
> PDM
>
> On Wed, May 11, 2022 at 3:12 AM Adeel <aahmad1811@gmail.com> wrote:
>
>> Logic is testable and does take prior knowledge into account, precisely what the inferencing process does through reasoning. You can have prior knowledge persisted in a KG that is always evolving via inference. The representation is machine-readable as well. In statistics you are likely to have more uncertainties and inconsistencies in rigid models that are overfitted to the data, where you are essentially dealing in half-truths of false-positives and false-negatives. Each time you repeat the same iteration you get a different output. Humans are perfectly rational without having to rely on statistics. In fact, logic can be formally tested via constraints. Statistics at most can be evaluated. On top of which you then need to build an explainability model for it.
>>
>> If you think logic is overrated then, how do you think the PC you are using came about? How do you think programming languages came about? Even your statistical model will rely on logics to process it, which all hardware devices use and rely on.
>>
>> On Tue, 10 May 2022 at 11:11, Dave Raggett <dsr@w3.org> wrote:
>>
>>> With all due respect to Spock, logic is overrated as it fails to consider uncertainties and has problems with inconsistencies; moreover, it doesn’t take the statistics of prior knowledge into account.
>>> Logic is replaced by plausible reasoning based upon rational beliefs, and taking care to avoid cognitive biases.
>>>
>>> On 10 May 2022, at 05:06, Paola Di Maio <paoladimaio10@gmail.com> wrote:
>>>
>>> Adeel and all
>>>
>>> It must be logically correct, however logic must be built on a model that is reasonably complete (for some purpose)
>>> In the sense that i aim to create say, perfect knowledge about something (or anything), unless the model is representative (adequate) the logic (logical model) may not be complete
>>> that actually could be wrong (not adhere to truth)
>>>
>>> Even a logical correctness must be complete to be adequate
>>>
>>> You can have a correct logic that is not adequate and far from perfect (many policies are logically correct but do not cover the domain)
>>>
>>> Perfect knowledge, in the sense of knowledge that mirrors truth without cognitive / subjective ileterin can be neither complete nor correct and still be true.
>>>
>>> Just sayin.
>>>
>>> Maybe the notion of perfect knowledge has to be functionally defined to be able to exchange meaningfully about it.
>>>
>>> On Mon, May 9, 2022 at 6:45 PM Adeel <aahmad1811@gmail.com> wrote:
>>>
>>>> No, perfection implies logical correctness within the defined constraints of representation, but under the open-world assumption, it should not imply completeness.
>>>>
>>>> On Mon, 9 May 2022 at 11:30, Paola Di Maio <paoladimaio10@gmail.com> wrote:
>>>>
>>>>> Does Perfection imply completeness?
>>>>> Discuss
>>>>>
>>>>> On Mon, May 9, 2022 at 2:47 PM ProjectParadigm-ICT-Program <metadataportals@yahoo.com> wrote:
>>>>>
>>>>>> If perfect implies complete, we can rule it out because of the proofs by Gödel and Turing on incompleteness and undecidability.
>>>>>> The concept of perfect only exists in mathematics with the definition of perfect numbers.
>>>>>> Unbiased reasoning that leads to results for which truth values can be determined in terms of validity, reproducibility, equivalence and causal relationships is the best way to go for knowledge representation.
>>>>>> Knowledge and for that matter consciousness as well are as yet not unequivocally defined, and as such perfection in this context is not attainable.
>>>>>>
>>>>>> Milton Ponson
>>>>>> GSM: +297 747 8280
>>>>>> PO Box 1154, Oranjestad
>>>>>> Aruba, Dutch Caribbean
>>>>>> Project Paradigm: Bringing the ICT tools for sustainable development to all stakeholders worldwide through collaborative research on applied mathematics, advanced modeling, software and standards development
>>>>>>
>>>>>> On Sunday, May 8, 2022, 04:15:01 AM AST, Paola Di Maio <paola.dimaio@gmail.com> wrote:
>>>>>>
>>>>>> Dave R's latest post to the cog ai list reminds us of the ultimate. Perfect knowledge is a thing. Is there any such thing, really? How can it be pursued?
>>>>>> Can we distinguish perfect knowledge from its perfect representation?
>>>>>>
>>>>>> Much there is to say about it. In other schools, we start by clearing the obscurations in our own mind. That is a lifetime pursuit.
>>>>>> While we get there, I take the opportunity to reflect on the perfect knowledge literature in AI, a worthy topic to remember
>>>>>>
>>>>>> If someone would like to access the article below, email me, I can share my copy
>>>>>>
>>>>>> ARTIFICIAL INTELLIGENCE 111
>>>>>> Perfect Knowledge Revisited*
>>>>>> S.T. Dekker, H.J. van den Herik and I.S. Herschberg
>>>>>> Delft University of Technology, Department of Mathematics and Informatics, 2628 BL Delft, Netherlands
>>>>>>
>>>>>> ABSTRACT
>>>>>> Database research slowly arrives at the stage where perfect knowledge allows us to grasp simple endgames which, in most instances, show pathologies never thought of by Grandmasters' intuition. For some endgames, the maximin exceeds FIDE's 50-move rule, thus precipitating a discussion about altering the rule. However, even though it is now possible to determine exactly the path lengths of many 5-men endgames (or of fewer men), it is felt there is an essential flaw if each endgame should have its own limit to the number of moves. This paper focuses on the consequences of a k-move rule which, whatever the value of k, may change a naive optimal strategy into a k-optimal strategy which may well be radically different.
>>>>>>
>>>>>> 1. Introduction
>>>>>> Full knowledge of some endgames involving 3 or 4 men has first been made available by Ströhlein [12]. However, his work did not immediately receive the recognition it deserved. This resulted in several reinventions of the retrograde enumeration technique around 1975, e.g., by Clarke, Thompson and by Komissarchik and Futer. Berliner [2] reported in the same vein at an early date as did Newborn [11]. It is only recent advances in computers that allowed comfortably tackling endgames of 5 men, though undaunted previous efforts are on record (Komissarchik and Futer [8], Arlazarov and Futer [1]). Over the past four years, Ken Thompson has been a conspicuous labourer in this particular field (Herschberg and van den Herik [6], Thompson [13]).
>>>>>>
>>>>>> *The research reported in this contribution has been made possible by the Netherlands Organization for Advancement of Pure Research (ZWO), dossier number 39 SC 68-129, notably by their donation of computer time on the Amsterdam Cyber 205. The opinions expressed are those of the authors and do not necessarily represent those of the Organization.
>>>>>> Artificial Intelligence 43 (1990) 111-123
>>>>>> 0004-3702/90/$3.50 © 1990, Elsevier Science Publishers B.V. (North-Holland)
>>>>>>
>>>>>> As of this writing, three 3-men endgames, five 4-men endgames, twelve 5-men endgames without pawns and three 5-men endgames with a pawn [4] can be said to have been solved under the convention that White is the stronger side and Black provides optimal resistance, which is to say that Black will delay as long as possible either mate or an inevitable conversion into another lost endgame. Conversion is taken in its larger sense. It may consist in converting to an endgame of different pieces, e.g., by promoting a pawn; equally, it may involve the loss of a piece and, finally and most subtly, it may involve a pawn move which turns an endgame into an essentially different endgame: a case in point is the pawn move in the KQP(a6)KQ endgame converting it into KQP(a7)KQ (for notation, see Appendix A).
>>>>>> The database, when constructed, defines an entry for every legal configuration; from this, for each position, a sequence of moves known to be optimal can be derived. The retrograde analysis is performed by a full-width backward-chaining procedure, starting from definitive positions (mate or conversion), as described in detail by van den Herik and Herschberg [18]; this yields a database.
>>>>>> The maximum length of all optimal paths is called the maximin (von Neumann and Morgenstern [16]), i.e., the number of moves necessary and sufficient to reach a definitive position from an arbitrary given position with White to move (WTM) and assuming optimal defence
>>>>>>
>>>>>> CONCLUSION
>>>>>> It has now become clear that the notion of optimal play has been rather naively defined so far. At the very least, the notion of optimality requires a specific value of k for k-optimality and hence a careful bookkeeping of all relevant anteriorities. These additional requirements form but one instance of aiming to achieve optimal play under constraints; of such constraints a k-move rule is merely one instance. In essence, it is not our opinion that a k-move rule spoils the game of chess; on the contrary, like any other constraint, it may be said to enrich it, even though at present it appears to puzzle database constructors, chess theoreticians and Grandmasters alike.
>>>>>>
>>> Dave Raggett <dsr@w3.org> http://www.w3.org/People/Raggett
>>> W3C Data Activity Lead & W3C champion for the Web of things

Attachments

- image/png attachment: 1_OD62QRiDXQd5mBdR_MiFpQ.png

Received on Wednesday, 11 May 2022 03:11:52 UTC