- From: Chris Rogers <crogers@google.com>

- Date: Fri, 30 Jul 2010 12:57:27 -0700

- To: public-xg-audio@w3.org

- Cc: Chris Marrin <cmarrin@apple.com>

- Message-ID: <AANLkTi=b+ub5-Ysu617zmKuc4zfZjiCmMD=TBFHe2NNR@mail.gmail.com>

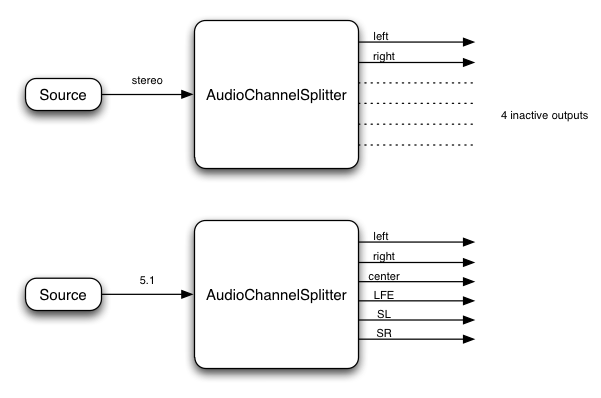

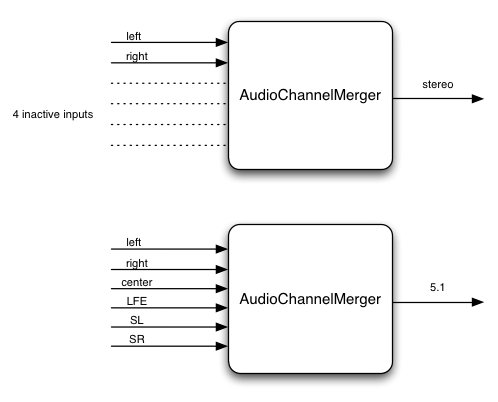

Chris Marrin had asked about getting access to individual channels. I've added AudioChannelSplitter and AudioChannelMerger to my proposal document:

http://chromium.googlecode.com/svn/trunk/samples/audio/specification/specification.html

I've also implemented them and checked the code in to the WebKit audio branch. These two types of AudioNode may be used together to implement interesting kinds of multi-channel matrix mixing or virtualized down-mixing. I've also created a "ping-pong delay" demo (not yet checked in) to illustrate other possible uses.

The more detailed description and diagrams are in the proposal document, but here's the basic idea:

*AudioChannelSplitter:*

[image: channel-splitter.png]

*AudioChannelMerger:*

[image: channel-merger.png]

The controversial part may be my proposal that there be a fixed number of inputs and outputs, which will depend on the highest number of channels supported (6 channels for 5.1 in this case). This exact upper limit is flexible, though, and could be increased to support arbitrary numbers of channels (7.2 and higher). Still, I wanted to avoid cases where the number of outputs of AudioChannelSplitter would change dynamically depending on what is connected to it, since this could create problems if outputs that are connected to other things suddenly disappear in the middle of processing. With a fixed number of outputs, the irrelevant (inactive) outputs would simply output silence if they're connected to anything at all...

Chris
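To make the fixed-input/output idea concrete, here is a minimal TypeScript sketch that models the two nodes as plain functions over sample buffers. It is not the proposed browser API or the WebKit code: `AudioBlock`, `MAX_CHANNELS`, `splitChannels`, and `mergeChannels` are hypothetical names, and the merger's behavior (concatenating the channels of connected inputs in input order, skipping unconnected ones) is an assumption for illustration. It shows the splitter always exposing six outputs, with the ones past the input's channel count carrying silence, and a simple matrix routing that swaps left and right.

```typescript
// Hypothetical model, not the real API: an audio block is an array of
// channels, each a Float32Array of samples.
type AudioBlock = Float32Array[];

// Fixed limit from the proposal: 6 channels, enough for 5.1.
const MAX_CHANNELS = 6;

// AudioChannelSplitter model: one input, always MAX_CHANNELS mono outputs.
// Outputs with no matching input channel emit silence instead of vanishing,
// so downstream connections stay valid if the input's channel count changes.
function splitChannels(input: AudioBlock, frames: number): AudioBlock[] {
  const outputs: AudioBlock[] = [];
  for (let i = 0; i < MAX_CHANNELS; i++) {
    const mono =
      i < input.length
        ? Float32Array.from(input[i])
        : new Float32Array(frames); // zero-filled buffer = silence
    outputs.push([mono]);
  }
  return outputs;
}

// AudioChannelMerger model: at most MAX_CHANNELS input slots, one output.
// Assumption: the output carries the channels of each connected input,
// concatenated in input order; unconnected (null) slots are skipped.
function mergeChannels(inputs: (AudioBlock | null)[]): AudioBlock {
  if (inputs.length > MAX_CHANNELS) {
    throw new Error(`merger has only ${MAX_CHANNELS} inputs`);
  }
  const output: AudioBlock = [];
  for (const block of inputs) {
    if (block !== null) {
      for (const channel of block) output.push(Float32Array.from(channel));
    }
  }
  return output;
}

// Usage: swap left and right of a stereo block by cross-wiring
// splitter outputs 0 and 1 into merger inputs 1 and 0.
const frames = 4;
const stereo: AudioBlock = [
  new Float32Array([0.1, 0.2, 0.3, 0.4]), // left
  new Float32Array([0.5, 0.6, 0.7, 0.8]), // right
];
const split = splitChannels(stereo, frames);
const swapped = mergeChannels([split[1], split[0], null, null, null, null]);
console.log(split.length); // 6
console.log(swapped.length); // 2
console.log(split[5][0].every((s) => s === 0)); // true
```

Because the splitter's output count never changes in this model, the cross-wiring above would stay valid if the source later switched from stereo to 5.1; the extra outputs would simply start carrying signal instead of silence.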

Attachments

- image/png attachment: channel-splitter.png

- image/png attachment: channel-merger.png

Received on Friday, 30 July 2010 19:58:01 UTC