- From: Jeremy Tandy <jeremy.tandy@gmail.com>

- Date: Wed, 24 Feb 2016 13:17:48 +0000

- To: Simeon Nedkov <s.nedkov@geonovum.nl>, public-sdw-comments@w3.org

- Message-ID: <CADtUq_2e22Ee90KbO+5XgsLfskHziX1pOjuFhpWm9mCT4jmR6Q@mail.gmail.com>

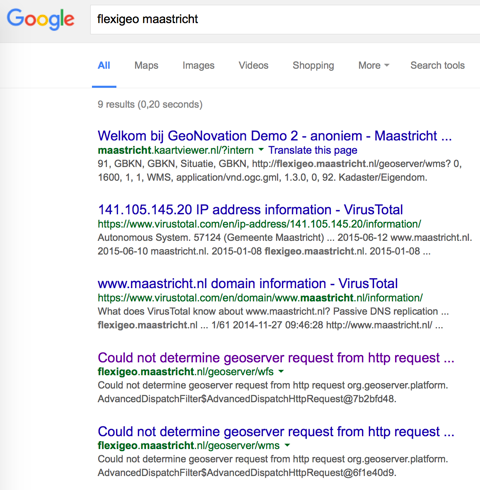

Hello Simeon. First of all, many thanks for your detailed analysis of the best practices FPWD. Your input is very much appreciated. We, the editors, are planning to publish the next Working Draft release of the best practices around end of April 2016. During the interim period we will work through your analysis and respond in detail, where appropriate indicating how the revised publication will address your issues and concerns. Best regards, Jeremy On Sat, 13 Feb 2016 at 10:49 Simeon Nedkov <s.nedkov@geonovum.nl> wrote: > Dear list, > > I read the SDW best practices with great interest in part due to my > involvement in Geonovum’s Spatial Data on the Web testbed, but primarily > due to my interest and experiences in getting non-expert web developers to > use open geo data. My compliments for the work performed thus far! > > Below I provide a number of general observations regarding the best > practices (BP). I do this from my background as a Geo-IT consultant and web > developer with a passion for open data, open/agile government and civic > hacking. I’ve participated in many open data hackathons and challenges > where I witnessed firsthand the struggles and difficulties that open data > hackers, web developers and other non-geo experts encounter when dealing > with the W*S family of standards, SDIs and geographical information in > general. While many problems arise due to technical difficulties and > shortcomings, an equal amount stem from the unfavourable developer > experience that many geo-related services/standards offer: bad > documentation, lack of examples, cumbersome tooling, heavy portals that > require geo-jargon, etc. Hence I was looking forward to the SDWBPs and am > very happy for the opportunity to contribute to what might be the next step > in geo on the web. > > In the following a — (double dash) signifies a section and a - (single > dash) signifies a remark. > > > — Problem statement and purpose > - The aim of the Best Practices (BP) is to improve discoverability and > accessibility of geodata. It is, however, not clear (in a structured way) > what is wrong with the current state of affairs. While problems and > challenges are mentioned throughout the text, it is difficult to distill an > organized and structured overview of the issues. The document does not > explicitly state why geo data is difficult to find and access in the > current SDI-dominated situation and why/how the proposed solution (Linked > Data) will improve the situation, especially for developers not versed in > geo/linked data. This makes the connection between the stated goal and the > proposed solution (“this document focuses on the content of the spatial > datasets: how to describe and relate the individual resources and > entities”) unclear and difficult to make. In short: the document is missing > a clear analysis of sorts that links the identified problems to the > proposed Linked Data (LD) solutions. In other words: 1) how were the > requirements in section 5 of SDW-URC selected and 2) how are the BPs in > this document related to them? > * Suggestion: clearly identify what the current acces/discover problems > are from the stated audience’s point of view and why/how Linked Data is > going to solve these. In terms of presentation: explicitly link to the data > discover/access problems identified in DWBP and make clear how this > documents relies on and/or expands them. Best way to do this, IMHO, is to > extend the second image (why can’t I link to it? ;) ) in section 5 (why > can’t I link to it? ;) ) issues/problems challenges that are specific to > geo. > > - The best practices (BP) are derived from an exhaustive list of use cases > and requirements. This is a great approach as it ensures that the BPs are > addressing real-world needs. This does not, however, mean that the document > address real world problems that prevent, as we speak, users from finding, > accessing and using data. I found myself wondering (numerous times) “which > concrete problem does this BP solve/address?” In addition, by listing needs > instead of challenges/problems there is risk of trying to be everything at > once/cover all edge cases which is one of the posed critiques of SDIs. The > document states that "there are some questions around publishing spatial > data on the Web that keep popping up and need to be answered.” What are > these questions? > Suggestion: link each BP explicitly to the use case(s) that > defined/justified it in SDW-UCR and to the corresponding DWBP problem/BP. > This will go a long way towards exposing the missing analysis/linkage > between the identified problems and proposed solutions. Explicitly > enumerate the "questions that keep popping up" and link them to the > proposed BPs. > > -Although each BP stems from a use case, the document as a whole reads as > a prescription for publishers how to publish (Linked) data. It does not > provide (detailed) observations about how data is currently/actually used > by the target audiences and what problems they run into in their day-to-day > (grunt) work. The jump from the problem statement to the proposed solution > (LD) is sudden and the reasoning unclear. The claim that “… the Linked > Data <http://www.w3.org/standards/semanticweb/data> approach is most > appropriate for publishing and using spatial data on the Web” in the face > of many other data dissemination initiatives (see below) is difficult to > defend. The BPs read therefore as a LD manifesto. This is perfectly fine > but does not fit the title which, in that case, should be Linked Spatial > Data on the Web. > * Suggestion: make an inventory of concrete problems that users run into > related to the stated problem (i.e. accessibility and discoverability), > link these to the proposed LD concepts and include them and the analysis in > the description of each BP as e.g. “this BP solves real-world problems x, > y, z through LD concepts x, y, z“. For bonus points: investigate and > mention under what constraints each target audience group is working. E.g. > web developers need snappy APIs; they often can’t/won’t wait for WFS/SPARQL > endpoints to deliver. In addition, more and more data processing is > happening in the browser (see e.g. Mapbox’ Turf.js library -> powerful GIS > operation in the browser) which diminishes the need for smart endpoints. A > "flat” API that delivers (cached) data is all they need. > > - The document on several occasions “blames” the complexity and steep > learning curve of SDIs for the current lack of data usage by web developers > and other geo novices. Yet, for many web residents LD is as complex and > cumbersome as the SDI paradigm. Aren’t you replacing one complexity with > another? And if so, on what grounds? Again: which concrete problems does LD > address? How do these LD solutions compare to other data dissemination > strategies? For instance, the steep learning curve of SDIs is partly caused > by extremely developer unfriendly documentation (e.g. the WMS spec contains > the word ‘example’ once, at the very end). How is LD/the proposed BPs going > to solve these kinds of problems? > Suggestion: list the problems that users run into when searching for data > in the current SDI-dominated landscape and “justify” the deployment of LD > to tackle those (in the face of all the other data dissemination platforms). > > - One of the stated major problems with the current geo web landscape is > the lack of WFS endpoints. It is unclear how the proposed solution (LD) > will improve the availability of vector data on the web. What are the the > causes for the lack of WFS endpoints? How is LD going to address them? What > if the causes are not technological but organisational? > > — More focus on people, community, tooling, ecosystems and the social > aspect in general > - Establishing a standard/best practices requires, in addition to great > BPs/spec/manifesto (this document), tooling, documentation, a community, > evangelism a.o. In other words: it needs an ecosystem. Having the data out > there is a great first step but often times more is needed to actually make > it useful to people. OpenStreetMap’s ecosytem is a great example: they have > extensive documentation, several tools for editing data, several tools and > APIs for downloading data, plugins for QGIS/Leaflet, tools for transforming > OSM data to other formats and structures (e.g. PostGIS), routing libraries > and big players (Mapbox, Mapzen) that root for and use the data. There are > many communities one can turn to for help. In short: OSM offers a great > ecosystem and developer experience (DX) i.e. how easy/difficult is it to > get started using the goods. > While I realize that comparing these BPs to OSM’s ecosystem is > unrealistic, paying more attention to its properties and affordances and > translating them to this doc is important. A great example of a new > initiative seeking for developer adoption is Google’s effort to establish > Web Components as a standard. To do this they’ve checked several things off > the DX list: a dedicated project (Polymer [1]) that has a flashy web page, > documentation, extensive examples and tooling in the form of a developer > kit, online presence, etc. The core developers Tweet, blog, vlog, etc. > regularly about advancements and uses. They’ve realised a lively > conversation on their GitHub issue tracker. In short, they are working hard > on achieving high DX and creating an ecosystem. Again: Google has unlimited > resources to do this; I simply want to create awareness that these things > are important. Doing stuff on the modern social web means you need to > address the social part as much as you address the web/tech part. > * Suggestion: What community/ecosytem BPs can be distilled from > OSM/Polymer in the light of Spatial Data on the Web? > > -The same can be said about data publishers. If a standard, tech or a set > of BPs are a pain to implement and maintain because of a weak ecosystem > many will be reluctant to do so. What BPs address the process of LD > publishing? > > — Link to Data on the Web Best Practices document > - It is unclear to what extent the SDWBP will implement the best practices > outlined in DWBP e.g. is SDWBP also going to provide bulk data download? > What will happen to best practices in DWBP that are not explicitly > mentioned in SDWBP. > * Suggestion: explicitly mention which DWBP will be implemented by SDWBP > and include them in the document as own entities with a note stating e.g. > “this BP is inherited from DWBP”. > > — Modernize existing infrastructure > - This document discards the current SDI-dominated situation. The reality > is, however, that OGC’s standards and the SDI mindset aren’t going to go > away anytime soon. New datasets (by new data providers) will continue to be > published as WMS/WFS even though these have well-known shortcomings. While > Linked Data is a great concept, many organisations have invested heavily in > the “traditional” SDI paradigm or are obliged by law to stick to it and > will not be moving to LD anytime soon. In addition, a number of them flat > out refuse to think about the topic as “it is not required by INSPIRE/the > standard/the law”. > * Suggestion: make an inventory of the common legacy problems that > degenerate developers’ experience when working with OGC’s standards. Draft > BPs to address those or, at the very least, mention them in passing (keep > an eye on Geonovum’s SDW testbed). In the best case: submit change requests > to OGC to alleviate the shortcomings. If there are no plans to accommodate > “legacy” infrastructure I’d suggest the document’s title be changed to > Linked Spatial Data on the Web. > > - Modernizing does per se entail implementing Linked Data. E.g. spatial > data need not be linked in order to make it crawlable by search engines. > Google already indexes OWS services, see the links on the bottom of the > attached image. However, it can’t do much with the non-descriptive error > message that the endpoints return when e.g. the query parameters are > omitted. A simpler (?) solution is therefore to return an HTML document > that e.g. lists the contained data, shows a map, declares semantics through > JSON-LD, etc. The former has the added benefit of making the endpoint > legible for developers. > > — Link to other initiatives > - Although the stated use cases and requirements are numerous, the > document proposes a one solution/paradigm (LD) in the face of all the other > (community driven) solutions, e.g. http://dat-data.com/, > http://data.okfn.org, http://plenar.io/, Socrata and CKAN but also the > OpenStreetMap ecosystem. These initiatives are not referred to, even in > passing, which makes the document feel isolated and detached from reality > (especially when some of the initiatives have serious mileage e.g. CKAN and > OpenStreetMap and are de facto standards) and the web community. Reflecting > on these and discussing their strengths and weaknesses in relation to the > LD BPs proposed in this document is essential. Without an analysis or at > the very least a comparison this document reads as a Linked Data manifesto. > In other words, it risks being a prescription for how the web should be > instead of a vehicle for describing how the web _is_ by surfacing > well-estbalished best practices. Again, this is perfectly fine but does not > fit the title. > * Suggestion: refer to other data dissemination initiatives, at least in > passing, and discuss why LD is a better solution to the problem (what is > the problem actually?). Why should users buy into the proposed approach > when e.g. downloading any type of data from OSM and consuming it in their > favourite tool is much easier and, more importantly, already works? > > — Producing Linked Data > - The Linked Data paradigm promises great interoperability once datasets > are harmonised and linked. The question is, however: who is going to > harmonise and link these datasets? Stories from the trenches tell of > strained government bodies that have limited resources to do proper/any > data management. Harmonisation processes take a lot of time and are prone > to failure as parties sometimes fail to agree on a harmonisation strategy > and outcome. Then there are the compromises: harmonised sets that are > semantically poor due to the reached consensus. In other words: linked data > does not produce itself; people are. What best practices can you provide > that reduce the (social) cost of producing LD? Does e.g. the data need to > be acquired in a different manner in order to make linking easier? > * Suggestion: provide BPs for harmonising and linking data. Identify which > bottlenecks exist in the data preparation and linking step and list > possible solutions and strategies. > > — Ordering of BPs > - How are the BPs ordered? Do they have weights/priorities? Suppose an > organisations has limited resources, which ones should they implement for > sure? For instance, BP27 advises to “publish data at the granularity you > can support" and "As a minimum, it should be possible for users to download > data on the Web as a single resource; e.g. through bulk file formats.” So, > an org that plans to go for the bulk download approach needs not to look at > most (if not all) of the other BPs. Hence, should B27 be the first BP they > encounter? > > — Terminology > - State clearly what is meant by discoverability and accessibility. These > terms mean different things to different users and contexts. Also, each > user group attaches its own affordances to them and expects them to behave > differently E.g. while some are avid consumers of WFS, most open data > hackers and developers are more than happy with a flat CSV. > * Suggestion: list the affordances that each group in the Audience section > attaches to discoverability and accessibility and try to match the BPs as > much as possible to them. > > - Define what is meant by web technologies: for the authors of this > document these are the Linked Data paradigms, for others it’s HTML5, AJAX, > responsive web design, etc. > > - The word mashable is not used anymore. People on the web talk about web > apps, interactive visualisations and data science, data-driven journalism, > maps, etc. > > — BP7: “Keep geometry descriptions to a size that is convenient for Web > applications.” > Remark: this needs to be quantified. How big is too big? Also, what > strategies are there to achieve this and how do they relate to the Linked > Data approach? How would e.g. vector tiles be implemented in the LD > paradigm? > > — BP24: “... software agents to explore rich and diverse content without > the need to download a collection of datasets for local processing in order > to determine the relationships between resources.” > Remark: software agents that automatically explore linked data such as > Cortana, Siri, Google Now, etc. are in essence a separate user type and > should be added to the Audience section as such. > > — BP28: “... but rather than adapting the WFS (GML) output, it may be more > effective to provide an alternative 'Linked Data friendly' access path to > the data source” > Remark: make sure to use a developer-friendly Linked Data dissemination > method. The purpose of a convenience API is defeated if it uses unpopular > standards. > > — Section 6.4 enabling discovery > Remark: how should data be found that is, for whatever, reason, not > indexed by a popular search engine? Is a catalog still needed? > > With kind regards, > Simeon > >

Attachments

- image/png attachment: Screenshot_2016-02-09_09.42.41.png

- image/png attachment: 02-Screenshot_2016-02-09_09.42.41.png

Received on Wednesday, 24 February 2016 13:18:29 UTC