- From: Christina Noel <CNoel@musicnotes.com>

- Date: Thu, 21 Apr 2016 16:06:52 +0000

- To: "public-music-notation-contrib@w3.org" <public-music-notation-contrib@w3.org>

- Message-ID: <9b618cf38f694d2c9a79cd02a94d3168@MNMail01.musicnotes.com>

Hello, James, Joe, (and others)!

I have been behind in keeping up with what’s going on in this community, and I want to speak from the point of view of a programmer who would have to interpret the CONTRACT in order to make a program that can read or write the INSTANCE that is being passed between two applications.

In the first place, whatever encoding we produce must be able to contain both the graphical and temporal data in one document. I’ve seen several parts of this discussion talk about using SVG+MIDI, which is fine for the output of a PARSING_APP or the input of the AUTHORING_APP, but should not be used for the INSTANCE. Whatever is used for INSTANCE, it needs to be self-sufficient in a single file because otherwise you end up with read/write problems: What if you lose one of the files, or are only given one? What if you have both files, but one is from a previous version of the music?

I love the concept of a Performable Notation you presented here, and the simplified object schema that goes with it. That makes perfect sense to me as a programmer, and is a very useful separation for those of us who just want the graphical stuff (score:object) or just want the audible stuff (score:event/score:durationSymbol):

* a score:object is perceptible in space. It therefore has non-zero width, non-zero height, and a defined position (x, y) on a page in a score. score:objects can nest.

* a score:event is perceptible in time. It therefore has a duration greater than or equal to 1ms and a defined position (msPosition) with respect to the beginning of a performance. score:events can nest.

* a score:durationSymbol is a container that has a duration.

* a score:chordSymbol is a score:durationSymbol containing a score:object and one or more score:events.

* a score:restSymbol is a score:durationSymbol that contains a score:object but no score:event.

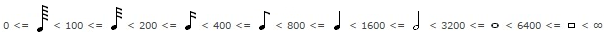

However, I’m not sure about your timing graphic:

------------------------------------------

James says:

But I don't use tuplets. Duration classes are defined as bandwidths. For example, like this (durations in milliseconds)

[Attached image: image001.png]

------------------------------------------

I’m not sure what that means. Is that just a convenient wrapper for mapping beats to note symbols, or does it really mean milliseconds, ignoring any tempo specification? What happens when you need triplets, which don’t fit those definitions? (For triplets to be represented exactly, the whole-note count needs to be a multiple of 3.)
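To make the triplet arithmetic concrete: if a whole note is 2000 ms, a crotchet is 500 ms and a triplet quaver would be 500/3 ≈ 166.67 ms, which is not a whole number of milliseconds. The sketch below shows one possible way to keep integer durations that still add up exactly. The function name `split_evenly` is an assumption for illustration, and nothing here is claimed to be how Moritz handles the problem.

```python
from typing import List


def split_evenly(total_ms: int, parts: int) -> List[int]:
    """Split total_ms into `parts` integer durations that sum exactly to total_ms."""
    base, remainder = divmod(total_ms, parts)
    # The first `remainder` parts absorb one extra millisecond each.
    return [base + 1 if i < remainder else base for i in range(parts)]


quarter_ms = 500                        # crotchet when the whole note is 2000 ms
triplet = split_evenly(quarter_ms, 3)   # [167, 167, 166]
exact = split_evenly(600, 3)            # [200, 200, 200], a multiple of 3 divides cleanly
```

The three durations differ by at most 1 ms, and their sum is always the original total, so bars would still add up at the millisecond level.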

--Christina Noel

Musicnotes.com

---

From: James Ingram [mailto:j.ingram@netcologne.de]

Sent: Friday, April 15, 2016 7:19 AM

To: Joe Berkovitz <joe@noteflight.com>

Cc: public-music-notation-contrib@w3.org

Subject: Re: Notations in Scope

Hi Joe, all,

Before replying to Joe, I'd like to make another iteration through defining what a "Performable Notation" is.

I currently have:

A Performable Notation is one in which one or more synchronous events can be mapped to one or more graphic objects in the score.

This needs breaking up into some sub-definitions. I want to use the term 'object' to mean something that is perceptible and spatially extended, and the word 'event' to mean something that is perceptible and temporally extended. These two words are heavily used already in computing ('object' is a base class in Javascript, 'event' is used everywhere to mean an instantaneous trigger), so I need to do the standard thing -- define a namespace. I'm going to call it 'score'. I can now make some more formal definitions:

* a score:object is perceptible in space. It therefore has non-zero width, non-zero height, and a defined position (x, y) on a page in a score. score:objects can nest.

* a score:event is perceptible in time. It therefore has a duration greater than or equal to 1ms and a defined position (msPosition) with respect to the beginning of a performance. score:events can nest.

* a score:durationSymbol is a container that has a duration.

* a score:chordSymbol is a score:durationSymbol containing a score:object and one or more score:events.

* a score:restSymbol is a score:durationSymbol that contains a score:object but no score:event.

I can then say:

* A Performable Notation consists of score:objects and score:durationSymbols
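The definitions above could be sketched as Python dataclasses, roughly as follows. This is a minimal sketch only: the class names, field names, and the choice to model chord and rest symbols as one class with two properties are assumptions for illustration, not part of the proposal.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScoreObject:
    """Perceptible in space: a position on a page plus a non-zero extent."""
    x: float
    y: float
    width: float
    height: float
    children: List["ScoreObject"] = field(default_factory=list)  # score:objects can nest

    def __post_init__(self) -> None:
        # "Non-zero" is taken here to mean strictly positive (an assumption).
        if self.width <= 0 or self.height <= 0:
            raise ValueError("a score:object needs non-zero width and height")


@dataclass
class ScoreEvent:
    """Perceptible in time: a position and a duration in milliseconds."""
    ms_position: int
    ms_duration: int
    children: List["ScoreEvent"] = field(default_factory=list)  # score:events can nest

    def __post_init__(self) -> None:
        if self.ms_duration < 1:
            raise ValueError("a score:event lasts at least 1 ms")


@dataclass
class DurationSymbol:
    """A container with a duration, holding a graphic and zero or more events."""
    ms_duration: int
    graphic: ScoreObject
    events: List[ScoreEvent] = field(default_factory=list)

    @property
    def is_rest(self) -> bool:
        # score:restSymbol: a score:object but no score:event
        return not self.events

    @property
    def is_chord(self) -> bool:
        # score:chordSymbol: a score:object and one or more score:events
        return bool(self.events)


notehead = ScoreObject(x=120.0, y=48.0, width=9.0, height=7.0)
chord = DurationSymbol(500, notehead, [ScoreEvent(ms_position=0, ms_duration=500)])
rest = DurationSymbol(500, ScoreObject(x=160.0, y=48.0, width=8.0, height=12.0))
```

A consumer interested only in graphics would read `graphic`, and one interested only in sound would read `events`, which is the separation the definitions are meant to allow.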

Notes:

* The same score:events can be associated with different score:objects -- i.e. different scores of the same piece are possible.

* The same score can contain multiple audio renderings (Use Case MC10)

* Nothing has been said about how score:events are allowed to nest. In fact they can nest both synchronously and consecutively. A performed melody is a score:event.

* a CWMN chord symbol is a score:chordSymbol. If it is decorated by an ornament sign, then it will contain several consecutive score:events.

* some score:objects are containers (e.g. system, staff, voice etc.), some are just annotations (e.g. slurs, 8va signs, performance instructions, hairpins, instrument names that are the labels of staves etc.).

Comments and suggestions for improvements would, of course, be welcome.

In my reply to Joe, I'd like to refer to the Architectural Proposal I made on 9th April. In case anyone has lost the original posting, I include it at the bottom of this posting.

The core proposal is that we should think in the following terms:

There is an authoring application (AUTHORING_APP), that creates an instance of a score (INSTANCE).

The INSTANCE is parsed by an application (PARSING_APP) that does something with the contained data.

The AUTHORING_APP makes a contract (CONTRACT) with the PARSING_APP telling it how the data can be parsed.

In the case of CMN, the AUTHORING_APP would be one of the applications that allow their users to create CMN score INSTANCEs. Applications that create other kinds of score (e.g. Braille editors, or my Moritz) are also in the AUTHORING_APP category.
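The three roles can be sketched as a small round trip. In this sketch JSON stands in for the real serialization purely for brevity (an assumption), and the field names `x`, `y`, `msPosition`, and `msDuration` as well as the function names are hypothetical. The point is only that the CONTRACT names what to expect, while a single INSTANCE carries both the spatial and the temporal values.

```python
import json
from typing import Dict, List

# CONTRACT: names only, no units and no values (hypothetical field names).
CONTRACT = {"symbol": ["x", "y", "msPosition", "msDuration"]}


def authoring_app(symbols: List[Dict[str, int]]) -> str:
    """Create an INSTANCE: one document holding spatial and temporal data."""
    return json.dumps({"symbols": symbols})


def parsing_app(instance: str) -> List[Dict[str, int]]:
    """Read an INSTANCE, rejecting symbols that omit a field named in the CONTRACT."""
    symbols = json.loads(instance)["symbols"]
    for symbol in symbols:
        missing = [name for name in CONTRACT["symbol"] if name not in symbol]
        if missing:
            raise ValueError(f"symbol is missing {missing}")
    return symbols


instance = authoring_app([{"x": 120, "y": 48, "msPosition": 0, "msDuration": 500}])
parsed = parsing_app(instance)
```

Because everything travels in one document, the parsing side never has to reconcile a graphics file with a separately versioned timing file.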

Joe said:

[snip] SVG is a nice existing standard covering graphic objects, it is far more open ended than MIDI. MIDI is in my view not much better than CWMN (and is arguably worse) in the range of musical events that it can represent.

To be consistent with the condition that we shouldn't mention specific technologies, I haven't actually mentioned SVG or MIDI in the formal definitions above. However, I think SVG is going to be important because it is closely related to printing. Many existing AUTHORING_APPs print, so it should be fairly easy for them to create (extended) SVG from their existing code.

MIDI is the best (only?) standard for temporal events that we have got. I can imagine using mp3 files as little building blocks, but that's far less flexible. The PARSING_APP might want to play the score at half speed, for example. That's quite trivial in MIDI, but very difficult to achieve on the fly with audio files. MIDI is also very flexible and well connected in other areas too. The MIDI output from the PARSING_APP can be sent not only to a synthesizer, but also to apparatus controlling lighting or other things. See Use Case MC13.
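The half-speed case can be sketched in a few lines, assuming notes are held as millisecond positions and durations before being turned into MIDI messages. The tuple layout and the name `scale_tempo` are assumptions for illustration, and no MIDI library is involved here.

```python
from typing import List, Tuple

# (ms_position, ms_duration, MIDI note number): a hypothetical event tuple
TimedNote = Tuple[int, int, int]


def scale_tempo(notes: List[TimedNote], factor: float) -> List[TimedNote]:
    """Stretch every position and duration by `factor` (2.0 means half speed)."""
    return [
        # Durations are clamped to at least 1 ms so no event vanishes.
        (round(pos * factor), max(1, round(dur * factor)), pitch)
        for pos, dur, pitch in notes
    ]


melody = [(0, 500, 60), (500, 250, 62), (750, 250, 64)]
half_speed = scale_tempo(melody, 2.0)
# half_speed == [(0, 1000, 60), (1000, 500, 62), (1500, 500, 64)]
```

Pitches are untouched and only the timing changes, which is why this is trivial for symbolic events and hard for recorded audio.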

The PARSING_APP will often be a web application written in Javascript.

Joe: If you want to use HTML because you have existing libraries that you'd like to use, that's fine by me. However, you need an INSTANCE to work with. That means you need an AUTHORING_APP to create it. Have you got one?

For more about the CONTRACT, see the original posting below. It's very much like a schema...

Here's some more about my Moritz and Assistant Performer software:

The INSTANCEs, created by Moritz and read by the Assistant Performer, use CWMN symbols, because they have evolved over centuries to become very legible and use space very efficiently. Also, they can be read by most musicians. (Note that we do not read consistently from left to right, but in chunks.)

But I don't use tuplets. Duration classes are defined as bandwidths. For example, like this (durations in milliseconds)

[Attached image: image001.png]

I compose with millisecond durations, and let Moritz do the transcription. Bars add up at the millisecond level. There is nothing to say that all the quavers in the score have the same duration.
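As a sketch of what "duration classes as bandwidths" could look like in code: each class covers a range of millisecond durations, and a duration is transcribed to whichever class its value falls in. The band boundaries below are HYPOTHETICAL placeholders, since the real values are in the attached image and are not reproduced here; the names `LOWER_BOUNDS_MS`, `CLASS_NAMES`, and `duration_class` are likewise assumptions.

```python
from bisect import bisect_right

# HYPOTHETICAL bandwidths: inclusive lower bound (ms) of each class, ascending.
LOWER_BOUNDS_MS = [1, 125, 250, 500, 1000, 2000]
CLASS_NAMES = ["demisemiquaver", "semiquaver", "quaver", "crotchet", "minim", "semibreve"]


def duration_class(ms_duration: int) -> str:
    """Return the name of the duration class whose band contains ms_duration."""
    if ms_duration < LOWER_BOUNDS_MS[0]:
        raise ValueError("duration must be at least 1 ms")
    # bisect_right finds the first bound greater than the duration;
    # the class is the one just before it.
    index = bisect_right(LOWER_BOUNDS_MS, ms_duration) - 1
    return CLASS_NAMES[index]


first = duration_class(300)    # "quaver"
second = duration_class(450)   # "quaver": same symbol, different duration
third = duration_class(500)    # "crotchet"
```

With these placeholder bands, 300 ms and 450 ms are both written as quavers, which illustrates how two quavers in the same score need not have the same duration.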

Note that Moritz writes scores that contain only a small number of annotations. Given an appropriate SVG editor, it would be quite straightforward to add more (phrase marks, hairpins etc.) in the annotations layer. Unfortunately Inkscape isn't very good, and I haven't tried Corel Draw or Illustrator yet... I know that it is possible to draw beautiful slurs (pointed lines) using Corel Draw - presumably Illustrator can do that too nowadays. :-)

The Assistant Composer's documentation is here

http://james-ingram-act-two.de/moritz3/assistantComposer/assistantComposer.html

Some scores can be viewed and played on-line by the Assistant Performer at

http://james-ingram-act-two.de/open-source/assistantPerformer/assistantPerformer.html

The SVG files can all be downloaded from GitHub at

https://github.com/notator/assistant-performer/tree/master/scores

They can be viewed quite well on GitHub, but they need to load fonts, and that doesn't always work there.

Song Six is obsolete on GitHub. It can no longer be played on the Assistant Performer, but it can be viewed at

http://james-ingram-act-two.de/compositions/songSix/setting1Score/Song%20Six.html

where you can play an (unsynchronised) mp3 of it.

Joe: if there's anything I failed to cover from your previous post, please raise it again. This post is quite long enough already.

all the best,

James

=================================================

Here's my posting from 9th April, in case anyone wants to read it, but has lost it.

To be consistent with the above, I've changed the abstract authoring application's name from AA to AUTHORING_APP, and the abstract parsing application's name from PA to PARSING_APP.

Hi all,

Great to see you all yesterday. Nice to see that you are all real people! :-)

I'd also like to thank Joe for his first draft of the Architectural Proposals. There are some really important insights there. However, I completely agree with Zoltan, that we need to discuss the architecture without mentioning specific technologies (SVG, MusicXML, CSS etc.)

I was only able to finish reading Joe's doc just before the meeting, so there wasn't really time for it to sink in, or for me to prepare a considered reply.

Here's what's going through my mind this morning:

I have a working prototype that is an instance of such an architecture.

Here's a description, leaving out the specific technologies:

There is an authoring application (AUTHORING_APP), that creates an instance of a score (INSTANCE).

The INSTANCE is parsed by an application (PARSING_APP) that does something with the contained data.

The AUTHORING_APP makes a contract (CONTRACT) with the PARSING_APP telling it how the data can be parsed.

That's all.

Here's how my prototype fits this model (the code is all open-source on GitHub - see [1]):

AUTHORING_APP is my Assistant Composer, which is part of Moritz (happens to be written in C#, and runs offline inside Visual Studio).

Each INSTANCE that Moritz creates is a score written in standard SVG, extended to contain MIDI information.

The Assistant Composer creates its output according to the CONTRACT [2], and (in every INSTANCE) includes an xlink: to a file that tells PARSING_APP's Developer how the file is organised.

PARSING_APP is my Assistant Performer web application. There is a stable, working version at [3].

The CONTRACT Moritz uses isn't machine readable, but that doesn't really matter because the Assistant Performer doesn't have to parse the file. All that is really necessary is that the Assistant Performer's Developer can find out how the file is structured, so as to be able to program his app correctly.

Unfortunately I don't know how to write a machine readable schema that describes extensions to SVG. Maybe someone here does? If it were machine readable, then software could be written that could check if the INSTANCE really conforms to the CONTRACT.

More about the CONTRACT:

This just contains the formal names of the objects in the file, and how they are nested. The names are supposed to be self-explanatory. My Svg Score Extensions [2] document provides some comments too.

Common Western Music Notation defines things like "system", "staff", "voice", "chord" etc.

Non-Western musics would define things with other names, that nest in their own ways.
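A machine-readable version of such a CONTRACT, limited to names and nesting, could be sketched as follows. This is only an illustration under stated assumptions: the `CONTRACT` table and the element names in it are hypothetical, the INSTANCE is plain XML without namespaces rather than real extended SVG, and `violations` is an invented helper. Only Python's standard `xml.etree.ElementTree` parser is used.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Set

# HYPOTHETICAL contract: element name -> names allowed as direct children.
# It holds names and nesting only, with no units and no values.
CONTRACT: Dict[str, Set[str]] = {
    "score": {"system"},
    "system": {"staff"},
    "staff": {"voice"},
    "voice": {"chord", "rest"},
    "chord": set(),
    "rest": set(),
}


def violations(element: ET.Element, contract: Dict[str, Set[str]]) -> List[str]:
    """Return a message for every element or nesting the contract does not allow."""
    if element.tag not in contract:
        return [f"unknown element <{element.tag}>"]
    problems: List[str] = []
    for child in element:
        if child.tag not in contract[element.tag]:
            problems.append(f"<{child.tag}> not allowed inside <{element.tag}>")
        else:
            problems.extend(violations(child, contract))
    return problems


good = ET.fromstring(
    "<score><system><staff><voice><chord/><rest/></voice></staff></system></score>"
)
bad = ET.fromstring("<score><system><voice><chord/></voice></system></score>")

good_report = violations(good, CONTRACT)   # []
bad_report = violations(bad, CONTRACT)     # ["<voice> not allowed inside <system>"]
```

A different notation tradition would supply a different table with its own names and nesting, and the checking function would stay the same.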

I'm not sure that I really understand Zoltan's profiles, but I could well imagine them describing different levels of implementation of CMN, which would be expressed in different CONTRACTs. For example, the parsing application should only look for grace notes if they are actually included in the CONTRACT. I imagine that some parsing applications might need to be told which notes are grace notes, in order to do something special with them. My apps don't use grace notes...

Apropos Separation of responsibility. Note that the CONTRACT contains no units of measurement. No pixels, no milliseconds. Those are only included in the INSTANCEs.

And the parsing application knows when it is reading temporal info (milliseconds), and when it is reading spatial info (often pixels).

All the best,

James

[1] https://github.com/notator

[2] http://james-ingram-act-two.de/open-source/svgScoreExtensions.html

[3] http://james-ingram-act-two.de/open-source/assistantPerformer/assistantPerformer.html

=================================================

Attachments

- image/png attachment: image001.png

Received on Thursday, 21 April 2016 16:07:24 UTC