- From: Mandyam, Giridhar <mandyam@quicinc.com>

- Date: Fri, 15 Feb 2013 18:01:36 +0000

- To: Martin Thomson <martin.thomson@gmail.com>, "public-media-capture@w3.org" <public-media-capture@w3.org>

- Message-ID: <CAC8DBE4E9704C41BCB290C2F3CC921A163BDCF1@nasanexd01h.na.qualcomm.com>

Thanks for the write-up. It really frames the discussion.

>__Other Settings, Implications and Interactions

The other settings that we've seen (fillLightMode) directly affect sources. These are easy: constraints can't specify conflicting values.

However, this implies that the first track to apply a given setting determines the operating mode for the source. As long as that track lives, its setting is the one that wins and other tracks are either unable to attach to the source, or unable to apply another setting.

This is not ideal when settings interact. We might manage as long as error feedback indicates that the error is due to there being other constraints on other tracks. Or maybe we need to expose both the set of all possible modes along with the current set of possible modes, noting of course that the track that made the current setting could change it at any time. That could result in an API that is a little hard to explain properly. I don't have a good answer to this problem.

What about source settings that are directly manipulated by the end user? For instance, what if the user is directly able to change the fillLightMode? If a track has already been initiated with a certain source setting and the settings change, then should this be an error condition? I would think that some applications may be content to live with the end-user initiated settings change without destroying the track. One possible approach is to define a settings change listener of some sort, which notifies the application when a settings change has occurred (due to end user interaction, or another track initialization, etc. – the cause of the settings change does not need to be exposed to the application). Then the application can handle the settings change in any way it wants to - treat it as an error, or continue the track with the new settings.
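To make the listener idea concrete, here is a minimal sketch in TypeScript. Everything in it (`SketchSource`, `onSettingsChange`, `applySettings`) is a hypothetical name invented for illustration, not an existing or proposed browser API. It only shows the shape of the notification: the application learns the new settings, but not what caused the change.

```typescript
// Hypothetical sketch: none of these names come from a real browser API.
type SourceSettings = { fillLightMode: "on" | "off" | "auto" };
type SettingsListener = (current: SourceSettings) => void;

class SketchSource {
  private listeners: SettingsListener[] = [];

  constructor(private settings: SourceSettings) {}

  // Register a listener; the returned function removes it again.
  onSettingsChange(listener: SettingsListener): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }

  // Called for any change (end user, another track, ...).
  // The cause is deliberately not passed on to listeners.
  applySettings(next: SourceSettings): void {
    this.settings = next;
    this.listeners.forEach((l) => l({ fillLightMode: next.fillLightMode }));
  }

  current(): SourceSettings {
    return { fillLightMode: this.settings.fillLightMode };
  }
}

// Usage: the application decides for itself whether a change is an error.
const demoSource = new SketchSource({ fillLightMode: "off" });
const seenModes: string[] = [];
const unsubscribe = demoSource.onSettingsChange((s) => {
  seenModes.push(s.fillLightMode);
});
demoSource.applySettings({ fillLightMode: "on" });   // application is notified
unsubscribe();
demoSource.applySettings({ fillLightMode: "auto" }); // no longer notified
```

An application that treats the change as fatal could stop its track inside the listener; one that is content with the new setting could simply carry on.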

From: Martin Thomson [mailto:martin.thomson@gmail.com]

Sent: Thursday, February 14, 2013 5:06 PM

To: public-media-capture@w3.org

Subject: Super-academic, highly-abstract meta-modelling time: The Media Path

I was asked to more clearly elucidate my concerns with various constraint proposals that were in the throes of being proposed during the interim. Had I been sufficiently forewarned, perhaps we could have avoided something of a lengthy discussion, but then we'd never have come to this email, which I think you will find highly enlightening. Though the extent to which the enlightenment is relevant to the work of this task force may vary.

I initially reacted poorly to the thought that constraints on tracks could imply that something would perform processing on those tracks. That didn't fit the model I had...at the time.

Here's the expanded model and how I think that constraints like aspect ratio can fit that model. I've talked to Travis about this, and I think we agree on the high level points. I believe that this is close to the model he used to build the settings proposals. However, Travis hasn't seen this email yet and my first draft was totally incoherent. So...

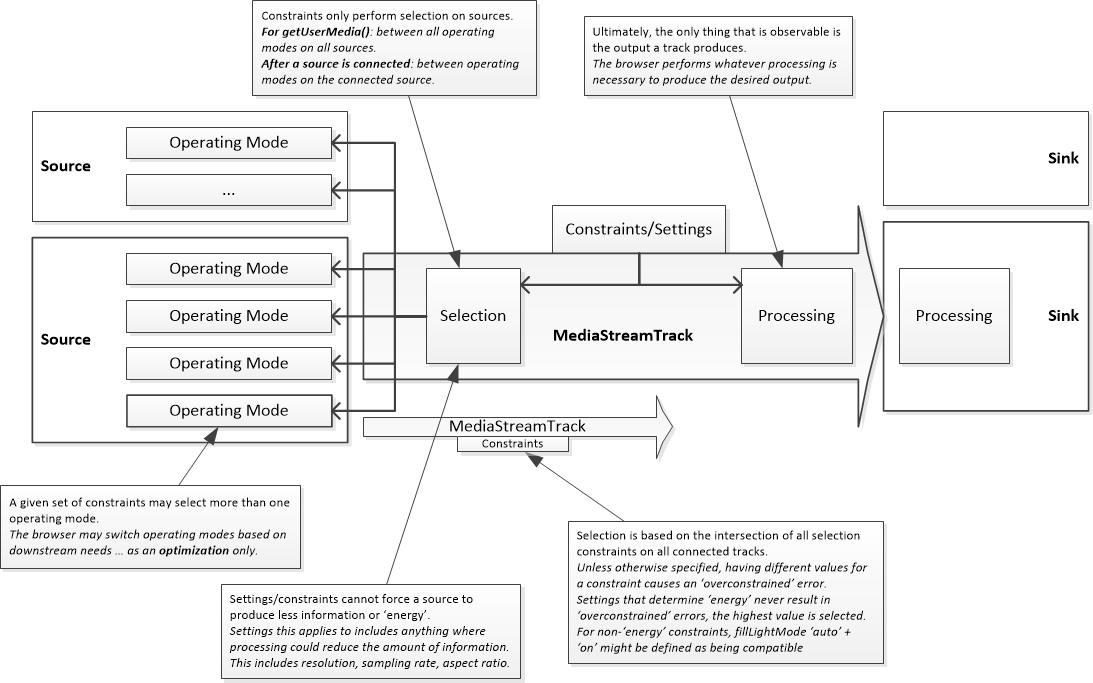

(tl;dr version: see the picture)

__Actors

I think that we all agreed to this basic taxonomy:

* Sources: Camera, microphone, file, blob, RTCPeerConnection, processing API, …

* Connector: MediaStreamTrack*

* Sinks: <video> tag, <audio> tag, RTCPeerConnection, processing API, recording API, …

Where cardinality is:

Source (1) .. (*) Connector (1) .. (*) Sink

__A Picture

1000 words worth of goodness. Apologies for the size.

[Inline images 2]

__Summary of Conclusions

(Thanks to Travis for these.)

* "Selection" is a process that evaluates changes in connector constraints and attempts to choose a source, plus an operating mode on the source

* Selection has an associated "scope" (of operating modes)

* If a connector doesn't have a source, then its scope doesn't apply (it selects from the empty set)

* If a connector is in the process of acquiring a source (from getUserMedia), its scope is all available [Note 1] operating modes of all available sources

* If a connector has a source, its scope is limited to the operating modes of its source (only)

* Selection with multiple connectors has a specific policy, depending on constraint, one of:

  * "greatest common energy [Note 2]" (the operating mode selected is the one that maps to the highest energy need of the connected sinks)

  * "pick one only" (the operating mode cannot be in multiple contradictory states, e.g., the fill light can't be both on and off)

  * special - as defined by the constraint, some choices might not be mutually exclusive (on and auto)

Note 1: Sources (and operating modes) can be rendered unavailable by having other tracks connected to them. Some operating modes are mutually exclusive. For example, you can't have the fill light both on and off based on different constraints; or, an encoding camera might operate in a way that is not compatible with having multiple users of that data, so the browser simply disables sharing for that camera. This can also happen through explicit action, but we need to define those special "constraints".

Note 2: The concept of 'energy' needs further explanation.
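The three scope rules in the summary can be sketched as a small function. This is a rough illustration in TypeScript under assumed types; `ConnectorState`, `SketchMediaSource` and `selectionScope` are made-up names, and real operating modes would carry far more than a line count.

```typescript
// Illustrative types only; not part of any specification.
interface OperatingMode { lines: number; }
interface SketchMediaSource { id: string; modes: OperatingMode[]; }
interface ScopedMode { sourceId: string; mode: OperatingMode; }

type ConnectorState =
  | { kind: "no-source" }
  | { kind: "acquiring"; available: SketchMediaSource[] }
  | { kind: "attached"; source: SketchMediaSource };

function modesOf(s: SketchMediaSource): ScopedMode[] {
  return s.modes.map((mode) => ({ sourceId: s.id, mode }));
}

// Returns the set of operating modes that selection may choose from.
function selectionScope(state: ConnectorState): ScopedMode[] {
  // No source: selection works on the empty set.
  if (state.kind === "no-source") return [];
  // Attached: only the operating modes of the connector's own source.
  if (state.kind === "attached") return modesOf(state.source);
  // Acquiring: all available operating modes of all available sources.
  return state.available.reduce<ScopedMode[]>(
    (all, s) => all.concat(modesOf(s)),
    []
  );
}

const cameraSource: SketchMediaSource = {
  id: "camera",
  modes: [{ lines: 480 }, { lines: 1080 }],
};
const fileSource: SketchMediaSource = { id: "file", modes: [{ lines: 720 }] };

const acquiringScope = selectionScope({
  kind: "acquiring",
  available: [cameraSource, fileSource],
});
const attachedScope = selectionScope({ kind: "attached", source: cameraSource });
const emptyScope = selectionScope({ kind: "no-source" });
```

Sources or modes made unavailable by other tracks (Note 1) would simply be filtered out of `available` before this function runs.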

__Energy

Energy is just a word that has little meaning in this context. Energy == information, but in a qualitative fashion only. The energy a source produces is the amount of information the track conveys. A higher resolution contains more energy, a higher sample rate contains more energy.

Processing can reduce energy safely, either by frame dropping, scaling down, cropping, adding black bars, etc...

In contrast, scaling up doesn't add energy, it just pads with bits that contain no information. Thus, certain sinks will be (and should be) unwilling to scale up. For example, RTCPeerConnection doesn't want to send pointless extra stuff on the wire - it should be able to learn of the actual energy of the track and refuse to use anything that is scaled up, while scaling down as circumstances dictate. If you want to scale up real-time video, scale it up on the receiver! (BTW, don't infer new API requirements from this, this is purely internal browser-stuff.)

When multiple tracks are attached to the same source, each might set a constraint on energy. Any constraint that limits energy is ignored for the purposes of selection. Any constraint that imposes a minimum level of energy is used to determine which source and operating mode is selected. The highest energy constraint from any track attached to a source is what determines its actual operating mode.

For example, if track A wants 1080 lines minimum and track B wants 480 lines minimum, track A wins and the camera produces 1080 lines. If track B also wanted 480 lines maximum, then it will have to apply some processing to get that.

Constraints/settings that follow this rule include resolution (height and/or width), frame or sample rate, bits per sample (if we could be bothered with this). Minimum values are used to select sources or operating modes, maximum values are sent to the processing box. Cropping/letter-boxing are always processing instructions.
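The track A / track B example can be worked through in a few lines of TypeScript. This is only a sketch of the rule as described above: `LineConstraint`, `selectSourceLines` and `deliveredLines` are invented names, and choosing the lowest mode that satisfies the highest minimum is an assumption, since the model would also allow the source to produce more.

```typescript
// Illustrative names; only vertical resolution ("lines") is modelled.
interface LineConstraint { minLines?: number; maxLines?: number; }

// The highest minimum across all attached tracks drives the source.
function requiredLines(tracks: LineConstraint[]): number {
  return tracks.reduce((highest, t) => Math.max(highest, t.minLines ?? 0), 0);
}

// Assumption: pick the lowest supported mode that meets the requirement.
// Maximum values are ignored here; they are handled by processing below.
function selectSourceLines(
  supported: number[],
  tracks: LineConstraint[]
): number | null {
  const required = requiredLines(tracks);
  const candidates = supported
    .filter((lines) => lines >= required)
    .sort((a, b) => a - b);
  return candidates.length > 0 ? candidates[0] : null;
}

// Per-track processing: a maximum is met by scaling down, never by the source.
function deliveredLines(sourceLines: number, track: LineConstraint): number {
  return track.maxLines === undefined
    ? sourceLines
    : Math.min(sourceLines, track.maxLines);
}

const trackA: LineConstraint = { minLines: 1080 };
const trackB: LineConstraint = { minLines: 480, maxLines: 480 };

// Track A wins: the camera runs at 1080 lines.
const cameraLines = selectSourceLines([480, 720, 1080], [trackA, trackB]);
// Track B gets its 480 lines from the processing step.
const linesForB = cameraLines === null ? null : deliveredLines(cameraLines, trackB);
const linesForA = cameraLines === null ? null : deliveredLines(cameraLines, trackA);
```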

__Other Settings, Implications and Interactions

The other settings that we've seen (fillLightMode) directly affect sources. These are easy: constraints can't specify conflicting values.

However, this implies that the first track to apply a given setting determines the operating mode for the source. As long as that track lives, its setting is the one that wins and other tracks are either unable to attach to the source, or unable to apply another setting.

This is not ideal when settings interact. We might manage as long as error feedback indicates that the error is due to there being other constraints on other tracks. Or maybe we need to expose both the set of all possible modes along with the current set of possible modes, noting of course that the track that made the current setting could change it at any time. That could result in an API that is a little hard to explain properly. I don't have a good answer to this problem.
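For what it's worth, the "first track wins" behaviour with error feedback could look roughly like the sketch below. It is written in TypeScript with invented names (`PickOneSetting`, `ApplyResult`, the `"held-by-other-track"` reason); it assumes that a track which is the only holder of a setting may change it, and that the setting is released when the last holder goes away.

```typescript
// Hypothetical sketch of a "pick one only" setting shared by several tracks.
type SketchFillLight = "on" | "off" | "auto";

type ApplyResult =
  | { ok: true }
  | { ok: false; reason: "held-by-other-track"; current: SketchFillLight };

class PickOneSetting {
  private holders: string[] = [];
  private value: SketchFillLight | null = null;

  apply(trackId: string, wanted: SketchFillLight): ApplyResult {
    const others = this.holders.filter((id) => id !== trackId);
    const current = this.value;
    // Conflict: another live track already holds a different value.
    if (current !== null && current !== wanted && others.length > 0) {
      return { ok: false, reason: "held-by-other-track", current };
    }
    this.value = wanted;
    if (this.holders.indexOf(trackId) === -1) this.holders.push(trackId);
    return { ok: true };
  }

  // When the last holder ends, the setting becomes free again.
  release(trackId: string): void {
    this.holders = this.holders.filter((id) => id !== trackId);
    if (this.holders.length === 0) this.value = null;
  }
}

const fillLight = new PickOneSetting();
const firstApply = fillLight.apply("trackA", "on");    // first track wins
const secondApply = fillLight.apply("trackB", "off");  // rejected, with a reason
fillLight.release("trackA");
const thirdApply = fillLight.apply("trackB", "off");   // free again, so accepted
```

The `reason` field is the point: it tells the application that the failure comes from constraints on other tracks rather than from the source itself.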

In general, the model also implies that tracks don't report the actual "shape" of a track. Tracks can report the settings that are currently in effect and any optional settings that could be. But tracks cannot say that the video flowing inside is this or that resolution - it could change, and should be permitted to. It might be OK to provide an indication of the current source operation mode, with a clear warning that this is volatile and not under direct application control.

__Double Processing

There are two places in the media path where processing logically occurs. Implementations will naturally collapse those. For instance, two lots of scaling can be reduced to a single scaling operation in most cases. However, sometimes this will result in ugliness.

The best example of ugly would be a 16:9 source that is sent through a 16:10 constrained track to a 16:9 video tag. In that case, the correct thing to do is to display a nice black frame around the video, unless one of the aspect ratio changes cropped rather than black-barred, in which case...
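To put numbers on the black-bar case, here is a small TypeScript sketch. The frame sizes are assumptions picked for easy arithmetic (a 1920x1080 source, a 1920x1200 track frame, a 1920x1080 video element), and `fitInside` is an illustrative helper, not a browser function.

```typescript
interface FrameSize { width: number; height: number; }

// Largest size with the aspect ratio of `content` that fits inside `box`.
function fitInside(content: FrameSize, box: FrameSize): FrameSize {
  const scale = Math.min(box.width / content.width, box.height / content.height);
  return {
    width: Math.round(content.width * scale),
    height: Math.round(content.height * scale),
  };
}

const sourceFrame: FrameSize = { width: 1920, height: 1080 };  // 16:9 source
const trackFrame: FrameSize = { width: 1920, height: 1200 };   // 16:10 track (assumed)
const videoElement: FrameSize = { width: 1920, height: 1080 }; // 16:9 sink

// Stage 1: letter-box the source into the track frame (bars top and bottom).
const pictureInTrack = fitInside(sourceFrame, trackFrame);     // 1920x1080

// Stage 2: fit the whole track frame, bars included, into the element.
const trackInElement = fitInside(trackFrame, videoElement);    // 1728x1080
const stageTwoScale = trackInElement.width / trackFrame.width; // 0.9
const pictureOnScreen: FrameSize = {
  width: Math.round(pictureInTrack.width * stageTwoScale),     // 1728
  height: Math.round(pictureInTrack.height * stageTwoScale),   // 972
};

// If the two stages were naively collapsed, the picture would fill the element.
const collapsed = fitInside(sourceFrame, videoElement);        // 1920x1080
```

With both stages honoured, the picture occupies 1728x972 of a 1920x1080 element, so it has bars on all four sides. Collapsing the two steps gives a different result, which is why the two processing points cannot always be merged.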

__Example

We can apply this model to answer the important questions:

What happens if you constrain/set a track to width=640,height=480 for a 1920x1080 camera source?

If you consider the model, you reach two conclusions:

* the source only needs to provide 480 lines' worth of energy, though it may provide more; it could just pipe out 1920x1080 video

* the data that is provided to the sink is scaled and cropped (or letter-boxed) to 640x480

Adding another track (with no constraints) results in output of 1920x1080, depending on what limits are implicitly applied by its sink.
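The two ways of getting from 1920x1080 to 640x480 work out as follows. This TypeScript sketch uses invented helper names (`letterBox`, `cropRegion`) and assumes centered cropping; it is arithmetic only and says nothing about how a browser would implement it.

```typescript
interface Dimensions { width: number; height: number; }

// Letter-box: scale the whole source to fit, then pad with black bars.
function letterBox(source: Dimensions, target: Dimensions) {
  const scale = Math.min(target.width / source.width, target.height / source.height);
  const picture: Dimensions = {
    width: Math.round(source.width * scale),
    height: Math.round(source.height * scale),
  };
  return {
    picture,
    barLeftRight: (target.width - picture.width) / 2,
    barTopBottom: (target.height - picture.height) / 2,
  };
}

// Crop: the part of the source (assumed centered) that has the target's
// aspect ratio and is then scaled down to the target size.
function cropRegion(source: Dimensions, target: Dimensions): Dimensions {
  const scale = Math.max(target.width / source.width, target.height / source.height);
  return {
    width: Math.round(target.width / scale),
    height: Math.round(target.height / scale),
  };
}

const cameraOutput: Dimensions = { width: 1920, height: 1080 };
const trackTarget: Dimensions = { width: 640, height: 480 };

// Picture is 640x360 with 60-pixel bars above and below.
const letterBoxed = letterBox(cameraOutput, trackTarget);
// A 1440x1080 region of the source is kept and scaled to 640x480.
const cropped = cropRegion(cameraOutput, trackTarget);
```

In both cases the track delivers 640x480 to its sink, while the source is free to keep producing 1920x1080.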

__Make It Simpler, Please

One major thing we could do to simplify things is to dump the idea of mandatory vs. optional constraints. This model supports a lot of flexibility without having "soft" constraints. Anything you don't care that much about can be applied as settings after connecting the track.

I can actually see how this model could be considered way too complicated as it is without optional constraints. It's already hard enough to implement. More importantly, as a user of the API, it's very difficult to understand the model sufficiently that you can choose optional constraints that produce sensible, or even predictable, outcomes.

__Render This All Moot

By allowing applications to gain access to information about sources and to connect sources to local playback sinks prior to gaining consent.

(In the same vein: Harald did make a mildly convincing argument for allowing this after consent for one stream was granted, based on the premise that once you can grab an image using your camera, there isn't much left that fingerprinting has to do. That didn't account for very tightly controlled sources, or tainted streams, however, so I'm not sure we've reached that particular place just yet.)

__End Transmission

Attachments

- image/png attachment: image001.png

Received on Friday, 15 February 2013 18:02:14 UTC