- From: Simon Taylor <simon@zappar.com>

- Date: Tue, 15 Mar 2022 14:06:36 +0000

- To: public-immersive-web@w3.org

- Message-Id: <0FD9411C-5384-4582-9F2B-37001752B74B@zappar.com>

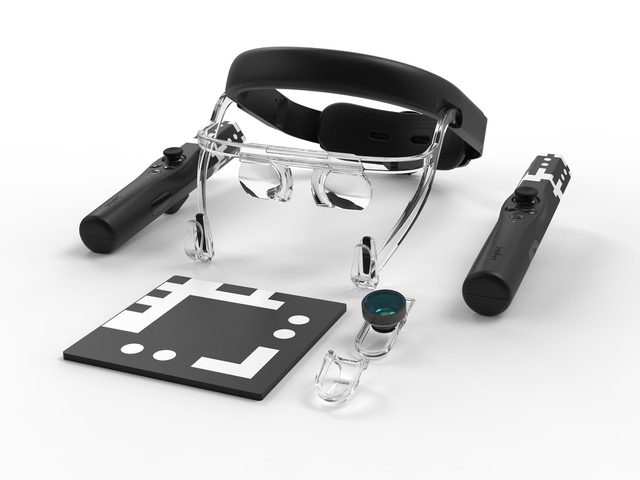

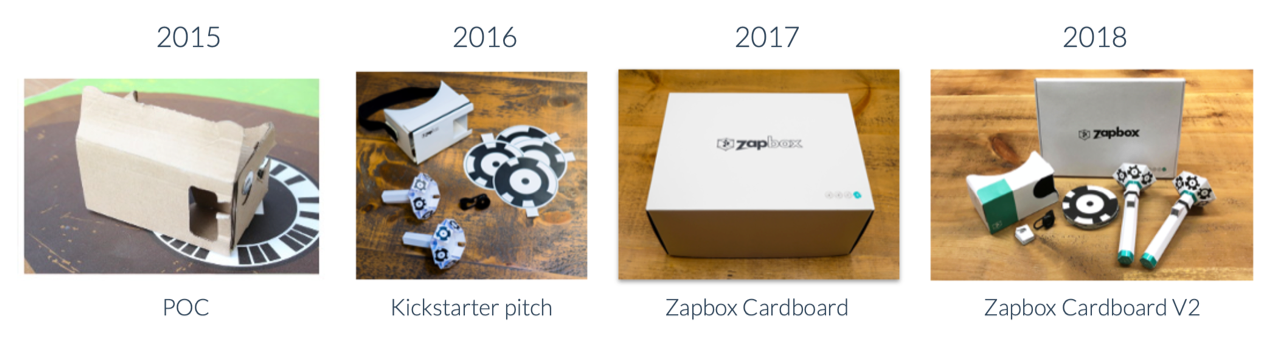

Hi again everyone,

In my previous email I mentioned that I'd send a separate one introducing our Zapbox product. We've just started investigating a WebXR runtime for Zapbox, and I think the product idea as a whole might interest the wider WebXR community.

Zapbox is a new approach to smartphone-powered VR (and video see-through MR). It includes two controllers and offers full 6-DoF tracking, with the aim of delivering fully tracked XR experiences at a really affordable price. Retail pricing isn't set in stone yet, but we expect it to land somewhere around $60. The attached render shows the full Zapbox kit (minus a few additional world markers).

The headset has a very open design, which makes it well suited for MR, and the flattened lower edge of the lenses gives a smooth transition from the direct real-world view to the XR content. For VR, the content still feels immersive, but the user keeps some awareness of the real-world environment: an advantage for co-located collaborative experiences, less so for the terrifying horror VR experiences out there!

The controllers connect over Bluetooth LE for compatibility with both Android and iOS. They include an IMU (accelerometer and gyroscope) and a set of inputs similar to the Oculus Touch controllers, so it should be fairly easy to adapt existing content. Positional tracking comes from the "D3" marker designs on the ends of the controllers, which are tracked visually through the smartphone's camera. We also include some "world markers", which give a physical way to set up the origin for content and will allow a consistent world coordinate system for multi-user experiences.

Some recent devices (notably iPhone 11 and later) include high-quality ultra-wide cameras capable of providing HD frames at 60 fps, and we plan to use those cameras where available. Other devices can use the included ultra-wide lens adapter to increase the field of view of their main camera. In both cases the wider field of view enlarges the region in which the controllers can be tracked in full 6-DoF, and it better matches the camera field of view to the eye viewport field of view for the video see-through MR use case.

We're very close to the first production run (just awaiting the final production sample for sign-off), and we aim to fulfil our initial pre-orders and have some retail stock available around June this year.

This new version of Zapbox is a complete re-imagining of the product, but we have been experimenting with similar underlying ideas since 2015 in a form factor inspired by Google Cardboard, culminating in the flat-pack 2018 iteration. Our Zapbox Cardboard controllers were entirely passive but still offered 6-DoF tracking and an analog trigger (so they are actually capable enough for the basic generic tracked-controller WebXR API). As part of the Kickstarter campaign for the new version, I posted an as-live walkthrough showing what was possible with Zapbox Cardboard. It demonstrates some of the content we've built in our in-house engine and covers some implementation details, such as how we render the camera feed in stereo:

https://vimeo.com/473170564/a9dbe9ab14

We feel it's a pretty compelling step up from what was possible with the standard Google Cardboard approach, and the tabletop setup in particular seems well suited to this style of MR content.

For the new product we are rewriting the runtime from scratch, this time making proper use of predicted poses, late warping, and late latching of camera frames in the compositor to minimise latency. We will expose a native API (likely quite close to OpenXR) and a wrapper XR Provider plugin for Unity, but with those, developers will need to build native apps and publish them separately to the iOS and Android app stores.
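To illustrate what "predicted poses" usually means: a common approach is to extrapolate the latest tracked orientation forward to the expected display time using the gyroscope's angular velocity. The TypeScript below is a generic sketch, not Zapbox's runtime code. It assumes the IMU reports body-frame angular velocity in rad/s and that this rate stays constant over the short prediction interval; the names `Quat`, `Vec3`, `multiply`, `normalize`, and `predictOrientation` are hypothetical.

```typescript
// Unit quaternion; w is the scalar part.
interface Quat { w: number; x: number; y: number; z: number; }
// Angular velocity in rad/s (assumed body frame).
interface Vec3 { x: number; y: number; z: number; }

// Hamilton product a * b.
function multiply(a: Quat, b: Quat): Quat {
  return {
    w: a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
    x: a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
    y: a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
    z: a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
  };
}

// Rescale to unit length to stop numerical drift accumulating.
function normalize(q: Quat): Quat {
  const n = Math.hypot(q.w, q.x, q.y, q.z);
  return { w: q.w / n, x: q.x / n, y: q.y / n, z: q.z / n };
}

// Hypothetical helper: rotate q forward by `dt` seconds at a constant
// body-frame angular velocity `omega` (constant-rate assumption).
function predictOrientation(q: Quat, omega: Vec3, dt: number): Quat {
  const rate = Math.hypot(omega.x, omega.y, omega.z); // rad/s
  const angle = rate * dt;                             // radians turned during dt
  if (angle < 1e-9) return q;                          // effectively no rotation
  // Delta rotation about axis omega/rate by `angle`: (cos(a/2), axis * sin(a/2)).
  const s = Math.sin(angle / 2) / rate;
  const delta: Quat = {
    w: Math.cos(angle / 2),
    x: omega.x * s,
    y: omega.y * s,
    z: omega.z * s,
  };
  // Body-frame increments compose on the right.
  return normalize(multiply(q, delta));
}
```

For example, `predictOrientation(latestPose, gyroReading, 0.02)` would estimate the orientation roughly 20 ms ahead, where `latestPose`, `gyroReading`, and the 20 ms figure are placeholders. Late warping then reprojects the rendered frame with a fresher pose at compositing time to correct whatever error the prediction leaves.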
I've recently caught up on the massive progress the working group has made on the WebXR specifications since we first started experimenting with Zapbox, and I'm really excited about the possibilities a WebXR implementation for Zapbox could unlock. If we can deliver a high-quality implementation, I can easily see WebXR becoming the primary way content is delivered. The Zapbox WebXR browser would render both immersive-vr and immersive-ar sessions in stereo; the only distinction would be whether the runtime composites the latest camera frame behind the content.

If anyone wants further updates on our progress, the best place to find them will be the updates section of our Kickstarter project page. I promise not to spam this list with any more updates…

https://www.kickstarter.com/projects/all-newzapbox/all-new-zapbox-awesome-mixed-reality-for-40/posts

There was no particular purpose to this message beyond sharing what we're up to, but I hope it was of some interest!

Simon

Attachments

- text/html attachment: stored

- image/jpeg attachment: full_zapbox_kit.jpeg

- image/png attachment: Screenshot_2022-03-15_at_13.45.27.png

Received on Tuesday, 15 March 2022 14:06:52 UTC