- From: Timothy Holborn <timothy.holborn@gmail.com>

- Date: Wed, 2 Nov 2022 00:46:54 +1000

- To: Dave Raggett <dsr@w3.org>

- Cc: public-cogai <public-cogai@w3.org>

- Message-ID: <CAM1Sok0Qyqy-w9G1_BAa8MvHLgFc2gnBNha74PemPaYoDzZQFQ@mail.gmail.com>

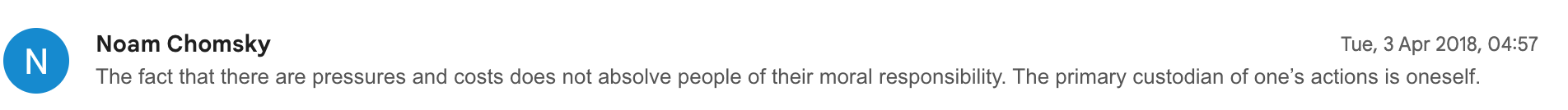

https://twitter.com/dandolfa/status/1187849109782306817

https://www.google.com/search?q=toilet+paper+shortage

Noam told me it wasn't his area. He later followed up with the following statement:

[image: Screen Shot 2022-11-02 at 12.42.25 am.png]

I responded to it, and we both broadly agree, save in circumstances where natural persons are impaired for some significant reason.

Human agency is important, and it is often unsupported by AI systems, which has various significant implications.

tim.h.

On Wed, 2 Nov 2022 at 00:23, Dave Raggett <dsr@w3.org> wrote:

> The lecture focuses on traditional logic and ignores the imprecision and context sensitivity of natural language. As a result it sounds rather dated.
>
> The other lectures in the course:
>
> https://www.cs.princeton.edu/courses/archive/fall16/cos402/
>
> They cite Chomsky’s comments deriding researchers in machine learning who use purely statistical methods to produce behaviour that mimics something in the world, but who don't try to understand the meaning of that behaviour.
>
> This of course predates recent work on large language models, which demonstrate a very strong grasp of language and world knowledge but are rather weak with respect to reasoning. The brittleness of both hand-authored knowledge and deep learning motivates work on machine learning of reasoning for everyday knowledge.
>
> The last twenty years have shown that machine learning is vastly superior to hand authoring when it comes to things like speech, text and image processing. However, we have yet to successfully mimic human learning and reasoning.
>
> To get there, I believe that some hand authoring for small-scale experiments can help illustrate what’s needed from more scalable approaches. I don’t see anyone else on this list being interested, though, in helping with that. Where are the programmers and analysts when you need their help?
>
> I disagree that the slides you linked to are effective in arguing for applying KR to ML. You could say that ML requires a choice of KR, since ML software has to operate with information in some form or other, but that is like stating the obvious.
>
> Perhaps you are assuming that KR should use a high-level theoretical formalism for knowledge? That’s wishful thinking as far as I am concerned. It certainly didn’t help when it came to former stretch goals for AI, e.g. beating chess masters at their own game.
>
> On 1 Nov 2022, at 13:25, Paola Di Maio <paola.dimaio@gmail.com> wrote:
>
> A short, sweet set of slides that speaks CS language in making the argument for KR in ML. The authors may be instructors at Princeton and do not cite any literature, but they do a good job of summarizing the main point:
>
> Lecture 12: Knowledge Representation and Reasoning Part 1: Logic
>
> https://www.cs.princeton.edu/courses/archive/fall16/cos402/lectures/402-lec12.pdf
>
> Some may appreciate the presence of a penguin illustrating the argument.
>
> Dave Raggett <dsr@w3.org>

Attachments

- image/png attachment: Screen_Shot_2022-11-02_at_12.42.25_am.png

Received on Tuesday, 1 November 2022 14:47:45 UTC