- From: Rob Manson <roBman@mob-labs.com>

- Date: Mon, 30 Sep 2013 10:36:43 +1000

- To: public-media-capture@w3.org

- CC: "public-ar@w3.org" <public-ar@w3.org>

- Message-ID: <5248C79B.6050704@mob-labs.com>

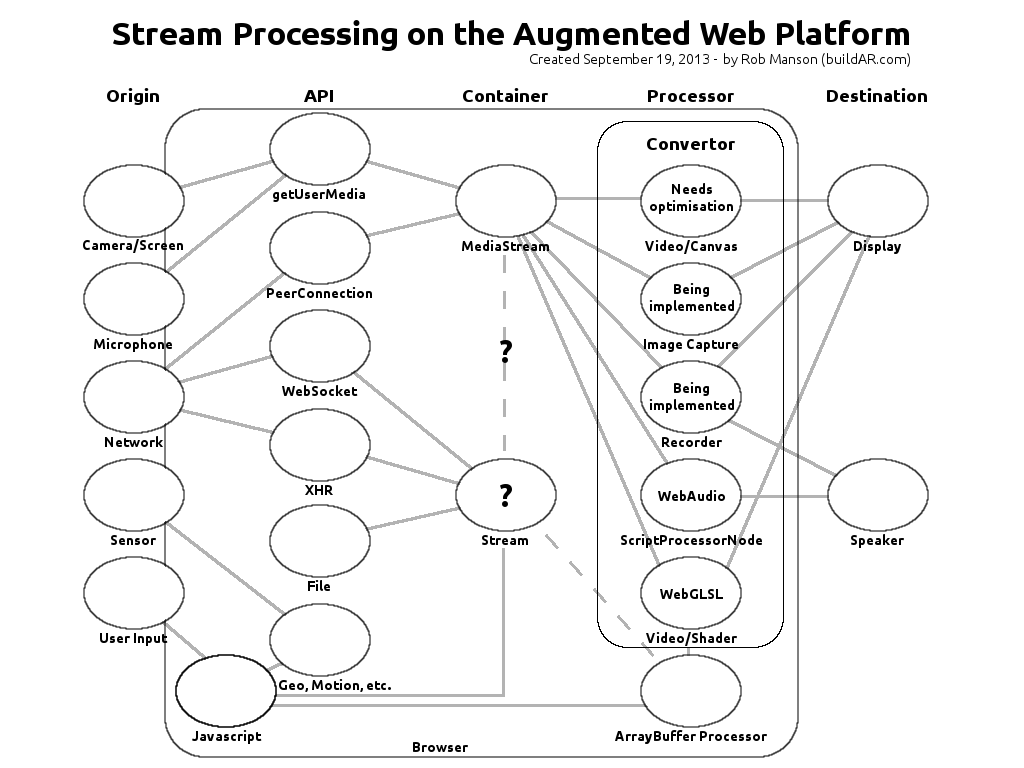

I drew this diagram recently in an attempt to put together a clear picture of how all the flows work/could work together. It seems to resonate with quite a few people I've discussed it with.

NOTE: This was clearly from the perspective of the post-processing pipeline scenarios we were exploring.

Code: https://github.com/buildar/getting_started_with_webrtc#image_processing_pipelinehtml

Slides: http://www.slideshare.net/robman/mediastream-processing-pipelines-on-the-augmented-web-platform

But the question marks in this diagram really relate directly to this discussion (at least in the write direction).

It would be very useful in many scenarios to be able to programmatically generate a Stream (just like you can with the Web Audio API). Plus all the other scenarios this type of overall integration would unlock (zoom, etc).

And then we would be able to use this Stream as the basis of a MediaStream.

And then we would be able to pass this MediaStream to a PeerConnection like normal.

There's a lot of discussion in many different threads/working groups and amongst many of the different browser developers about exactly this type of overall Stream based architecture...and the broader implications of this.

I think this overall integration is a key issue that could gain good support and really needs to be looked into. I'd be happy to put together a more detailed overview of this model and gather recommendations if there are no strong objections?

It seems like this would most sensibly fit under the Media Capture TF...is this the correct home for this?

PS: I'm about to present this pipeline work as part of a workshop at the IEEE ISMAR in Adelaide so I'll share the slides once they're up.
roBman

On 30/09/13 09:59, Silvia Pfeiffer wrote:
> On Mon, Sep 30, 2013 at 8:17 AM, Harald Alvestrand <harald@alvestrand.no> wrote:
>> On 09/29/2013 06:39 AM, Silvia Pfeiffer wrote:
>>> FWIW, I'd like to have the capability to zoom into a specific part of
>>> a screen share and only transport that part of the screen over to the
>>> peer.
>>>
>>> I'm not fuzzed if it's provided in version 1.0 or later, but I'd
>>> definitely like to see such functionality supported eventually.
>>
>> I think you can build that (out of strings and duct tape, kind of) by
>> screencasting onto a canvas, and then re-screencasting a part of that
>> canvas. I'm sure there's missing pieces in that pipeline, though - I
>> don't think we have defined a way to create a MediaStreamTrack from a
>> canvas yet.
>
> Interesting idea.
>
> It would be possible to pipe the canvas back into a video element, but
> I'm not sure if we can as yet take video element content and add it to
> a MediaStream.
>
>> It takes more than a zoom constraint to do it, however; at minimum you
>> need 2 coordinate pairs (for a rectangular viewport).
>
> True. I also think we haven't standardised screensharing yet, even if
> there is an implementation in Chrome.
>
> Silvia.

Attachments

- image/png attachment: stream_processing_overview-03.png

Received on Monday, 30 September 2013 00:36:51 UTC