- From: Patrick Besner <patrick@novosteer.com>

- Date: Tue, 5 Aug 2025 23:01:10 -0500

- To: Ronald Reck <rreck@rrecktek.com>

- Cc: Milton Ponson <rwiciamsd@gmail.com>, David Booth <david@dbooth.org>, W3C AIKR CG <public-aikr@w3.org>

- Message-ID: <CAOTs2MutpZwmeAq0sUtzqx08_XPMt0YUSB6KSkMfLswccWS8Zw@mail.gmail.com>

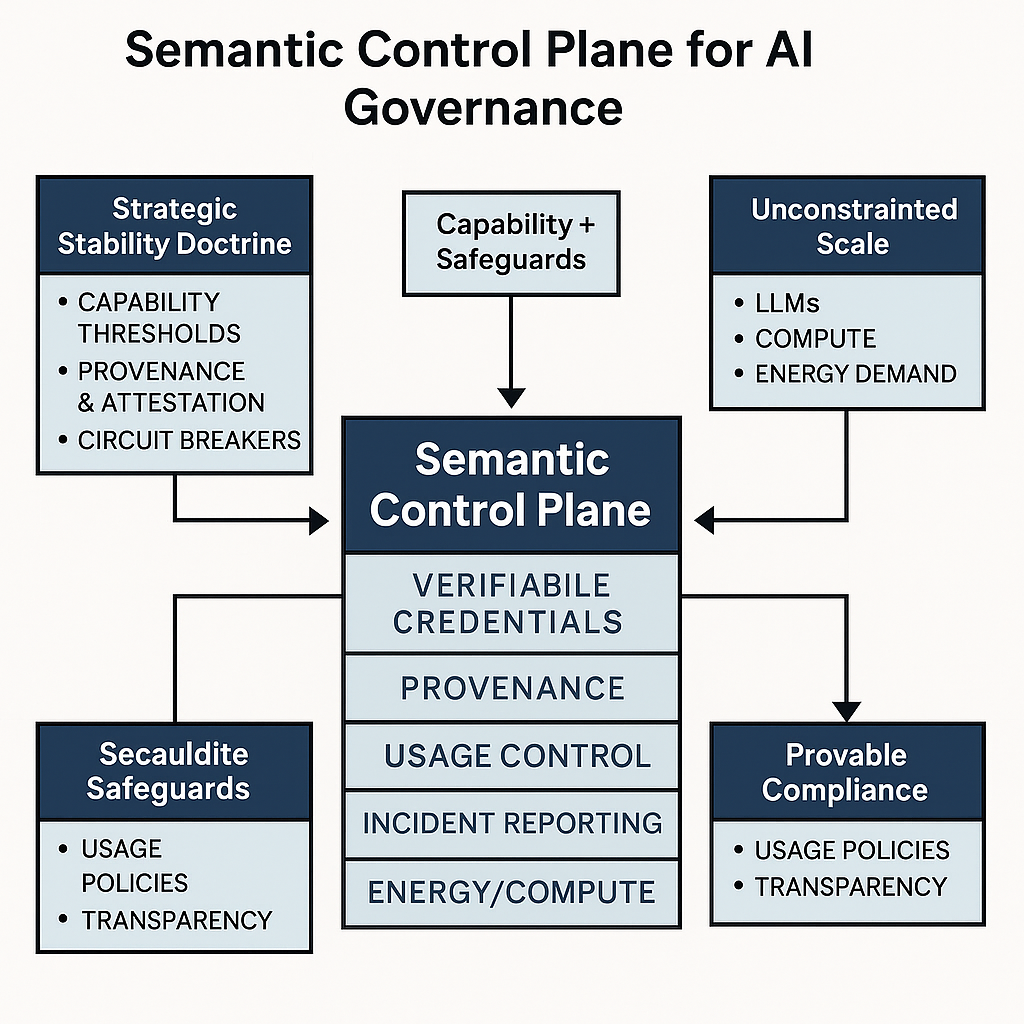

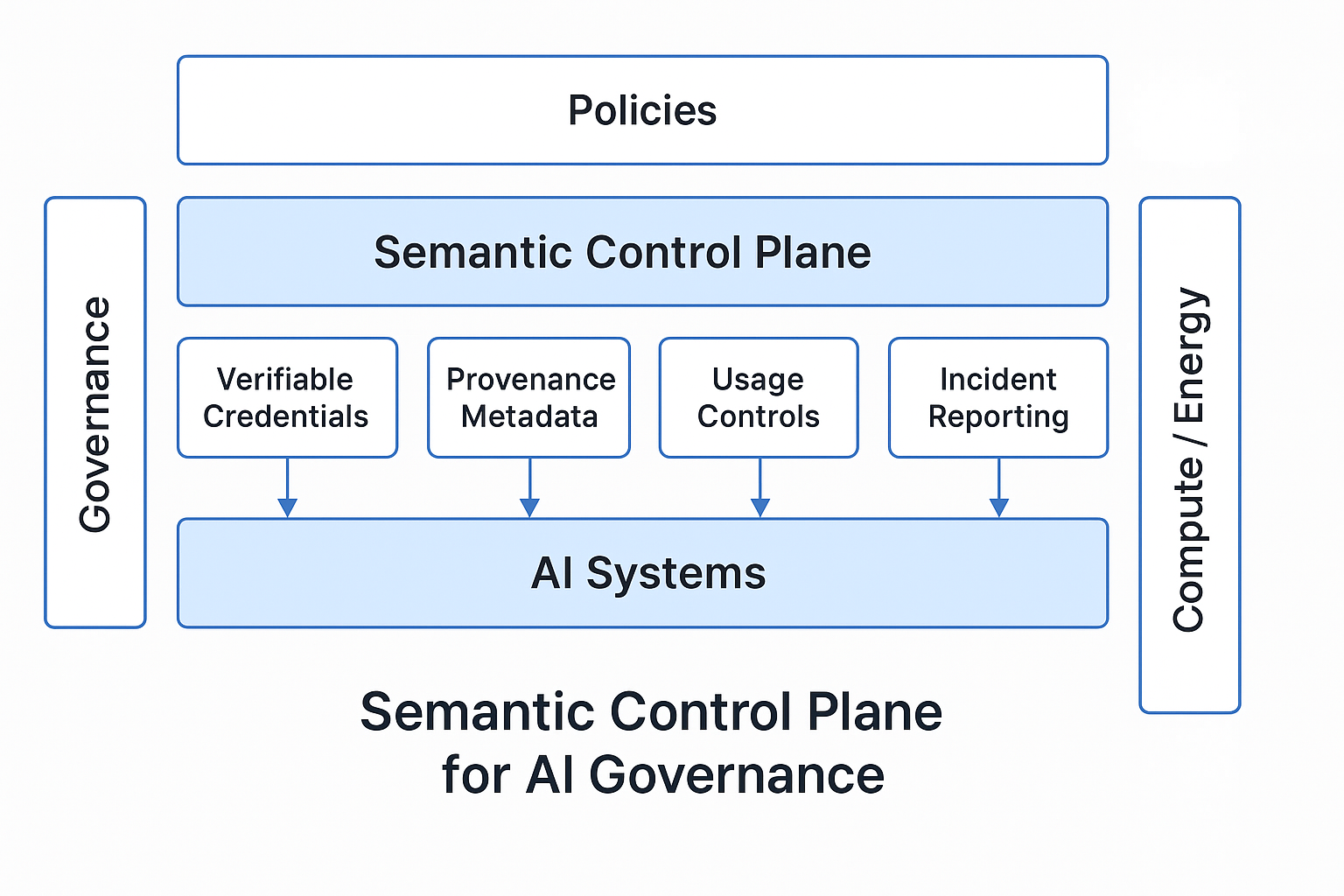

Colleagues,

AI is advancing faster than the governance structures meant to contain it. Two truths must guide us:

1. *Determined adversaries will exploit AI regardless of domestic legislation.*
2. *Unconstrained scale (LLMs + compute + energy demand) risks strategic and ecological instability.*

If those are givens, the question is no longer *whether* AI should advance, but *who will control it, under what doctrine, and with what safeguards*.

------------------------------

Strategic Stability Doctrine for AI

We need the AI equivalent of arms-control norms, not to halt progress but to ensure capability comes with enforceable boundaries:

- *Capability thresholds with automatic deployment limits* (e.g., API-only access at higher risk levels).
- *Cryptographically verifiable provenance* from data to deployment.
- *Circuit breakers* embedded in cloud and hardware execution keys.
- *Mandatory energy/resource transparency* for major training runs.

*Example:* a high-capability model able to design advanced malware should automatically trigger deployment restrictions, require attested monitoring, and be subject to rapid disablement if misuse is detected.

------------------------------

Why W3C Has a Unique Role

If other bodies can set the policy goals, *W3C KR can define the machine-readable substrate* that makes them enforceable and interoperable across the ecosystem:

- *Verifiable credentials* for models, datasets, and evaluations.
- *Provenance profiles* linking artifacts to responsible operators.
- *Usage-control vocabularies* for machine-interpretable policy enforcement.
- *Incident/misuse reporting schemas* for trusted intel exchange.
- *Energy/compute vocabularies* tied to sustainability metrics.

[image: Semantic_Control_Plane_AI_Governance.png]

------------------------------

Why Act Now

If we delay, the high ground will go to actors who do not share our values or constraints.
If we build these KR primitives now, regulators and other SDOs can adopt them immediately, embedding trust and accountability into the infrastructure *before it ossifies without us*.

------------------------------

Next Steps

1. Form 2–3 small task forces to draft core vocabularies and credential formats.
2. Run pilot programs with model labs and cloud providers.
3. Coordinate with NIST, ISO/IEC, C2PA, and other SDOs for alignment.

------------------------------

This is not just an ethics conversation; it is a matter of *strategic stability and resilience*. W3C can define the technical foundation before the norms harden without us.

[image: Semantic-CPAIG.png]

Patrick Besner
W3C AI Knowledge Representation | NIST AI RMF Collaborator
Founder & CEO, Novosteer Technologies Corp.

Patrick Besner
President, Chief Executive Officer (CEO)
Novosteer Technologies Corp
M 1-613-363-1498 | P 1-888-983-8333
E patrick@novosteer.com
191 Lombard Ave, 4th Floor
Winnipeg, MB, Canada, R3B 0X1
www.novosteer.com

On Tue, Aug 5, 2025 at 4:32 PM Ronald Reck <rreck@rrecktek.com> wrote:

> I share the concerns expressed here, but I believe it skirts the central threat: bad actors and adversarial nation states. Hand wringing and legislation alone won’t slow, much less deter, them from exploiting AI to outmaneuver and destabilize us.
>
> While ethics and sustainability are crucial, we need to confront the real problem. The assumption that AI development can be responsibly reined in while competitors weaponize it is naïve. The triad Milton points out (LLMs, data centers, and ecological fallout) matters, but none of it will be addressed if we don't first secure the geopolitical high ground.
>
> The question isn't whether AI should advance; it has and will continue to do so. To me the real question is: who will control it, under what doctrine, and to what end?
>
> On Tue, 5 Aug 2025 16:50:43 -0400, Milton Ponson <rwiciamsd@gmail.com> wrote:
>
> > This is the main problem with AI today. Unfortunately, putting legislation in place, as the European Union has done with the EU AI Act, Digital Services Act, Digital Markets Act, and General Data Protection Regulation, isn't enough. What we need in addition is experts, scientists and independent media exposing the dangers and ethical issues involved in misuse of science and technologies. Effective communication about these issues is being targeted in the USA, as scientists, engineers, academics and academia and independent press media are being attacked for questioning government and corporate narratives.
> >
> > Big Tech in this context consists of Big Oil and Gas, who stand to gain from the explosive growth in energy demand from hyperspace data centers, Big Internet, AI and Semiconductor Tech and the data centers, for which a separate executive order was signed deregulating this industry to accommodate accelerated growth. And with the EPA removing the Endangerment Finding, which lays the foundation for the EPA to coordinate federal climate change action, and the weakening of other environmental legislation for water, soil and wetlands, unlimited access to water and fossil-fuel-generated electric power is granted to data centers, which benefits all above-mentioned "Big" industries.
> >
> > The problem in fact is threefold: (1) the assumption that generative LLM AI is the future is wrong, (2) massive expansion of energy grids and data center capacity is wrong, and (3) the climate and environmental impacts of this industry will be ecologically and economically catastrophic.
> >
> > Very few people can effectively communicate this combination of issues.
> >
> > Finding experts, scientists and academics to tackle all three parts of the problem combined is the challenge.
> >
> > Milton Ponson
> > Rainbow Warriors Core Foundation
> > CIAMSD Institute-ICT4D Program
> > +2977459312
> > PO Box 1154, Oranjestad
> > Aruba, Dutch Caribbean
> >
> > On Tue, Aug 5, 2025, 13:14 David Booth <david@dbooth.org> wrote:
> >
> > > Interesting work and commentary on the impact of AI on society:
> > >
> > > https://ainowinstitute.org/publications/research/executive-summary-artificial-power
> > >
> > > A quote from their executive summary: "Those of us broadly engaged in challenging corporate consolidation, economic injustice, tech oligarchy, and rising authoritarianism need to contend with the AI industry or we will lose the end game. Accepting the current trajectory of AI proselytized by Big Tech and its stenographers as “inevitable” is setting us up on a path to an unenviable economic and political future--a future that disenfranchises large sections of the public, renders systems more obscure to those it affects, devalues our crafts, undermines our security, and narrows our horizon for innovation."
> > >
> > > Any new technology brings a complex combination of positive and negative impacts, and those with a vested interest in that technology inevitably promote the positives while minimizing the negatives. I share the authors' net concerns about the increasing consolidation of power that the latest breed of mega AI brings.
> > >
> > > There is something particularly pernicious about the intersection of unfettered capitalism, authoritarianism and big AI, which will inevitably be used to further accelerate developments that are increasingly harmful to society and our fragile planet.
> > >
> > > I am wondering what we can do to help prevent these downsides.
> > >
> > > Thanks,
> > > David Booth
>
> Ronald P. Reck
> http://www.rrecktek.com - http://www.ronaldreck.com

Attachments

- image/png attachment: Semantic-CPAIG.png

- image/png attachment: Semantic_Control_Plane_AI_Governance.png

Received on Wednesday, 6 August 2025 04:01:46 UTC