- From: Mike Beltzner <beltzner@mozilla.com>

- Date: Fri, 16 Mar 2007 14:57:28 -0700

- To: Mary Ellen Zurko <Mary_Ellen_Zurko@notesdev.ibm.com>

- Cc: public-wsc-wg@w3.org

- Message-Id: <B4368C05-77B2-46D4-B69E-8E26C66096DD@mozilla.com>

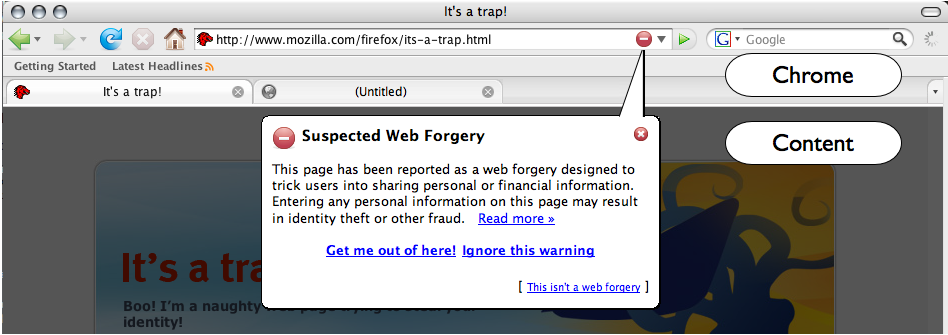

On 6-Mar-07, at 6:05 AM, Mary Ellen Zurko wrote: > Thanks Mike. > I've got some questions on the ones you put out there: > > > multiple indicators used to indicate status, such as SSL > connections being indicated by different color in the URL bar, > padlock icon in the URL bar and padlock icon in the status bar > > The theory on that is that robustness is enhanced by the > redundancy? That it's harder to attack 2 than it is 1, and 3 is > even better? Correct. The redundancy plus the fact that these signals are all hosted in application-controlled chrome areas are meant to enhance the robustness. > > unspoofable UI elements that cross the chrome-content border, > such as the anti-phishing warning bubble > > I'm unfamiliar with that that is, and why it's unspoofable. Can you > provide a pointer or say what it is and why it's unspoofable? I > didn't get a lot of good hits when I searched around. I don't know if there's a pointer, but I can try to describe it. The content area (of a tab or window) is separated from the tabs trip by a solid white line. Web pages control the space in the content area, but cannot draw in the application chrome. By drawing UI which crosses that boundary space, you get the advantage of placing it front and centre before the user while also linking it back to the protected space of chrome. I've attached a screenshot that shows how Firefox's anti-phishing UI takes advantage of this technique:

> > UI controls that are disabled until in focus for a certain amount
> > of time to prevent click-through and "whack a mole" attacks where
> > users are encouraged by nuisance elements to continually click in a
> > given location
>
> Ditto on this one. I'm unfamiliar with this, so could use a more
> detailed explanation (or a pointer) on what it does and how that
> increases robustness. I'm unfamiliar with click-through and "whack
> a mole" attacks. From what you say, it sounds a bit more like users
> getting used to asking questions they're ignored than an attack, so
> I must be misunderstanding. Would this be an attack where a script
> puts up a bunch of dialogs where the "warning" dialog will appear,
> one after the other, to get the user hitting the click, and having
> them allow the attack through the warning dialog, and not even
> noticing? Nice thought. Have there been any of those in the wild
> (just curious).

The JavaScript alert() method allows a web page to pop up a modal dialog with arbitrary content. Imagine a script that runs a loop in which 50 such alert boxes are popped up. Now imagine the 51st action is a method that sets a homepage or requests privileges to install software. The poor, beleaguered user has just started clicking like mad to get rid of all of these dialogs, and inadvertently hits "OK" to a question asking if they trust the software. Boo.

One solution is that when presenting a dialog that's requesting permission to do something potentially dangerous, we put a timeout on the "OK" button so that it's not instantly enabled. I'm not particularly thrilled with the technique, as it tends to annoy users, but it also tends to work.

We've definitely seen these sorts of attacks in the wild, although due to mechanisms such as the one above, they've become less popular recently.
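To make the timeout idea concrete, here is a minimal TypeScript sketch of the logic, not Firefox's actual implementation. The `GuardedDialog` class, the `rapidClickerGrantsPermission` helper, the 2000 ms delay and the 150 ms click interval are all hypothetical names and values chosen for illustration. The clock is passed in so the behaviour can be simulated without a browser.

```typescript
// Hypothetical model of a permission dialog whose "OK" button only
// becomes active after the dialog has held focus for a minimum time.
class GuardedDialog {
  private focusedAt: number | null = null;
  private readonly enableDelayMs: number;
  private readonly now: () => number;

  constructor(enableDelayMs: number, now: () => number) {
    this.enableDelayMs = enableDelayMs;
    this.now = now;
  }

  // Record the moment the dialog gained focus.
  focus(): void {
    this.focusedAt = this.now();
  }

  // "OK" is enabled only once the dialog has been focused long enough.
  isOkEnabled(): boolean {
    return (
      this.focusedAt !== null &&
      this.now() - this.focusedAt >= this.enableDelayMs
    );
  }

  // Returns true if the click was accepted, false if it was ignored.
  clickOk(): boolean {
    return this.isOkEnabled();
  }
}

// Simulate the attack described above: the user dismisses 50 nuisance
// alerts at a steady pace, then the 51st dialog asks for permission
// and receives the next click at the same pace.
function rapidClickerGrantsPermission(
  enableDelayMs: number,
  clickIntervalMs: number,
): boolean {
  let clock = 0; // simulated time in milliseconds
  for (let i = 0; i < 50; i++) {
    clock += clickIntervalMs; // one click per nuisance alert
  }
  const dialog = new GuardedDialog(enableDelayMs, () => clock);
  dialog.focus();
  clock += clickIntervalMs; // the reflexive 51st click
  return dialog.clickOk();
}

console.log(rapidClickerGrantsPermission(2000, 150)); // false: click ignored
console.log(rapidClickerGrantsPermission(0, 150)); // true: no delay, attack succeeds
```

The trade-off is the one noted above: a deliberate user also has to wait out the delay before "OK" responds, which is where the annoyance comes from.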
> > strict cross-site scripting execution policies to ensure that
> > content is being rendered from appropriate sources
>
> Here's a reference (in case we need one):
> http://en.wikipedia.org/wiki/Cross_site_scripting
> It seems that this would be a merit of the status quo that we
> missed in the current draft of the Note. Policies against accessing
> data to/from other sites through web user agent scripting languages.

Agreed.

cheers,
mike

Attachments

- image/png attachment: chrome-content.png

Received on Saturday, 17 March 2007 16:56:36 UTC