- From: Bassbouss, Louay <louay.bassbouss@fokus.fraunhofer.de>

- Date: Fri, 7 Feb 2014 11:56:53 +0000

- To: Anton Vayvod <avayvod@google.com>

- CC: "Rottsches, Dominik" <dominik.rottsches@intel.com>, "public-webscreens@w3.org" <public-webscreens@w3.org>

- Message-ID: <3958197A5E3C084AB60E2718FE0723D4741BBA23@FEYNMAN.fokus.fraunhofer.de>

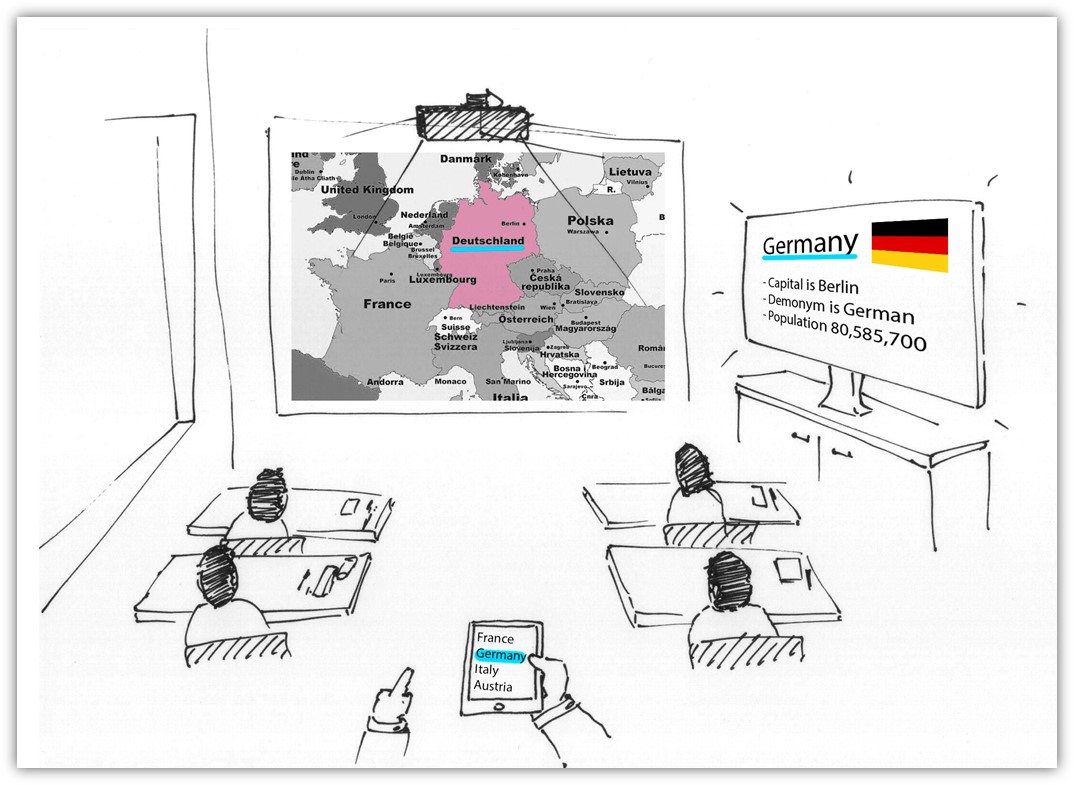

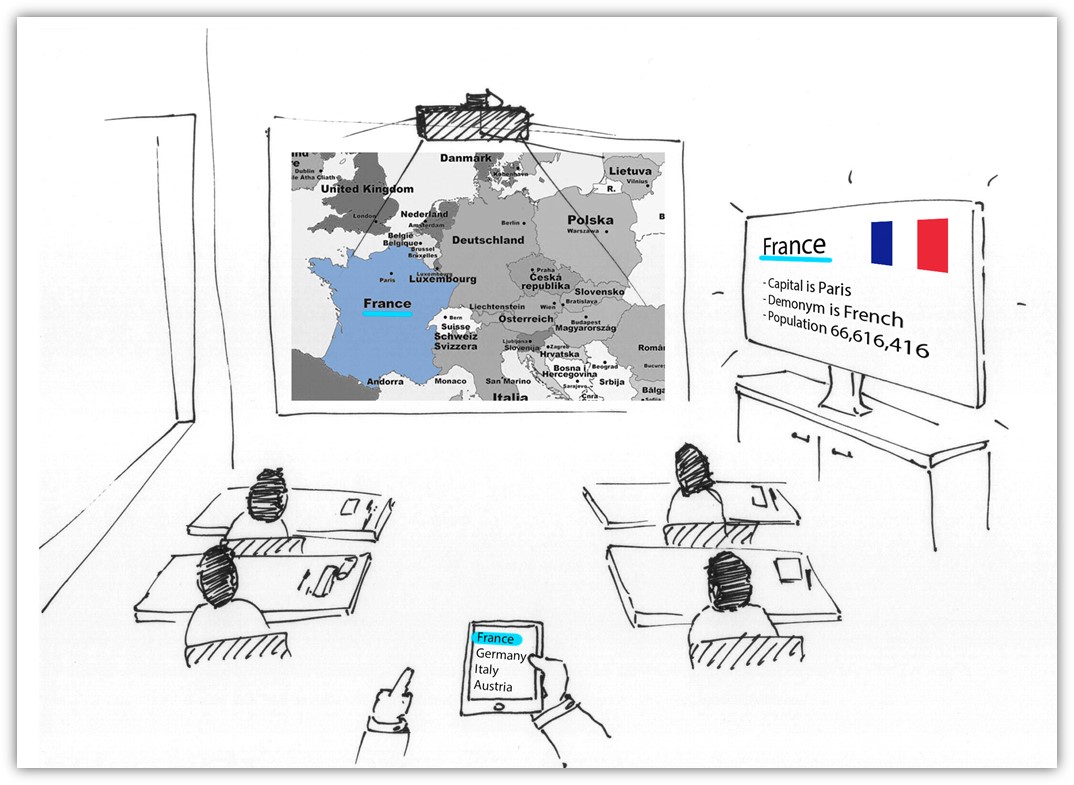

Hi Anton,

Please find my comments inline.

Best regards,
Louay

From: Anton Vayvod [mailto:avayvod@google.com]
Sent: Thursday, 6 February 2014 18:40
To: Bassbouss, Louay
Cc: Rottsches, Dominik; public-webscreens@w3.org
Subject: Re: New Proposal now up for Discussion on the Wiki

Hi Louay,

This use case looks very interesting. It also covers the generic presentation use case where there's a second "second screen" that is only visible to the presenter (e.g. with notes). It also seems agnostic to the question of one or two UAs.

Yes, both use cases are the same from a requirements perspective. We have already implemented the presentation use case you mentioned: controller on the smartphone, slides on one screen and notes on another screen.

How would the UI for establishing these two connections look from your perspective? Is there an existing solution (not web based, anything) that handles this kind of use case? Is it supported by common hardware? I don't think Android can cast to more than one Chromecast device or mirror to more than one Wi-Fi Direct display (though together that gives two screens, so I'm not saying the use case with two displays is impossible).

I don't think there is a mobile platform (at least Wi-Fi Display on Android or AirPlay on iOS) that natively supports connecting multiple displays at the same time, and I don't think mobile platforms will support multiple displays natively in the future. For me, multiple displays make sense only at the application level. In our case, we used Android HDMI sticks as displays (each running a browser). The setup looks something like this:

- An Android smartphone/tablet as the controller, and an Android HDMI stick for each display.
- All devices run our experimental browser Famium (based on Chromium). On the Android sticks we also run a simple service that implements something similar to a DIAL server, but using Bonjour instead of SSDP (we only allow launching web applications).
Launch requests result in opening the Famium Activity and pointing it to the requested URL. For communication we used WebRTC data channels.
- The Famium browser running on the Android smartphone acts as something similar to a DIAL client. We provide a JavaScript bridge so that the controller application can access the client directly.

I imagine the controller (on a tablet in this case) would have two URLs, one for each presentation, and would have to perform a discovery request for each URL and then check that the available screens allow presenting on two at the same time (e.g. that it's not just one screen that is the same). Then the user would have to trigger two selection dialogs, one for each content page, selecting the right screen each time.

Yes, in our setting the flow was as follows:

- The teacher opens the controller application on his smartphone or tablet in the Famium browser.
- The controller application starts a Bonjour discovery (using a specific service name), and each device that is found or disappears is added to or removed from a device list.
- The teacher can now launch the map application and the info application separately on each Android stick (two separate steps). Launching is triggered by dragging an element representing the application to launch and dropping it on the UI element representing the device.

As you can see from my description, we didn't address security/privacy aspects, since for us it was a proof-of-concept prototype focusing only on the multiscreen aspect.

Overall, it seems like the API would allow the multiple-screen use case as it is, with some effort from the user and the developers. Do you think we need to add something to the spec at this point? Personally, I'd rather focus on single-screen cases to limit the scope of problems we are trying to solve.
We could enhance the spec later on for the multiple-screen case by providing things like broadcast messaging (since "next slide" or "select country X" should go to both screens) or filtering out the devices already requested for presenting some other content.

I agree with you that we don't need to add anything to the spec at this point. For now, broadcast messaging can be realized as multiple unicast channels between the controller and each display.

Thanks,
Anton

On Thu, Feb 6, 2014 at 4:56 PM, Bassbouss, Louay <louay.bassbouss@fokus.fraunhofer.de> wrote:

Hi Dominik, all,

Please find attached a use case (each graphic is a use case step) illustrating the usage of multiple displays (here 2 displays) in the same session. The use case is about the "classroom of the future", where the teacher opens a web application containing "learning material" he wants to show to the pupils. He can display content on two displays (e.g. a map on one screen and text material on another screen) and control both from his tablet. I can explain the use case in more detail and put it on the wiki page. What do you think?

Step 1: [image001.jpg]

Step 2: [image002.jpg]

Best regards,
Louay

-----Original Message-----
From: Rottsches, Dominik [mailto:dominik.rottsches@intel.com]
Sent: Thursday, 6 February 2014 12:20
To: public-webscreens@w3.org
Cc: Anton Vayvod
Subject: Re: New Proposal now up for Discussion on the Wiki

Hi Anton, Louay,

On 06 Feb 2014, at 12:38, Anton Vayvod <avayvod@google.com> wrote:

as shown here: http://roettsch.es/player_target_list.png

This server requires a login when I click on the link. However, I think I understand what you mean, and thanks for reminding us of this important use case.

Apologies, this should work now.
On 06 Feb 2014, at 12:38, Anton Vayvod <avayvod@google.com> wrote:

In this case the YouTube page is aware of the available presentation devices and shows them using its own styling and UI. For this to be possible via the Presentation API, enumeration with human-readable names would be required.

I think we could go the other way: allow the site to provide additional items to the selection list. So YouTube would add a list of its own device IDs for these manually paired devices. I believe this is what the new Cast Web SDK does.

Just to clarify: you mean allowing the page to add items to the selection dialog that the UA shows? Would you have a reference or example for how this is done in the Cast Web SDK? Could you perhaps elaborate a bit more on how you'd see this working? I am skeptical that this works generically while still providing a logical and consistent Presentation API that would not break custom casting implementations like the one we see here (YouTube pairing). Imagine: the page calls requestSession() or present() or a similarly named function, and has previously called something like addFlingMenuEntry("CustomPairingTarget"). How would you handle the result if, for the custom server-side pairing implementation, you just need the "CustomPairingTarget" name returned and then do proprietary communication with the server in order to control the remote instance?

There's something to be said for both approaches. The page author saves some time by not having to provide a selection mechanism. On the other hand, I'd still say it gives the page more flexibility if it can use the human-readable names for its own selection mechanism.

Louay, Fraunhofer raised a GitHub issue on supporting multiple screens; maybe you could elaborate a bit on the use cases you had in mind?

Dominik
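
The controller-side pieces of Louay's classroom prototype (a device list kept in sync with Bonjour discovery, one channel per display once an application has been launched on it, and "broadcast" realized as multiple unicast sends, as suggested in the thread) can be sketched roughly as follows. This is a hypothetical TypeScript illustration only: `MultiscreenController`, `DisplayDevice`, `UnicastChannel` and their methods are invented names, not part of Famium, its JavaScript bridge, the Presentation API or the Cast SDK. `UnicastChannel` stands in for something like a WebRTC data channel, and the actual discovery and launch steps are assumed to happen elsewhere.

```typescript
// Hypothetical sketch; all names below are illustrative assumptions,
// not an existing browser, Famium or Presentation API interface.

/** A display found by discovery (e.g. a Bonjour browse), as assumed here. */
interface DisplayDevice {
  id: string;
  name: string;
}

/** Minimal stand-in for one unicast channel, e.g. a WebRTC data channel. */
interface UnicastChannel {
  send(message: string): void;
}

class MultiscreenController {
  // Device list kept in sync with discovery "found"/"lost" events.
  private readonly devices = new Map<string, DisplayDevice>();
  // One unicast channel per display that runs a launched application.
  private readonly channels = new Map<string, UnicastChannel>();

  onDeviceFound(device: DisplayDevice): void {
    this.devices.set(device.id, device);
  }

  /** Removes the device and drops its channel, so broadcasts skip it. */
  onDeviceLost(deviceId: string): void {
    this.devices.delete(deviceId);
    this.channels.delete(deviceId);
  }

  listDevices(): DisplayDevice[] {
    return Array.from(this.devices.values());
  }

  /**
   * Records the channel to a display after an application was launched on it
   * (e.g. the teacher dropped the "map" app onto that display).
   * Throws if the device is not in the discovered list.
   */
  attach(deviceId: string, channel: UnicastChannel): void {
    if (!this.devices.has(deviceId)) {
      throw new Error(`unknown device: ${deviceId}`);
    }
    this.channels.set(deviceId, channel);
  }

  /** Sends to one display only; no-op if that display has no channel. */
  sendTo(deviceId: string, message: string): void {
    this.channels.get(deviceId)?.send(message);
  }

  /** "Broadcast" as one unicast send per connected display; returns the count. */
  broadcast(message: string): number {
    this.channels.forEach((channel) => channel.send(message));
    return this.channels.size;
  }
}

// Example: two HDMI-stick displays, each with a fake channel that records messages.
const received: string[] = [];
const controller = new MultiscreenController();
controller.onDeviceFound({ id: "stick-1", name: "Map display" });
controller.onDeviceFound({ id: "stick-2", name: "Text display" });
controller.attach("stick-1", { send: (m) => { received.push(`stick-1 <- ${m}`); } });
controller.attach("stick-2", { send: (m) => { received.push(`stick-2 <- ${m}`); } });
controller.broadcast("select country X"); // returns 2: one send per display
```

Because each display has its own channel in this sketch, a command such as "next slide" can reach every screen through `broadcast`, while screen-specific content (the map versus the text material) goes through `sendTo`. No new spec primitive is needed for that, which matches the conclusion reached in the thread.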

Attachments

- image/jpeg attachment: image001.jpg

- image/jpeg attachment: image002.jpg

Received on Friday, 7 February 2014 11:58:27 UTC