- From: Henry Story <henry.story@bblfish.net>

- Date: Thu, 12 Jul 2018 20:09:36 +0200

- To: ryan@sleevi.com

- Cc: Dave Crocker <dcrocker@gmail.com>, public-web-security@w3.org

- Message-Id: <DBFB6B57-4951-4710-BDF8-31D342528870@bblfish.net>

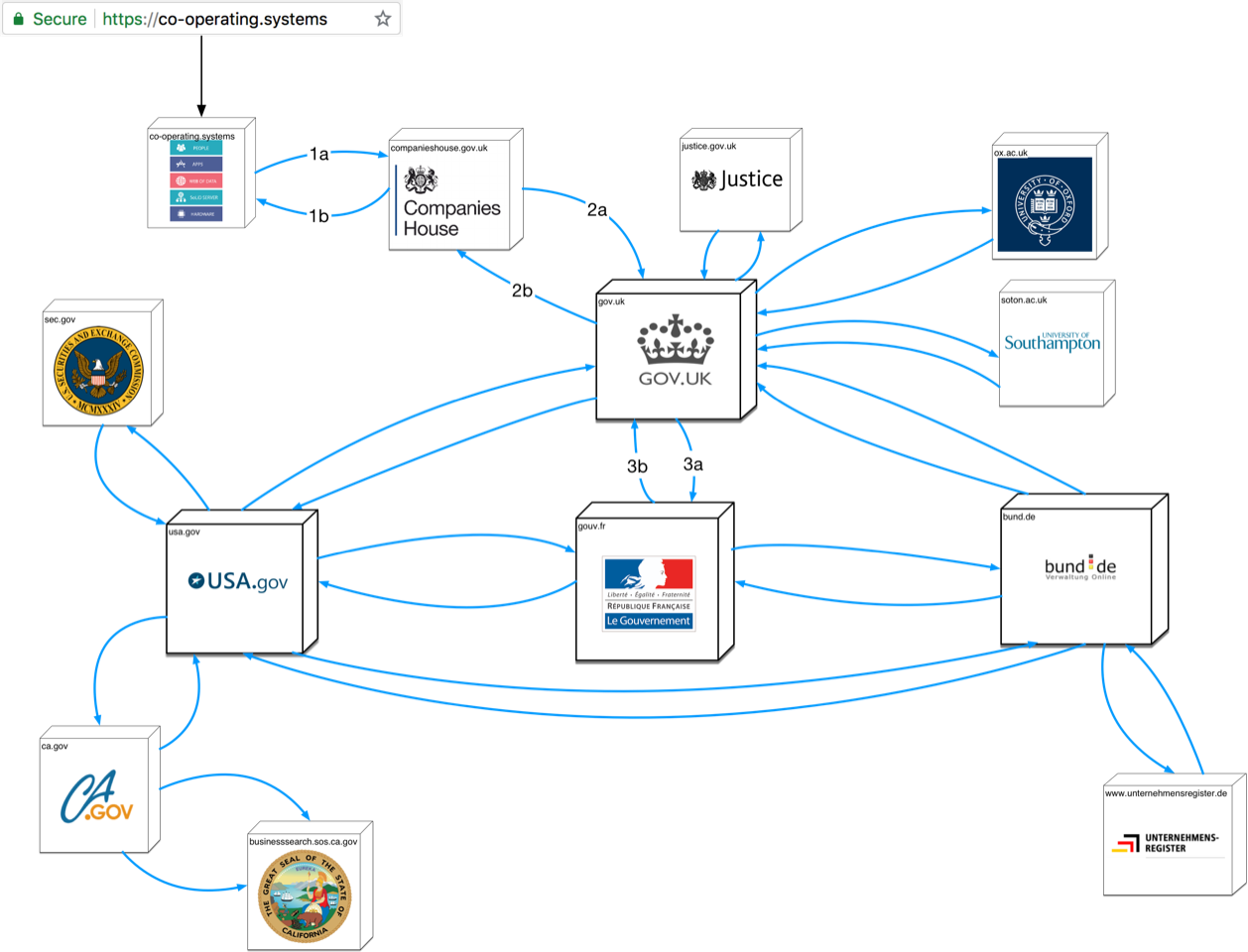

> On 12 Jul 2018, at 19:32, Ryan Sleevi <ryan@sleevi.com> wrote:
>
> On Thu, Jul 12, 2018 at 12:06 PM Henry Story <henry.story@bblfish.net> wrote:
>
>> On 12 Jul 2018, at 15:34, Dave Crocker <dcrocker@gmail.com> wrote:
>>
>> On 7/12/2018 5:19 AM, Henry Story wrote:
>>> I have recently written up a proposal on how to stop (https) Phishing,
>>
>> http://craphound.com/spamsolutions.txt
>>
>> originally written for email, but it applies here, too.
>
> :D
>
> But, not really: the architectural differences between the web and e-mail are very big. Furthermore, the problems looked at are completely different: that questionnaire is for spam, and this is a proposal against phishing.
>
> These problems are more similar than different, and what Dave linked to is just as applicable. They share complex social and political issues, and technologists that ignore that are no doubt likely to be ignored.

Except that I am not ignoring them. The subtitle of the post, "a complete socio/technical answer", points at that.

> Then the type of solution I provide is very unlikely to have ever been thought of pre-web, given the type of technologies involved. Also, I have spoken to people from Symantec and presented this at the Southampton cybersecurity reading group, so it has had some initial tyre kicking already.
>
> Given the lack of familiarity with Gutmann’s work, which in many ways has served as a basic reader into the PKI space, I would be careful about speculating about what ideas may or may not have been considered.

I actually refer to Gutmann's 2014 book in the answer on UI here: https://medium.com/@bblfish/response-to-remarks-on-phishing-article-c59d018324fe#1a75

> Similarly, given that Symantec has left the PKI business after a series of failures, it’s unclear if you’re speaking of the current entity or the former.

I just said I have kicked the tires a bit, not that it has gone through a full review.
The Spamsolution questionnaire would make sense as a first mail to send to someone who had not thought about the problem at all, as an incentive to get them to kick the tires. Dave Crocker does not know me, nor whether I had done any initial work on the topic, so I am OK with his sending it out. It's quite funny, actually.

> The question of an interrelated distributed set of links - and of authority for different name spaces - is not new or original in this space. It’s true whether you look at the Web of Trust or when you consider the PKI’s support for mesh overlays with expressions of degrees of reciprocality of trust.
>
> Similarly, the suggestion of recognizing the different “organs”, as you suggest, for different degrees of validation is also not new. You can see this from the beginning of the X.509 discussions, in which ITU-T would maintain a common naming directory from which you could further express these links in a lightweight, distributed directory access protocol.

Well, there has been a lot of progress in decentralised data since LDAP, whose X.500 roots are a pre-web technology dating back to the 1980s. But you are right: a good thesis would need to look at what advances have been made in the field of linked data, and at why things that can be done now could not be done then.

> There is, underneath it all, a flawed premise resting on the idea that X.509 is even relevant to this, but that criticism could easily occupy its own voluminous email detailing the ways in which certificates are not the solution here. The basic premise - that what we need is more information to present to users - is itself critically and irredeemably flawed.

X.509 is not necessary at all to my argument. It is relevant only insofar as X.509 certificates are what is currently deployed in the browser. You could move to RFC 6698 (DNS-Based Authentication of Named Entities (DANE)) and you would have the same problem: all you would know about a server is that you have connected to it.
I.e. you just know this:

But what people in the real world need to know is the full web of relations that the site has to legal institutions they can turn to if they want to lodge a complaint.

> Philosophically the answer presented is very different too. You can see that with the first line of that "questionnaire":
>
> Your post advocates a
> ( ) technical ( ) legislative ( ) market-based ( ) vigilante
> approach to fighting spam.
>
> The approach here is none of those: it is organological [1], in the sense that it thinks about the problem in a way that takes the body politic (the organs of the state), law, the individual and technology into account as forming a whole that co-individuates itself. So to start with, it does not fit the first choice box...
>
> But you don't need to understand that philosophy to understand the proposal. You just have to be open to new possibilities.
>
> Henry
> http://bblfish.net/
>
> [1] There was a conference on this here, for example.
> http://criticallegalthinking.com/2014/09/19/general-organology-co-individuation-minds-bodies-social-organisations-techne/
>
>>
>> And fwiw, for any UX issue, there is no certitude in the absence of very specific testing.
>
> Yes, of course. I go into the problem with the https UX more carefully here:
>
> https://medium.com/@bblfish/response-to-remarks-on-phishing-article-c59d018324fe#1a75
>
> I argue there, with pictures to go along, that the problem is that there is not enough information in X.509 certificates for them to make sense to users. Even in EV certs. What is needed is live information.
>
>>
>> d/
>> --
>> Dave Crocker
>> Brandenburg InternetWorking
>> bbiw.net

Attachments

- text/html attachment: stored

- image/gif attachment: CompaniesHouse-urlbar-reference.gif

- image/png attachment: WebOfNations_response.png

Received on Thursday, 12 July 2018 18:10:08 UTC