- From: Rob Manson <roBman@mob-labs.com>

- Date: Thu, 02 Sep 2010 18:48:28 +1000

- To: Alex Hill <ahill@gatech.edu>, "Public POI @ W3C" <public-poiwg@w3.org>

- Message-Id: <1283417308.3423.1065.camel@localhost.localdomain>

Hey Alex,

> If I understand correctly, you are suggesting that "triggers" should

> be formulated in a flexible pattern language that can deal with and

> respond to any form of sensor data.

That's a great summary. I may re-use that if you don't mind 8)

> This would be in contrast to the strictly defined "onClick" type of

> events in JavaScript or the existing VRML trigger types such as

> CylinderSensor [1].

Well...I see it more as creating a broader, more flexible super-set that

wraps around current ecma style events, etc.

> I think this idea has merit and agree that some significant

> flexibility in the way authors deal with the multiple visual and

> mechanical sensors at their disposal is vital to creating compelling

> AR content.

> However, the flexibility that this approach would give, seems at first

> glance, to take away some of the ease of authoring that "baked" in

> inputs/triggers give.

Well...I think we're generally on the same track here. But let me

expand my points a little below. I hope I can present a case that this

could make this type of authoring "easier" rather than "less easy".

> And, it is not obvious to me now how one incorporates more general

> computation into this model.

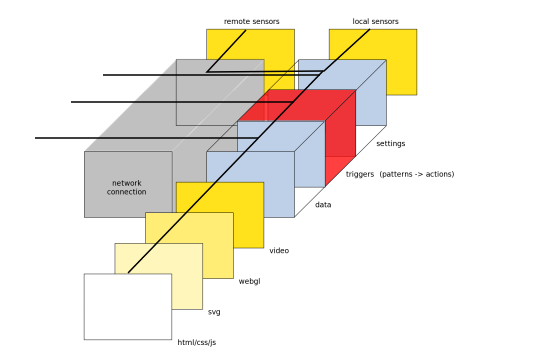

Attached is a simple diagram of the type of Open Stack that I think will

enable this type of standardisation. However, there is a lot of hidden

detail in that diagram so I would expect we may need to bounce a few

messages back and forth to walk through it all 8)

This type of system could easily be implemented in one of the really

modern browsers simply within JavaScript. However, to get the full

benefit of the dynamic, sensor rich, new environment it would be built

as a natively enhanced browser (hopefully by many vendors).

My underlying assumption is that all of this should be based upon open

web standards and the existing HTTP related infrastructure.

> Take the aforementioned CylinderSensor; how would you describe the

> behavior of this trigger using patterns of interest?

That is a good question. I think tangible examples really help our

discussions. CylinderSensor binds (at quite a programmatic level)

pointer motion to 3D object manipulation/rotation.

My proposal would allow you to treat the pointer input as one type of

sensor data. With a simple pattern language you could then map this (or

at least defined patterns within this) to specific URIs. In many ways

this could be seen as similar to a standardised approach to creating

listeners.

So the first request is the sensor data event.

The response is 0 or more URIs. These URIs can obviously contain

external resources or javascript:... style resources to link to dynamic

local code or APIs. The values from the sensor data should also easily

be able to be mapped into the structure of this URI request. e.g.

javascript:do_something($sensors.gps.lat)
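As a rough sketch of that mapping, assuming the "$sensors.<sensor>.<field>" placeholder syntax from the example above (the helper name expandTemplate and the sample coordinates are illustrative assumptions, not part of the proposal):

```javascript
// Hypothetical helper: expand $sensors.<sensor>.<field> placeholders
// in a URI template using the current sensor readings.
function expandTemplate(template, sensors) {
  return template.replace(/\$sensors\.(\w+)\.(\w+)/g, (match, sensor, field) => {
    const reading = sensors[sensor];
    // Leave unknown placeholders untouched so a missing sensor is easy to spot.
    if (reading === undefined || reading[field] === undefined) return match;
    return encodeURIComponent(String(reading[field]));
  });
}

// Assumed sample reading, for illustration only.
const sensors = { gps: { lat: -33.8688, lon: 151.2093 } };
const uri = expandTemplate("javascript:do_something($sensors.gps.lat)", sensors);
console.log(uri);
```

Placeholders that refer to a sensor the client does not have are left as-is, so a trigger author can see which value was missing.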

The processing of these generated URIs is then the second layer of

requests. And their responses are the second layer of response. These

responses could be any valid response to a URI. For standard http:// or

similar requests the response could be HTML, SVG, WebGL or other valid

mime typed content. For javascript: style requests the responses can be

more complex and may simply be used to link things like orientation to

the sliding of HTML, SVG or WebGL content in the x dimension to simulate

a moving point of view.
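To make the orientation case concrete, here is a minimal sketch of how a javascript: handler might turn a compass heading into a horizontal offset for a piece of content. The function name, the field-of-view value and the viewport width are all assumptions for illustration:

```javascript
// Hypothetical: map a compass heading to the x position (in pixels) at which
// content located at targetBearingDeg should be drawn.
function headingToOffsetX(headingDeg, targetBearingDeg, fovDegrees, viewportWidth) {
  // Signed smallest angle from the heading to the target, in (-180, 180].
  let delta = (targetBearingDeg - headingDeg) % 360;
  if (delta > 180) delta -= 360;
  if (delta <= -180) delta += 360;
  // delta = 0 puts the content at the centre of the viewport.
  return viewportWidth / 2 + (delta * viewportWidth) / fovDegrees;
}

// Assumed values: 60 degree field of view, 600 pixel wide viewport.
console.log(headingToOffsetX(350, 10, 60, 600));
```

As the heading changes, the same content slides left or right, which is the "moving point of view" effect described above.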

But pointers are just one very simple type of input sensor. I'm sure

we'd all agree that eye tracking, head tracking, limb/body tracking and

other more abstract gestural tracking will soon be flooding into our

systems from more than one point of origin.

> While there may be standards that will eventually support this (i.e.

> the W3C Sensor Incubator Group [2]), I wonder if this type of "sensor

> filtering language" is beyond our scope.

This could well be true, however I think it would simply be building on

top of the work from the SSN-XG. And I also think that by the time we

completed this work just for a lat/lon/alt based Point of Interest the

standard would be out-dated as this space is moving so quickly. From my

perspective this window is only a matter of months and not years.

With this simple type of language and the most basic version of this

Open AR Client Stack a web standards version of any of the current

Mobile AR apps could easily be built.

1. lat/lon/alt/orientation are fed in as sensor data

2. based on freshness/deltas then the following requests are composed

a - GET http://host/PointsOfInterest?lat=$lat&lon=$lon

b - javascript:update_orientation({ z:$alt, x:$x, y:$y })

3. The results from 2a are loaded into a local data store (js object)

4. The 2b request updates the current viewport using the orientation

params and the updated data store.
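Step 2 above could be sketched roughly as follows. The thresholds, the reading field names and the function name composeRequests are assumptions; the two request shapes are the ones from 2a and 2b:

```javascript
// Assumed thresholds for deciding that a reading is "fresh" enough to act on.
const MIN_MOVE_DEGREES = 0.0005; // assumed lat/lon change worth a new POI request
const MIN_TURN_DEGREES = 1;      // assumed: ignore sub-degree orientation jitter

// Compare the new reading with the previous one and compose 0 or more requests.
function composeRequests(previous, current) {
  const requests = [];
  const moved =
    previous === null ||
    Math.abs(current.lat - previous.lat) >= MIN_MOVE_DEGREES ||
    Math.abs(current.lon - previous.lon) >= MIN_MOVE_DEGREES;
  const reoriented =
    previous === null ||
    Math.abs(current.x - previous.x) >= MIN_TURN_DEGREES ||
    Math.abs(current.y - previous.y) >= MIN_TURN_DEGREES ||
    current.alt !== previous.alt;
  if (moved) {
    // 2a: fetch nearby points of interest.
    requests.push(`http://host/PointsOfInterest?lat=${current.lat}&lon=${current.lon}`);
  }
  if (moved || reoriented) {
    // 2b: refresh the viewport from the orientation and the data store.
    requests.push(
      `javascript:update_orientation({ z:${current.alt}, x:${current.x}, y:${current.y} })`
    );
  }
  return requests;
}

const reading = { lat: 1, lon: 2, alt: 3, x: 4, y: 5 };
console.log(composeRequests(null, reading));
```

A reading that has not changed produces no requests at all, which is where the freshness/delta check saves traffic.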

NOTE: One key thing is that the current browser models will need to be

re-thought to be optimised for dynamic streamed data such as

orientation, video, etc.

> The second main point you make is that we should reconsider the

> request-response nature of the internet in the AR context.

> Again, this is an important idea and one worth seriously considering.

> But in a similar fashion to my concerns about pattern of interest

> filtering, I worry that this circumvents an existing model that has

> merit.

> The data-trigger-response-representation model you suggest already

> happens routinely in rich Web 2.0 applications.

> The difference is that it happens under the programmatic control of the

> author where they have access to a multitude of libraries and

> resources (i.e. jQuery, database access, hardware, user settings,

> etc.)

I think that's the great opportunity here. To take the best practices

and benefits from this type of 2.0 interaction...and abstract this out

to integrate the growing wave of sensor data AND make it more

accessible/usable to the common web user.

The type of system outlined in the attached diagram would extend this in

two ways. Each of the browser vendors that implement this type of

solution could compete and innovate at the UI level to make the full

power of this standard available through simple

point/click/tap/swipe/etc. style interfaces.

They could also compete by making it easy for developers to create

re-usable bundles at a much more programmatic level.

Outside of this, publishers can simply use the existing open HTML, SVG

and WebGL standards to create any content they choose. This leaves this

space open to re-use the existing web content and services as well as

benefiting as that space continues to develop.

And the existing HTTP infrastructure already provides the framework for

cache management, scalability, etc. But I'm preaching to the choir here

8)

> (this point is related to another thread about (data)<>-(criteria) [3]

> where I agree with Jens that we are talking about multiple

> data-trigger-responses)

I agree. Enabling multiple triggers with overlapping input criteria,

each of which can create 0 or more linked requests, delivers just that.
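A minimal sketch of that idea, where several triggers are checked against the same sensor data and each contributes 0 or more requests. The trigger shape (name, matches, actions), the sample values and the URLs are hypothetical:

```javascript
// Hypothetical trigger shape: { name, matches(sensors) -> boolean, actions: [uris] }.
// Every matching trigger contributes all of its actions, so overlapping
// criteria simply produce more linked requests.
function fireTriggers(triggers, sensors) {
  const requests = [];
  for (const trigger of triggers) {
    if (trigger.matches(sensors)) {
      requests.push(...trigger.actions);
    }
  }
  return requests;
}

const triggers = [
  {
    name: "near-harbour",
    matches: (s) => Math.abs(s.gps.latitude - -33.86) < 0.01,
    actions: ["http://host/harbour-info"],
  },
  {
    name: "facing-north",
    matches: (s) => s.compass.orientation < 10 || s.compass.orientation > 350,
    actions: ["javascript:show_north_marker()", "http://host/north-view"],
  },
  // A trigger may match and still link to 0 actions.
  { name: "always", matches: () => true, actions: [] },
];

console.log(fireTriggers(triggers, { gps: { latitude: -33.861 }, compass: { orientation: 5 } }));
```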

> I may need some tutoring on what developing standards means,

Ah...here I just meant SVG, WebGL and the current expansion that's

happening in the CaptureAPI/Video space.

> but in my view, things like ECMA scripting are an unavoidable part of

> complex interactivity.

I agree...but it would be fantastic if we could open a standard that also

helped the browser/solution vendors drive these features up to the user

level.

> Perhaps you can give an example where the cutoff between the current

> request-response model ends and automatic

> data-POI-response-presentation begins?

In its simplest form it can really just be thought of as a funky form

of dynamic bookmark. But these bookmarks are triggered by sensor

patterns. And their responses are presented and integrated into a

standards based web UI (HTML, SVG, WebGL, etc.).

I hope my rant above makes sense...but I'm looking forward to bouncing this

around a lot more to refine the language and knock the rough edges off

this model.

Talk to you soon...

roBman

>

> On Aug 20, 2010, at 10:19 AM, Rob Manson wrote:

>

> > Hi,

> >

> > great to see we're onto the "Next Steps" and we seem to be discussing

> > pretty detailed structures now 8) So I'd like to submit the following

> > proposal for discussion. This is based on our discussion so far and the

> > ideas I think we have achieved some resolution on.

> >

> > I'll look forward to your replies...

> >

> > roBman

> >

> > PS: I'd be particularly interested to hear ideas from the linked data

> > and SSN groups on what parts of their existing work can improve this

> > model and how they think it could be integrated.

> >

> >

> >

> > What is this POI proposal?

> > A simple extension to the "request-response" nature of the HTTP protocol

> > to define a distributed Open AR (Augmented Reality) system.

> > This sensory based pattern recognition system is simply a structured

> > "request-response-link-request-response" chain. In this chain the link

> > is a specific form of transformation.

> >

> > It aims to extend the existing web to be sensor aware and automatically

> > event driven while encouraging the presentation layer to adapt to

> > support dynamic spatialised information more fluidly.

> >

> > One of the great achievements of the web has been the separation of data

> > and presentation. The proposed Open AR structure extends this to

> > separate out: sensory data, triggers, response data and presentation.

> >

> > NOTE1: There is a wide range of serialisation options that could be

> > supported and many namespaces and data structures/ontologies that can be

> > incorporated (e.g. Dublin Core, geo, etc.). The focus of this proposal

> > is purely at a systemic "value chain" level. It is assumed that the

> > definition of serialisation formats, namespace support and common data

> > structures would make up the bulk of the work that the working group

> > will collaboratively define. The goal here is to define a structure

> > that enables this to be easily extended in defined and modular ways.

> >

> > NOTE2: The example JSON-like data structures outlined below are purely

> > to convey the proposed concepts. They are not intended to be realised

> > in this format at all and there is no attachment at this stage to JSON,

> > XML or any other representational format. They are purely conceptual.

> >

> > This proposal is based upon the following structural evolution of

> > devices and client application models:

> >

> > PC Web Browser (Firefox, MSIE, etc.):

> > mouse -> sensors -> dom -> data

> > keyboard -> -> presentation

> >

> > Mobile Web Browser (iPhone, Android, etc.):

> > gestures -> sensors -> dom -> data

> > keyboard -> -> presentation

> >

> > Mobile AR Browser (Layar, Wikitude, Junaio, etc.):

> > gestures -> sensors -> custom app -> presentation

> > [*custom]

> > keyboard -> -> data [*custom]

> > camera ->

> > gps ->

> > compass ->

> >

> > Open AR Browser (client):

> > mouse -> sensors -> triggers -> dom -> presentation

> > keyboard -> -> data

> > camera ->

> > gps ->

> > compass ->

> > accelerom. ->

> > rfid ->

> > ir ->

> > proximity ->

> > motion ->

> >

> > NOTE3: The key next step from Mobile AR to Open AR is the addition of

> > many more sensor types, migrating presentation and data to open web

> > based standards and the addition of triggers. Triggers are explicit

> > links from a pattern to 0 or more actions (web requests).

> >

> > Here is a brief description of each of the elements in this high level

> > value chain.

> >

> > clients:

> > - handle events and request sensory data then filter and link it to 0 or

> > more actions (web requests)

> > - clients can cache trigger definitions locally or request them from one

> > or more services that match one or more specific patterns.

> > - clients can also cache response data and presentation states.

> > - since sensory data, triggers and response data are simply HTTP

> > responses all of the normal cache control structures are already in

> > place.

> >

> > infrastructure (The Internet Of Things):

> > - networked and directly connected sensors and devices that support the

> > Patterns Of Interest specification/standard

> >

> >

> > patterns of interest:

> > The standard HTTP request response processing chain can be seen as:

> >

> > event -> request -> response -> presentation

> >

> > The POI (Pattern Of Interest) value chain is slightly extended.

> > The most common Mobile AR implementation of this is currently:

> >

> > AR App event -> GPS reading -> get nearby info request -> Points Of

> > Interest response -> AR presentation

> >

> > A more detailed view clearly splits events into two to create possible

> > feedback loops. It also splits the request into sensor data and trigger:

> >

> > +- event -+ +-------+-- event --+

> > sensor data --+-> trigger -> response data -> presentation -+

> >

> > - this allows events that happen at both the sensory and presentation

> > ends of the chain.

> > - triggers are bundles that link a pattern to one or more actions (web

> > requests).

> > - events at the sensor end request sensory data and filter it to find

> > patterns that trigger or link to actions.

> > - these triggers or links can also fire other events that load more

> > sensory data that is filtered and linked to actions, etc.

> > - actions return data that can then be presented. As per standard web

> > interactions supported formats can be defined by the requesting client.

> > - events on the presentation side can interact with the data or the

> > presentation itself.

> >

> > sensory data:

> > Simple (xml/json/key-value) representations of sensors and their values

> > at a point in time. These are available via URLs/HTTP requests

> > e.g. sensors can update these files on change, at regular intervals or

> > serve them dynamically.

> > {

> > HEAD : {

> > date_recorded : "Sat Aug 21 00:10:39 EST 2010",

> > source_url : "url"

> > },

> > BODY : {

> > gps : { // based on standard geo data structures

> > latitude : "n.n",

> > longitude : "n.n",

> > altitude : "n",

> > },

> > compass : {

> > orientation : "n"

> > },

> > camera : {

> > image : "url",

> > stream : "url"

> > }

> > }

> > }

> > NOTE: All sensor values could be presented inline or externally via a

> > source URL which could then also reference streams.

> >

> > trigger:

> > structured (xml/json/key-value) filter that defines a pattern and links

> > it to 0 or more actions (web requests)

> > [

> > HEAD : {

> > date_created : "Sat Aug 21 00:10:39 EST 2010",

> > author : "roBman@mob-labs.com",

> > last_modified : "Sat Aug 21 00:10:39 EST 2010"

> > },

> > BODY : {

> > pattern : {

> > gps : [

> > {

> > name : "iphone",

> > id : "01",

> > latitude : {

> > value : "n.n"

> > },

> > longitude : {

> > value : "n.n"

> > },

> > altitude : {

> > value : "n.n"

> > }

> > },

> > // NOTE: GPS value patterns could have their own ranges defined,

> > // but usually the client will just set its own at the filter level.

> > // range : "n",

> > // range_format : "metres"

> > // This is an area where different client applications can add

> > // their unique value.

> > ],

> > cameras : [

> > {

> > name : "home",

> > id : "03",

> > type : "opencv_haar_cascade",

> > pattern : {

> > ...

> > }

> > }

> > ]

> > },

> > actions : [

> > {

> > url : "url",

> > data : {..}, // Support for referring to sensor values such as

> > // $sensors.gps.latitude & $sensors.compass.orientation

> > method : "POST"

> > },

> > ]

> > }

> > ]

> >

> > data

> > HTTP Responses

> >

> > presentation

> > client rendered HTML/CSS/JS/RICH MEDIA (e.g. Images, 3D, Video, Audio,

> > etc.)

> >

> >

> >

> > At least the following roles are supported as extensions of today's

> > common "web value chain" roles.

> >

> > publishers:

> > - define triggers that map specific sensor data patterns to

> > useful actions (web requests)

> > - manage the acl to drive traffic in exchange for value creation

> > - customise the client apps and content to create compelling

> > experiences

> >

> > developers:

> > - create sensor bundles people can buy and install in their own

> > environment

> > - create server applications that allow publishers to register

> > and manage triggers

> > - enable the publishers to make their triggers available to an

> > open or defined set of clients

> > - create the web applications that receive the final actions

> > (web requests)

> > - create the client applications that handle events and map

> > sensor data to requests through triggers (Open AR browsers)

> >

> >

> [1] http://www.web3d.org/x3d/wiki/index.php/CylinderSensor

> [2] http://www.w3.org/2005/Incubator/ssn/charter

> [3]

Attachments

- image/png attachment: OpenARClientStack-01.png

Received on Thursday, 2 September 2010 08:51:53 UTC